Chapter 28: Model Selection

28 Model Selection, Stein Shrinkage, and Model Averaging

28.1 Introduction

The chapter reviews model selection, James-Stein shrinkage, and model averaging.

Model selection is a tool for selecting one model (or estimator) out of a set of models. Different model selection methods are distinguished by the criteria used to rank and compare models.

Model averaging is a generalization of model selection. Models and estimators are averaged using data-dependent weights.

James-Stein shrinkage modifies classical estimators by shrinking towards a reasonable target. Shrinking reduces mean squared error.

Two excellent monographs on model selection and averaging are Burnham and Anderson (1998) and Claeskens and Hjort (2008). James-Stein shrinkage theory is thoroughly covered in Lehmann and Casella (1998). See also Wasserman (2006) and Efron (2010).

28.2 Model Selection

In the course of an applied project an economist will routinely estimate multiple models. Indeed, most applied papers include tables displaying the results from different specifications. The question arises: Which model is best? Which should be used in practice? How can we select the best choice? This is the question of model selection.

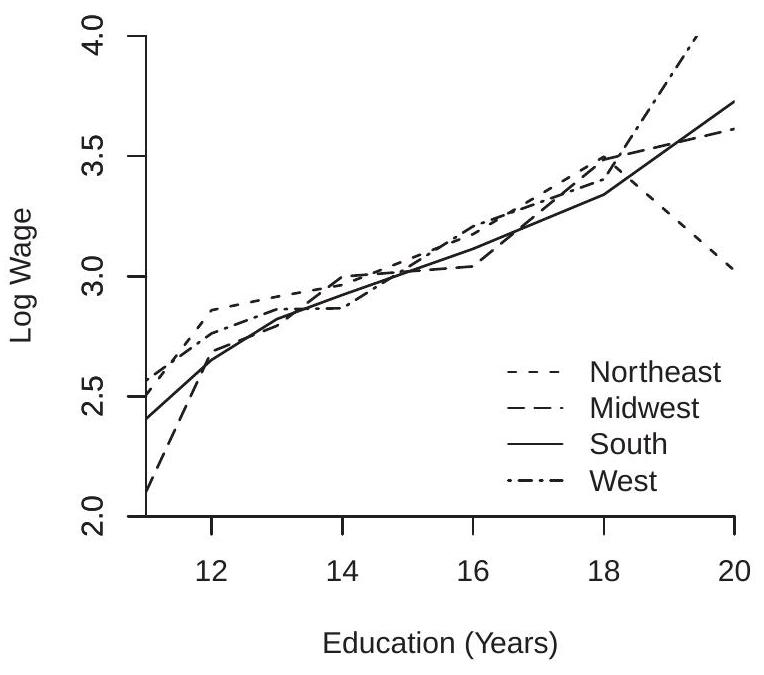

Take, for example, a wage regression. Suppose we want a model which includes education, experience, region, and marital status. How should we proceed? Should we estimate a simple linear model plus a quadratic in experience? Should education enter linearly, a simple spline as in Figure 2.6(a), or with separate dummies for each education level? Should marital status enter as a simple dummy (married or not) or allowing for all recorded categories? Should interactions be included? Which? How many? Taken together we need to select the specific regressors to include in the regression model.

Model “selection” may be mis-named. It would be more appropriate to call the issue “estimator selection”. When we examine a table containing the results from multiple regressions we are comparing multiple estimates of the same regression. One estimator may include fewer variables than another; that is a restricted estimator. One may be estimated by least squares and another by 2SLS. Another could be nonparametric. The underlying model is the same; the difference is the estimator. Regardless, the literature has adopted the term “model selection” and we will adhere to this convention. To gain some basic understanding it may be helpful to start with a stylzed example. Suppose that we have a \(K \times 1\) estimator \(\widehat{\theta}\) which has expectation \(\theta\) and known covariance matrix \(\boldsymbol{V}\). An alternative feasible estimator is \(\widetilde{\theta}=0\). The latter may seem like a silly estimator but it captures the feature that model selection typically concerns exclusion restrictions. In this context we can compare the accuracy of the two estimators by their weighted mean-squared error (WMSE). For a given weight matrix \(\boldsymbol{W}\) define

\[ \text { wmse }[\widehat{\theta}]=\operatorname{tr}\left(\mathbb{E}\left[(\widehat{\theta}-\theta)(\widehat{\theta}-\theta)^{\prime}\right] \boldsymbol{W}\right)=\mathbb{E}\left[(\widehat{\theta}-\theta)^{\prime} \boldsymbol{W}(\widehat{\theta}-\theta)\right] \text {. } \]

The calculations simplify by setting \(\boldsymbol{W}=\boldsymbol{V}^{-1}\) which we do for our remaining calculations.

For our two estimators we calculate that

\[ \begin{aligned} \text { wmse }[\hat{\theta}] &=K \\ \text { wmse }[\widetilde{\theta}] &=\theta^{\prime} \boldsymbol{V}^{-1} \theta \stackrel{\text { def }}{=} \lambda . \end{aligned} \]

(See Exercise 28.1) The WMSE of \(\widehat{\theta}\) is smaller if \(K<\lambda\) and the WMSE of \(\widetilde{\theta}\) is smaller if \(K>\lambda\). One insight from this simple analysis is that we should prefer smaller (simpler) models when potentially omitted variables have small coefficients relative to estimation variance, and should prefer larger (more complicated) models when these variables have large coefficients relative to estimation variance. Another insight is that this choice is infeasible because \(\lambda\) is unknown.

The comparison between (28.1) and (28.2) is a basic bias-variance trade-off. The estimator \(\widehat{\theta}\) is unbiased but has a variance contribution of \(K\). The estimator \(\widetilde{\theta}\) has zero variance but has a squared bias contribution \(\lambda\). The WMSE combines these two components.

Selection based on WMSE suggests that we should ideally select the estimator \(\widehat{\theta}\) if \(K<\lambda\) and select \(\tilde{\theta}\) if \(K>\lambda\). A feasible implementation replaces \(\lambda\) with an estimator. A plug-in estimator is \(\hat{\lambda}=\widehat{\theta}^{\prime} \boldsymbol{V}^{-1} \widehat{\theta}=W\), the Wald statistic for the test of \(\theta=0\). However, the estimator \(\widehat{\lambda}\) has expectation

\[ \mathbb{E}[\widehat{\lambda}]=\mathbb{E}\left[\widehat{\theta}^{\prime} \boldsymbol{V}^{-1} \hat{\theta}\right]=\theta^{\prime} \boldsymbol{V}^{-1 \prime} \theta+\mathbb{E}\left[(\widehat{\theta}-\theta)^{\prime} \boldsymbol{V}^{-1}(\widehat{\theta}-\theta)\right]=\lambda+K \]

so is biased. An unbiased estimator is \(\tilde{\lambda}=\widehat{\lambda}-K\). Notice that \(\tilde{\lambda}>K\) is the same as \(W>2 K\). This leads to the model-selection rule: Use \(\widehat{\theta}\) if \(W>2 K\) and use \(\widetilde{\theta}\) otherwise.

This is an overly-simplistic setting but highlights the fundamental ingredients of criterion-based model selection. Comparing the MSE of different estimators typically involves a trade-off between the bias and variance with more complicated models exhibiting less bias but increased estimation variance. The actual trade-off is unknown because the bias depends on the unknown true parameters. The bias, however, can be estimated, giving rise to empirical estimates of the MSE and empirical model selection rules.

A large number of model selection criteria have been proposed. We list here those most frequently used in applied econometrics.

We first list selection criteria for the linear regression model \(Y=X^{\prime} \beta+e\) with \(\sigma^{2}=\mathbb{E}\left[e^{2}\right]\) and a \(k \times 1\) coefficient vector \(\beta\). Let \(\widehat{\beta}\) be the least squares estimator, \(\widehat{e}_{i}\) the least squares residual, and \(\widehat{\sigma}^{2}=n^{-1} \sum_{i=1}^{n} \widehat{e}_{i}^{2}\) the variance estimator. The number of estimated parameters \(\left(\beta\right.\) and \(\left.\sigma^{2}\right)\) is \(K=k+1\).

28.3 Bayesian Information Criterion

\[ \mathrm{BIC}=n+n \log \left(2 \pi \widehat{\sigma}^{2}\right)+K \log (n) . \]

28.4 Akaike Information Criterion

\[ \mathrm{AIC}=n+n \log \left(2 \pi \widehat{\sigma}^{2}\right)+2 K . \]

28.5 Cross-Validation

\[ \mathrm{CV}=\sum_{i=1}^{n} \widetilde{e}_{i}^{2} \]

where \(\widetilde{e}_{i}\) are the least squares leave-one-out prediction errors.

We next list two commonly-used selection criteria for likelihood-based estimation. Let \(f(y, \theta)\) be a parametric density with a \(K \times 1\) parameter \(\theta\). The likelihood \(L_{n}(\theta)=\prod_{i=1}^{n} f\left(Y_{i}, \theta\right)\) is the density evaluated at the observations. The maximum likelihood estimator \(\widehat{\theta} \operatorname{maximizes} \ell_{n}(\theta)=\log L_{n}(\theta)\).

28.6 Bayesian Information Criterion

\[ \mathrm{BIC}=-2 \ell_{n}(\widehat{\theta})+K \log (n) . \]

28.7 Akaike Information Criterion

\[ \mathrm{AIC}=-2 \ell_{n}(\widehat{\theta})+2 K . \]

In the following sections we derive and discuss these and other model selection criteria.

28.8 Bayesian Information Criterion

The Bayesian Information Criterion (BIC), also known as the Schwarz Criterion, was introduced by Schwarz (1978). It is appropriate for parametric models estimated by maximum likelihood and is used to select the model with the highest approximate probability of being the true model.

Let \(\pi(\theta)\) be the prior density for \(\theta\). The joint density of \(Y\) and \(\theta\) is \(f(y, \theta) \pi(\theta)\). The marginal density of \(Y\) is

\[ p(y)=\int f(y, \theta) \pi(\theta) d \theta \]

The marginal density \(p(Y)\) evaluated at the observations is known as the marginal likelihood.

Schwarz (1978) established the following approximation.

Theorem 28.1 Schwarz. If the model \(f(y, \theta)\) satisfies standard regularity conditions and the prior \(\pi(\theta)\) is diffuse then

\[ -2 \log p(Y)=-2 \ell_{n}(\widehat{\theta})+K \log (n)+O(1) \]

where the \(O(1)\) term is bounded as \(n \rightarrow \infty\).

A heuristic proof for normal linear regression is given in Section 28.32. A “diffuse” prior is one which distributes weight uniformly over the parameter space.

Schwarz’s theorem shows that the marginal likelihood approximately equals the maximized likelihood multiplied by an adjustment depending on the number of estimated parameters and the sample size. The approximation (28.6) is commonly called the Bayesian Information Criterion or BIC. The BIC is a penalized \(\log\) likelihood. The term \(K \log (n)\) can be interpreted as an over-parameterization penalty. The multiplication of the log likelihood by \(-2\) is traditional as it puts the criterion into the same units as a log-likelihood statistic. In the context of normal linear regression we have calculated in (5.6) that

\[ \ell_{n}(\widehat{\theta})=-\frac{n}{2}(\log (2 \pi)+1)-\frac{n}{2} \log \left(\widehat{\sigma}^{2}\right) \]

where \(\widehat{\sigma}^{2}\) is the residual variance estimate. Hence BIC equals (28.3) with \(K=k+1\).

Since \(n \log (2 \pi)+n\) does not vary across models this term is often omitted. It is better, however, to define the BIC as described above so that different parametric families are comparable. It is also useful to know that some authors define the BIC by dividing the above expression by \(n\) (e.g. \(\mathrm{BIC}=\log \left(2 \pi \widehat{\sigma}^{2}\right)+\) \(K \log (n) / n)\) which does not change the rankings between models. However, this is an unwise choice because it alters the scaling, making it difficult to compare the degree of difference between models.

Now suppose that we have two models \(\mathscr{M}_{1}\) and \(\mathscr{M}_{2}\) which have marginal likelihoods \(p_{1}(Y)\) and \(p_{2}(Y)\). Assume that both models have equal prior probability. Bayes Theorem states that the probability that a model is true given the data is proportional to its marginal likelihood. Specifically

\[ \begin{aligned} &\mathbb{P}\left[\mathscr{M}_{1} \mid Y\right]=\frac{p_{1}(Y)}{p_{1}(Y)+p_{2}(Y)} \\ &\mathbb{P}\left[\mathscr{M}_{2} \mid Y\right]=\frac{p_{2}(Y)}{p_{1}(Y)+p_{2}(Y)} . \end{aligned} \]

Bayes selection picks the model with highest probability. Thus if \(p_{1}(Y)>p_{2}(Y)\) we select \(\mathscr{M}_{1}\). If \(p_{1}(Y)<p_{2}(Y)\) we select \(\mathscr{M}_{2}\).

Finding the model with highest marginal likelihood is the same as finding the model with lowest value of \(-2 \log p(Y)\). Theorem \(28.1\) shows that the latter approximately equals the BIC. BIC selection picks the model with the lowest \({ }^{1}\) value of BIC. Thus BIC selection is approximate Bayes selection.

The above discussion concerned two models but applies to any number of models. BIC selection picks the model with the smallest BIC. For implementation you simply estimate each model, calculate its BIC, and compare. model.

The BIC may be obtained in Stata by using the command estimates stats after an estimated

28.9 Akaike Information Criterion for Regression

The Akaike Information Criterion (AIC) was introduced by Akaike (1973). It is used to select the model whose estimated density is closest to the true density. It is designed for parametric models estimated by maximum likelihood.

Let \(\widehat{f}(y)\) be an estimator of the unknown true density \(g(y)\) of the observation vector \(Y=\left(Y_{1}, \ldots, Y_{n}\right)\). For example, the normal linear regression estimate of \(g(y)\) is \(\widehat{f}(y)=\prod_{i=1}^{n} \phi_{\widehat{\sigma}}\left(Y_{i}-X_{i}^{\prime} \widehat{\beta}\right)\).

To measure the distance between the two densities \(g\) and \(\widehat{f}\) Akaike used the Kullback-Leibler information criterion (KLIC)

\[ \operatorname{KLIC}(g, f)=\int g(y) \log \left(\frac{g(y)}{f(y)}\right) d y . \]

Notice that \(\operatorname{KLIC}(g, f)=0\) when \(f(y)=g(y)\). By Jensen’s inequality,

\[ \operatorname{KLIC}(g, f)=-\int g(y) \log \left(\frac{f(y)}{g(y)}\right) d y \geq-\log \int f(y) d y=0 . \]

Thus \(\operatorname{KLIC}(g, f)\) is a non-negative measure of the deviation of \(f\) from \(g\), with small values indicating a smaller deviation.

\({ }^{1}\) When the BIC is negative this means taking the most negative value. The KLIC distance between the true and estimated densities is

\[ \begin{aligned} \operatorname{KLIC}(g, \widehat{f}) &=\int g(y) \log \left(\frac{g(y)}{\widehat{f}(y)}\right) d y \\ &=\int g(y) \log (g(y)) d y-\int g(y) \log (\widehat{f}(y)) d y . \end{aligned} \]

This is random as it depends on the estimator \(\widehat{f}\). Akaike proposed the expected KLIC distance

\[ \mathbb{E}[\operatorname{KLIC}(g, \widehat{f})]=\int g(y) \log (g(y)) d y-\mathbb{E}\left[\int g(y) \log (\widehat{f}(y)) d y\right] . \]

The first term in (28.8) does not depend on the model. So minimization of expected KLIC distance is minimization of the second term. Multiplied by 2 (similarly to the BIC) this is

\[ T=-2 \mathbb{E}\left[\int g(y) \log (\widehat{f}(y)) d y\right] . \]

The expectation is over the random estimator \(\widehat{f}\).

An alternative interpretation is to notice that the integral in (28.9) is an expectation over \(Y\) with respect to the true data density \(g(y)\). Thus we can write (28.9) as

\[ T=-2 \mathbb{E}[\log (\widehat{f}(\widetilde{Y}))] \]

where \(\tilde{Y}\) is an independent copy of \(Y\). The key to understand this expression is that both the estimator \(\widehat{f}\) and the evaluation points \(\widetilde{Y}\) are random and independent. \(T\) is the expected log-likelihood fit using the estimated model \(\widehat{f}\) of an out-of-sample realization \(\widetilde{Y}\). Thus \(T\) can be interpreted as an expected predictive log likelihood. Models with low values of \(T\) have good fit based on the out-of-sample loglikelihood.

To gain further understanding we consider the simple case of the normal linear regression model with \(K\) regressors. The log density of the model for the observations is

\[ \log f(Y, \boldsymbol{X}, \theta)=-\frac{n}{2} \log \left(2 \pi \sigma^{2}\right)-\frac{1}{2 \sigma^{2}} \sum_{i=1}^{n}\left(Y_{i}-X_{i}^{\prime} \beta\right)^{2} . \]

The expected value at the true parameter values is \(-\frac{n}{2} \log \left(2 \pi \sigma^{2}\right)-\frac{n}{2}\). This means that the idealized value of \(T\) is \(T_{0}=n \log \left(2 \pi \sigma^{2}\right)+n\). This would be the value obtained if there were no estimation error.

To simplify the calculations, we add the assumption that the variance \(\sigma^{2}\) is known.

Theorem 28.2 Suppose \(\widehat{f}(y)\) is an estimated normal linear regression model with \(K\) regressors and a known variance \(\sigma^{2}\). Suppose that the true density \(g(y)\) is a conditionally homoskedastic regression with variance \(\sigma^{2}\). Then

\[ \begin{aligned} T &=n \log \left(2 \pi \sigma^{2}\right)+n+K=T_{0}+K \\ \mathbb{E}\left[-2 \ell_{n}(\widehat{\theta})\right] &=n \log \left(2 \pi \sigma^{2}\right)+n-K=T_{0}-K . \end{aligned} \]

The proof is given in Section \(28.32\). These expressions are interesting. Expression (28.12) shows that the expected KLIC distance \(T\) equals the idealized value \(T_{0}\) plus \(K\). The latter is the cost of parameter estimation, measured in terms of expected KLIC distance. By estimating parameters (rather than using the true values) the expected KLIC distance increases by \(K\).

Expression (28.13) shows the converse story. It shows that the sample log-likelihood function is smaller than the idealized value \(T_{0}\) by \(K\). This is the cost of in-sample over-fitting. The sample loglikelihood is an in-sample measure of fit and therefore understates the population log-likelihood. The two expressions together show that the expected sample log-likelihood is smaller than the target value \(T\) by \(2 K\). This is the combined cost of over-fitting and parameter estimation.

Combining these expressions we can suggest an unbiased estimator for \(T\). In the normal regression model we use (28.4). Since \(n \log (2 \pi)+n\) does not vary across models it are often omitted. Thus for linear regression it is common to use the definition \(\mathrm{AIC}=n \log \left(\widehat{\sigma}^{2}\right)+2 K\).

Interestingly the AIC takes a similar form to the BIC. Both the AIC and BIC are penalized log likelihoods, and both penalties are proportional to the number of estimated parameters \(K\). The difference is that the AIC penalty is \(2 K\) while the BIC penalty is \(K \log (n)\). Since \(2<\log (n)\) if \(n \geq 8\) the BIC uses a stronger parameterization penalty.

Selecting a model by the AIC is equivalent to calculating the AIC for each model and selecting the model with the lowest \({ }^{2}\) value.

Theorem 28.3 Under the assumptions of Theorem 28.2, \(\mathbb{E}[\mathrm{AIC}]=T\). AIC is thus an unbiased estimator of \(T\).

One of the interesting features of these results are that they are exact - there is no approximation and they do not require that the true error is normally distributed. The critical assumption is conditional homoskedasticity. If homoskedasticity fails then the AIC loses its validity.

The AIC may be obtained in Stata by using the command estimates stats after an estimated model.

28.10 Akaike Information Criterion for Likelihood

For the general likelihood context Akaike proposed the criterion (28.7). Here, \(\widehat{\theta}\) is the maximum likelihood estimator, \(\ell_{n}(\widehat{\theta})\) is the maximized log-likelihood function, and \(K\) is the number of estimated parameters. This specializes to (28.4) for the case of a normal linear regression model.

As for regression, AIC selection is performed by estimating a set of models, calculating AIC for each, and selecting the model with the smallest AIC.

The advantages of the AIC are that it is simple to calculate, easy to implement, and straightforward to interpret. It is intuitive as it is a simple penalized likelihood.

The disadvantage is that its simplicity may be deceptive. The proof shows that the criterion is based on a quadratic approximation to the log likelihood and an asymptotic chi-square approximation to the classical Wald statistic. When these conditions fail then the AIC may not be accurate. For example, if the model is an approximate (quasi) likelihood rather than a true likelihood then the failure of the information matrix equality implies that the classical Wald statistic is not asymptotically chi-square. In this case the accuracy of AIC fails. Another problem is that many nonlinear models have parameter regions where parametric identification fails. In these models the quadratic approximation to the log

\({ }^{2}\) When the AIC is negative this means taking the most negative value. likelihood function fails to hold uniformly in the parameter space so the accuracy of the AIC fails. These qualifications point to challenges in interpretation of the AIC in nonlinear models.

The following is an analog of Theorem 28.3.

Theorem 28.4 Under standard regularity conditions for maximum likelihood estimation, plus the assumption that certain statistics (identified in the proof) are uniformly integrable, \(\mathbb{E}[\mathrm{AIC}]=T+O\left(n^{1 / 2}\right)\). AIC is thus an approximately unbiased estimator of \(T\)

A sketch of the proof is given in Section \(28.32\).

This result shows that the AIC is, in general, a reasonable estimator of the KLIC fit of an estimated parametric model. The theorem holds broadly for maximum likelihood estimation and thus the AIC can be used in a wide variety of contexts.

28.11 Mallows Criterion

The Mallows Criterion was proposed by Mallows (1973) and is often called the \(C_{p}\) criterion. It is appropriate for linear estimators of homoskedastic regression models.

Take the homoskedastic regression framework

\[ \begin{aligned} Y &=m+e \\ m &=m(X) \\ \mathbb{E}[e \mid X] &=0 \\ \mathbb{E}\left[e^{2} \mid X\right] &=\sigma^{2} . \end{aligned} \]

Write the first equation in vector notation for the \(n\) observations as \(\boldsymbol{Y}=\boldsymbol{m}+\boldsymbol{e}\). Let \(\widehat{\boldsymbol{m}}=\boldsymbol{A} \boldsymbol{Y}\) be a linear estimator of \(\boldsymbol{m}\), meaning that \(\boldsymbol{A}\) is some \(n \times n\) function of the regressor matrix \(\boldsymbol{X}\) only. The residuals are \(\widehat{\boldsymbol{e}}=\boldsymbol{Y}-\widehat{\boldsymbol{m}}\). The class of linear estimators includes least squares, weighted least squares, kernel regression, local linear regression, and series regression. For example, the least squares estimator using a regressor matrix \(\boldsymbol{Z}\) is the case \(\boldsymbol{A}=\boldsymbol{Z}\left(\boldsymbol{Z}^{\prime} \boldsymbol{Z}\right)^{-1} \boldsymbol{Z}^{\prime}\).

Mallows (1973) proposed the criterion

\[ C_{p}=\widehat{\boldsymbol{e}}^{\prime} \widehat{\boldsymbol{e}}+2 \widetilde{\sigma}^{2} \operatorname{tr}(\boldsymbol{A}) \]

where \(\widetilde{\sigma}^{2}\) is a preliminary estimator of \(\sigma^{2}\) (typically based on fitting a large model). In the case of least squares regression with \(K\) coefficients this simplifies to

\[ C_{p}=n \widehat{\sigma}^{2}+2 K \widetilde{\sigma}^{2} . \]

The Mallows crierion can be used similarly to the AIC. A set of regression models are estimated and the criterion \(C_{p}\) calculated for each. The model with the smallest value of \(C_{p}\) is the Mallows-selected model.

Mallows designed the criterion \(C_{p}\) as an unbiased estimator of the following measure of fit

\[ R=\mathbb{E}\left[\sum_{i=1}^{n}\left(\widehat{m}_{i}-m_{i}\right)^{2}\right] . \]

This is the expected squared difference between the estimated and true regressions evaluated at the observations.

An alternative motivation for \(R\) is in terms of prediction accuracy. Consider an independent set of observations \(\widetilde{Y}_{i}, i=1, \ldots, n\), which have the same regressors \(X_{i}\) as those in sample. Consider prediction of \(\widetilde{Y}_{i}\) given \(X_{i}\) and the fitted regression. The least squares predictor is \(\widehat{m}_{i}\). The sum of expected squared prediction errors is

\[ \text { MSFE }=\sum_{i=1}^{n} \mathbb{E}\left[\left(\widetilde{Y}_{i}-\widehat{m}_{i}\right)^{2}\right] . \]

The best possible (infeasible) value of this quantity is

\[ \operatorname{MSFE}_{0}=\sum_{i=1}^{n} \mathbb{E}\left[\left(\widetilde{Y}_{i}-m_{i}\right)^{2}\right] . \]

The difference is the prediction accuracy of the estimator:

\[ \begin{aligned} \operatorname{MSFE}^{-\operatorname{MSFE}_{0}} &=\sum_{i=1}^{n} \mathbb{E}\left[\left(\widetilde{Y}_{i}-\widehat{m}_{i}\right)^{2}\right]-\sum_{i=1}^{n} \mathbb{E}\left[\left(\widetilde{Y}_{i}-m_{i}\right)^{2}\right] \\ &=\mathbb{E}\left[\sum_{i=1}^{n}\left(\widehat{m}_{i}-m_{i}\right)^{2}\right] \\ &=R \end{aligned} \]

which equals Mallows’ measure of fit. Thus \(R\) is a measure of prediction accuracy.

We stated that the Mallows criterion is an unbiased estimator of \(R\). More accurately, the adjusted criterion \(C_{p}^{*}=C_{p}-\boldsymbol{e}^{\prime} \boldsymbol{e}\) is unbiased for \(R\). When comparing models \(C_{p}\) and \(C_{p}^{*}\) are equivalent so this substitution has no consequence for model selection.

Theorem 28.5 If \(\widehat{\boldsymbol{m}}=\boldsymbol{A} \boldsymbol{Y}\) is a linear estimator, the regression error is conditionally mean zero and homoskedastic, and \(\widetilde{\sigma}^{2}\) is unbiased for \(\sigma^{2}\), then

\[ \mathbb{E}\left[C_{p}^{*}\right]=R \]

so the adjusted Mallows criterion \(C_{p}^{*}\) is an unbiased estimator of \(R\).

The proof is given in Section 28.32.

28.12 Hold-Out Criterion

Dividing the sample into two parts, one for estimation and the second for evaluation, creates a simple device for model evaluation and selection. This procedure is often labelled hold-out evaluation. In the recent machine learning literature the data division is typically described as a training sample and a test sample.

The sample is typically divided randomly so that the estimation (training) sample has \(N\) observations and the evaluation (test) sample has \(P\) observations, where \(N+P=n\). There is no universal rule for the choice of \(N \& P\), but \(N=P=n / 2\) is a standard choice. For more complicated procedures, such as the evaluation of model selection methods, it is desirable to make a tripartite division of the sample into (1) training, (2) model selection, and (3) final estimation and assessment. This can be particularly useful when it is desired to obtain a parameter estimator whose distribution is not distorted by the model selection process. Such divisions are most suited for a context of an extremely large sample.

Take the standard case of a bipartite division where \(1 \leq i \leq N\) is the estimation sample and \(N+1 \leq\) \(i \leq N+P\) is the evaluation sample. On the estimation sample we construct the parameter estimates, for example the least squares coefficients

\[ \widetilde{\beta}_{N}=\left(\sum_{i=1}^{N} X_{i} X_{i}^{\prime}\right)^{-1}\left(\sum_{i=1}^{N} X_{i} Y_{i}\right) \]

Combining this coefficient with the evaluation sample we calculate the prediction errors \(\widetilde{e}_{N, i}=Y_{i}-X_{i}^{\prime} \widetilde{\beta}_{N}\) for \(i \geq N+1\).

In Section \(4.12\) we defined the mean squared forecast error (MSFE) based on a estimation sample of size \(N\) as the expectation of the squared out-of-sample prediction error \(\operatorname{MSFE}_{N}=\mathbb{E}\left[\widetilde{e}_{N, i}^{2}\right]\). The hold-out estimator of the MSFE is the average of the squared prediction errors

\[ \widetilde{\sigma}_{N, P}^{2}=\frac{1}{P} \sum_{i=N+1}^{N+P} \widetilde{e}_{N, i}^{2} . \]

We can see that \(\widetilde{\sigma}_{N, P}^{2}\) is unbiased for \(\mathrm{MSFE}_{N}\).

When \(N=P\) we can improve estimation of the MSFE by flipping the procedure. Exchanging the roles of estimation and evaluation samples we obtain a second MSFE estimator, say \(\widetilde{\omega}_{N, P}^{2}\). The global estimator is their average \(\widetilde{\sigma}_{N, P}^{* 2}=\left(\widetilde{\sigma}_{N, P}^{2}+\widetilde{\omega}_{N, P}^{2}\right) / 2\). This estimator also has expectation MSFE \({ }_{N}\) but has reduced variance.

The estimated MSFE \(\widetilde{\sigma}_{N, P}^{* 2}\) can be used for model selection. The quantity \(\widetilde{\sigma}_{N, P}^{* 2}\) is calculated for a set of proposed models. The selected model is the one with the smallest value of \(\widetilde{\sigma}_{N, P}^{* 2}\). The method is intuitive, general, and flexible, and does not rely on technical assumptions.

The hold-out method has two disadvantages. First, if our goal is estimation using the full sample, our desired estimate is \(\mathrm{MSFE}_{n}\), not \(\operatorname{MSFE}_{N}\). Hold-out estimation provides an estimator of the MSFE based on estimation using a substantially reduced sample size, and is thus biased for the MSFE based on estimation using the full sample. Second, the estimator \(\widetilde{\sigma}_{N, P}^{* 2}\) is sensitive to the random sorting of the observations into the estimation and evaluation samples. This affects model selection. Results can depend on the initial sample sorting and are therefore partially arbitrary.

28.13 Cross-Validation Criterion

In applied statistics and machine learning the default method for model selection and tuning parameter selection is cross-validation. We have introduced some of the concepts throughout the textbook, and review and unify the concepts at this point. Cross-validation is closely related to the hold-out criterion introduced in the previous section.

In Section \(3.20\) we defined the leave-one-out estimator as that obtained by applying an estimation formula to the sample omitting the \(i^{t h}\) observation. This is identical to the hold-out problem as described previously, where the estimation sample is \(N=n-1\) and the evaluation sample is \(P=1\). The estimator obtained omitting observation \(i\) is written as \(\widehat{\beta}_{(-i)}\). The prediction error is \(\widetilde{e}_{i}=Y_{i}-X_{i}^{\prime} \widehat{\beta}_{(-i)}\). The out-of-sample mean squared error “estimate” is \(\widetilde{e}_{i}^{2}\). This is repeated \(n\) times, once for each observation \(i\), and the MSFE estimate is the average of the \(n\) squared prediction errors

\[ \mathrm{CV}=\frac{1}{n} \sum_{i=1}^{n} \widetilde{e}_{i}^{2} . \]

The estimator CV is called the cross-validation (CV) criterion. It is a natural generalization of the hold-out criterion and eliminates the two disadvantages described in the previous section. First, the CV criterion is an unbiased estimator of MSFE \({ }_{n-1}\), which is essentially the same as MSFE . Thus CV \(_{n}\). is essentially unbiased for model selection. Second, the CV criterion does not depend on a random sorting of the observations. As there is no random component the criterion takes the same value in any implementation.

In least squares estimation the CV criterion has a simple computational implementation. Theorem 3.7 shows that the leave-one-out least squares estimator (3.42) equals

\[ \widehat{\beta}_{(-i)}=\widehat{\beta}-\frac{1}{\left(1-h_{i i}\right)}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} X_{i} \widehat{e}_{i} \]

where \(\widehat{e}_{i}\) are the least squares residuals and \(h_{i i}\) are the leverage values. The prediction error thus equals

\[ \widetilde{e}_{i}=Y_{i}-X_{i}^{\prime} \widehat{\beta}_{(-i)}=\left(1-h_{i i}\right)^{-1} \widehat{e}_{i} \]

where the second equality is from Theorem 3.7. Consequently the CV criterion is

\[ \mathrm{CV}=\frac{1}{n} \sum_{i=1}^{n} \widetilde{e}_{i}^{2}=\frac{1}{n} \sum_{i=1}^{n}\left(1-h_{i i}\right)^{-2} \widehat{e}_{i}^{2} . \]

Recall as well that in our study of nonparametric regression (Section 19.12) we defined the crossvalidation criterion for kernel regression as the weighted average of the squared prediction errors

\[ \mathrm{CV}=\frac{1}{n} \sum_{i=1}^{n} \tilde{e}_{i}^{2} w\left(X_{i}\right) . \]

Theorem \(19.7\) showed that \(\mathrm{CV}\) is approximately unbiased for the integrated mean squared error (IMSE), which is a standard measure of accuracy for nonparametric regression. These results show that CV is an unbiased estimator for both the MSFE and IMSE, showing a close connection between these measures of accuracy.

In Section \(20.17\) and equation (20.30) we defined the CV criterion for series regression as in (28.5). Selecting variables for series regression is identical to model selection. The results as described above show that the CV criterion is an estimator for the MSFE and IMSE of the regression model and is therefore a good candidate for assessing model accuracy. The validity of the CV criterion is much broader than the AIC as the theorems for CV do not require conditional homoskedasticity. This is not an artifact of the proof method; cross-validation is inherently more robust than AIC or BIC.

Implementation of CV model selection is the same as for the other criteria. A set of regression models are estimated. For each the CV criterion is calculated. The model with the smallest value of CV is the CVselected model.

The CV method is also much broader in concept and potential application. It applies to any estimation method so long as a “leave one out” error can be calculated. It can also be applied to other loss functions beyond squared error loss. For example, a cross-validation estimate of absolute loss is

\[ \mathrm{CV}=\frac{1}{n} \sum_{i=1}^{n}\left|\widetilde{e}_{i}\right| . \]

Computationally and conceptually it is straightforward to select models by minimizing such criterion. However, the properties of applying CV to general criterion is not known.

Stata does not have a standard command to calculate the CV criterion for regression models.

28.14 K-Fold Cross-Validation

There are two deficiencies with the CV criterion which can be alleviated by the closely related K-fold cross-validation criterion. The first deficiency is that CV calculation can be computationally costly when sample sizes are very large or the estimation method is other than least squares. For estimators other than least squares it may be necessary to calculate \(n\) separate estimations. This can be computationally prohibitive in some contexts. A second deficiency is that the CV criterion, viewed as an estimator of \(\operatorname{MSFE}_{n}\), has a high variance. The source is that the leave-one-out estimators \(\widehat{\beta}_{(-i)}\) have minimal variation across \(i\) and are therefore highly correlated.

An alternative is is to split the sample into \(K\) groups (or “folds”) and treat each group as a hold-out sample. This effectively reduces the number of estimations from \(n\) to \(K\). (This \(K\) is not the number of estimated coefficients. I apologize for the possible confusion in notation but this is the standard label.) A common choice is \(K=10\), leading to what is known as \(\mathbf{1 0}\)-fold cross-validation.

The method works by the following steps. This description is for estimation of a regression model \(Y=g(X, \theta)+e\) with estimator \(\widehat{\theta}\)

Randomly sort the observations.

Split the observations into folds \(k=1, \ldots, K\) of (roughly) equal size \(n_{k} \simeq n / K\). Let \(I_{k}\) denote the observations in fold \(k\)

For \(k=1, . ., K\)

Exclude fold \(I_{k}\) from the dataset. This produces a sample with \(n-n_{k}\) observations.

Calculate the estimator \(\widehat{\theta}_{(-k)}\) on this sample.

Calculate the prediction errors \(\widetilde{e}_{i}=Y_{i}-g\left(X_{i}, \widehat{\theta}_{(-k)}\right)\) for \(i \in I_{k}\).

Calculate \(\mathrm{CV}_{k}=n_{k}^{-1} \sum_{i \in I_{k}} \widetilde{e}_{i}^{2}\)

1. Calculate \(\mathrm{CV}=K^{-1} \sum_{k=1}^{K} \mathrm{CV}_{k}\).

If \(K=n\) the method is identical to leave-one-out cross validation.

A useful feature of \(K\)-fold CV is that we can calculate an approximate standard error. It is based on the approximation \(\operatorname{var}[\mathrm{CV}] \simeq K^{-1} \operatorname{var}\left[\mathrm{CV}_{k}\right]\) which is based on the idea that \(\mathrm{CV}_{k}\) are approximately uncorrelated acros folds. This leads to the standard error

\[ s(\mathrm{CV})=\sqrt{\frac{1}{K(K-1)} \sum_{k=1}^{K}\left(\mathrm{CV}_{k}-\mathrm{CV}\right)^{2}} . \]

This is similar to a clustered variance formula, where the folds are treated as clusters. The standard error \(s\) (CV) can be reported to assess the precision of CV as an estimate of the MSFE.

One disadvantage of K-fold cross-validation is that CV can be sensitive to the initial random sorting of the observations, leading to partially arbitrary results. This problem can be reduced by a technique called repeated CV, which repeats the K-fold CV algorithm \(M\) times (each time with a different random sorting), leading to \(M\) values of \(C\). These are averaged to produce the repeated CV value. As \(M\) increases, the randomness due to sorting is eliminated. An associated standard error can be obtained by taking the square root of the average squared standard errors.

CV model selection is typically implemented by selecting the model with the smallest value of CV. An alternative implementation is known as the one standard error (1se) rule and selects the most parsimonious model whose value of CV is within one standard error of the minimum CV. The (informal) idea is that models whose value of \(\mathrm{CV}\) is within one standard error of one another are not statistically distinguishable, and all else held equal we should lean towards parsimony. The 1se rule is the default in the popular cv.glmnet R function. The lse rule is an oversmoothing choice, meaning that it leans towards higher bias and reduced variance. In contrast, for inference many econometricians recommend undersmoothing bandwidths, which means selecting a less parsimonious model than the CV minimizing choice.

28.15 Many Selection Criteria are Similar

For the linear regression model many selection criteria have been introduced. However, many of these alternative criteria are quite similar to one another. In this section we review some of these connections. The following discussion is for the standard regression model \(Y=X^{\prime} \beta+e\) with \(n\) observations, \(K\) estimated coefficients, and least squares variance estimator \(\widehat{\sigma}_{K}^{2}\).

Shibata (1980) proposed the criteria

\[ \text { Shibata }=\widehat{\sigma}_{K}^{2}\left(1+\frac{2 K}{n}\right) \]

as an estimator of the MSFE. Recalling the Mallows criterion for regression (28.15) we see that Shibata = \(C_{p} / n\) if we replace the preliminary estimator \(\widetilde{\sigma}^{2}\) with \(\widehat{\sigma}_{K}^{2}\). Thus the two are quite similar in practice.

Taking logarithms and using the approximation \(\log (1+x) \simeq x\) for small \(x\)

\[ n \log (\text { Shibata })=n \log \left(\widehat{\sigma}_{K}^{2}\right)+n \log \left(1+\frac{2 K}{n}\right) \simeq n \log \left(\widehat{\sigma}_{K}^{2}\right)+2 K=\text { AIC. } \]

Thus minimization of Shibata’s criterion and AIC are similar.

Akaike (1969) proposed the Final Prediction Error Criteria

\[ \mathrm{FPE}=\widehat{\sigma}_{K}^{2}\left(\frac{1+K / n}{1-K / n}\right) . \]

Using the expansions \((1-x)^{-1} \simeq 1+x\) and \((1+x)^{2} \simeq 1+2 x\) we see that \(\mathrm{FPE} \simeq\) Shibata.

Craven and Wahba (1979) proposed Generalized Cross Validation

\[ \mathrm{GCV}=\frac{n \widehat{\sigma}_{K}^{2}}{(n-K)^{2}} . \]

By the expansion \((1-x)^{-2} \simeq 1+2 x\) we find that

\[ n \mathrm{GCV}=\frac{\widehat{\sigma}_{K}^{2}}{(1-K / n)^{2}} \simeq \widehat{\sigma}_{K}^{2}\left(1+\frac{2 K}{n}\right)=\text { Shibata. } \]

The above calculations show that the WMSE, AIC, Shibata, FPE, GCV, and Mallows criterion are all close approximations to one another when \(K / n\) is small. Differences arise in finite samples for large \(K\). However, the above analysis shows that there is no fundamental difference between these criteria. They are all estimating the same target. This is in contrast to BIC which uses a different parameterization penalty and is asymptotically distinct. Interestingly there also is a connection between \(\mathrm{CV}\) and the above criteria. Again using the expansion \((1-x)^{-2} \simeq 1+2 x\) we find that

\[ \begin{aligned} \mathrm{CV} &=\sum_{i=1}^{n}\left(1-h_{i i}\right)^{-2} \widehat{e}_{i}^{2} \\ & \simeq \sum_{i=1}^{n} \widehat{e}_{i}^{2}+\sum_{i=1}^{n} 2 h_{i i} \widehat{e}_{i}^{2} \\ &=n \widehat{\sigma}_{K}^{2}+2 \sum_{i=1}^{n} X_{i}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} X_{i} \widehat{e}_{i}^{2} \\ &=n \widehat{\sigma}_{K}^{2}+2 \operatorname{tr}\left(\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1}\left(\sum_{i=1}^{n} X_{i} X_{i}^{\prime} \widehat{e}_{i}^{2}\right)\right) \\ & \simeq n \widehat{\sigma}_{K}^{2}+2 \operatorname{tr}\left(\left(\mathbb{E}\left[X X^{\prime}\right]\right)^{-1}\left(\mathbb{E}\left[X X^{\prime} e^{2}\right]\right)\right) \\ &=n \widehat{\sigma}_{K}^{2}+2 K \sigma^{2} \\ & \simeq S h i b a t a . \end{aligned} \]

The third-to-last line holds asymptotically by the WLLN. The following equality holds under conditional homoskedasiticity. The final approximation replaces \(\sigma^{2}\) by the estimator \(\widehat{\sigma}_{K}^{2}\). This calculation shows that under the assumption of conditional homoskedasticity the CV criterion is similar to the other criteria. It differs under heteroskedasticity, however, which is one of its primary advantages.

28.16 Relation with Likelihood Ratio Testing

Since the AIC and BIC are penalized log-likelihoods, AIC and BIC selection are related to likelihood ratio testing. Suppose we have two nested models \(\mathscr{M}_{1}\) and \(\mathscr{M}_{2}\) with log-likelihoods \(\ell_{1 n}\left(\widehat{\theta}_{1}\right)\) and \(\ell_{2 n}\left(\widehat{\theta}_{2}\right)\) and \(K_{1}<K_{2}\) estimated parameters. AIC selects \(\mathscr{M}_{1}\) if \(\operatorname{AIC}\left(K_{1}\right)<\operatorname{AIC}\left(K_{2}\right)\) which occurs when

\[ \left.-2 \ell_{1 n}\left(\widehat{\theta}_{1}\right)+2 K_{1}<-2 \ell_{2 n}\left(\widehat{\theta}_{2}\right)\right)+2 K_{2} \]

or

\[ \mathrm{LR}=2\left(\ell_{2 n}\left(\widehat{\theta}_{2}\right)-\ell_{1 n}\left(\widehat{\theta}_{1}\right)\right)<2 r \]

where \(r=K_{2}-K_{1}\). Thus AIC selection is similar to selection by likelihood ratio testing with a different critical value. Rather than using a critical value from the chi-square distribution the “critical value” is \(2 r\). This is not to say that AIC selection is testing (it is not). But rather that there is a similar structure in the decision.

There are two useful practical implications. One is that when test statistics are reported in their \(F\) form (which divide by the difference in coefficients \(r\) ) then the AIC “critical value” is 2 . The AIC selects the restricted (smaller) model if \(F<2\). It selects the unrestricted (larger) model if \(F>2\).

Another useful implication is in the case of considering a single coefficient (when \(r=1\) ). AIC selects the coefficient (the larger model) if \(\mathrm{LR}>2\). In contrast a \(5 %\) significance test “selects” the larger model (rejects the smaller) if LR \(>3.84\). Thus AIC is more generous in terms of selecting larger models. An equivalent way of seeing this is that AIC selects the coefficient if the t-ratio exceeds \(1.41\) while the \(5 %\) significance test selects if the t-ratio exceeds \(1.96\).

Similar comments apply to BIC selection though the effective critical values are different. For comparing models with coefficients \(K_{1}<K_{2}\) the BIC selects \(\mathscr{M}_{1}\) if \(\mathrm{LR}<\log (n) r\). The “critical value” for an \(F\) statistic is \(\log (n)\). Hence BIC selection becomes stricter as sample sizes increase.

28.17 Consistent Selection

An important property of a model selection procedure is whether it selects a true model in large samples. We call such a procedure consistent.

To discuss this further we need to thoughtfully define what is a “true” model. The answer depends on the type of model.

When a model is a parametric density or distribution \(f(y, \theta)\) with \(\theta \in \Theta\) (as in likelihood estimation) then the model is true if there is some \(\theta_{0} \in \Theta\) such that \(f\left(y, \theta_{0}\right)\) equals the true density or distribution. Notice that it is important in this context both that the function class \(f(y, \theta)\) and parameter space \(\Theta\) are appropriately defined.

In a semiparametric conditional moment condition model which states \(\mathbb{E}[g(Y, X, \theta) \mid X]=0\) with \(\theta \in \Theta\) then the model is true if there is some \(\theta_{0} \in \Theta\) such that \(\mathbb{E}\left[g\left(Y, X, \theta_{0}\right) \mid X\right]=0\). This includes the regression model \(Y=m(X, \theta)+e\) with \(\mathbb{E}[e \mid X]=0\) where the model is true if there is some \(\theta_{0} \in \Theta\) such that \(m\left(X, \theta_{0}\right)=\mathbb{E}[Y \mid X]\). It also includes the homoskedastic regression model which adds the requirement that \(\mathbb{E}\left[e^{2} \mid X\right]=\sigma^{2}\) is a constant.

In a semiparametric unconditional moment condition model \(\mathbb{E}[g(Y, X, \theta)]=0\) then the model is true if there is some \(\theta_{0} \in \Theta\) such that \(\mathbb{E}\left[g\left(Y, X, \theta_{0}\right)\right]=0\). A subtle issue here is that when the model is just identified and \(\Theta\) is unrestricted then this condition typically holds and so the model is typically true. This includes least squares regression interpreted as a projection and just-identified instrumental variables regression.

In a nonparametric model such as \(Y \sim f \in \mathscr{F}\) where \(\mathscr{F}\) is some function class (such as second-order differentiable densities) then the model is true if the true density is a member of the function class \(\mathscr{F}\).

A complication arises that there may be multiple true models. This cannot occur when models are strictly non-nested (meaning that there is no common element in both model classes) but strictly nonnested models are rare. Most models have non-trivial intersections. For example, the linear regression models \(Y=\alpha+X_{1}^{\prime} \beta_{1}+e\) and \(Y=\alpha+X_{2}^{\prime} \beta_{2}+e\) with \(X_{1}\) and \(X_{2}\) containing no common elements may appear non-nested but they intersect when \(\beta_{1}=0\) and \(\beta_{2}=0\). As another example consider the linear model \(Y=\alpha+X^{\prime} \beta+e\) and \(\log\)-linear model \(\log (Y)=\alpha+X^{\prime} \beta+e\). If we add the assumption that \(e \sim \mathrm{N}\left(0, \sigma^{2}\right)\) then the models are non-intersecting. But if we relax normality and instead use the conditional mean assumption \(\mathbb{E}[e \mid X]=0\) then the models are intersecting when \(\beta_{1}=0\) and \(\beta_{2}=0\).

The most common type of intersecting models are nested. In regression this occurs when the two models are \(Y=X_{1}^{\prime} \beta_{1}+e\) and \(Y=X_{1}^{\prime} \beta_{1}+X_{2}^{\prime} \beta_{2}+e\). If \(\beta_{2} \neq 0\) then only the second model is true. But if \(\beta_{2}=0\) then both are true models.

In general, given a set of models \(\overline{\mathscr{M}}=\left\{\mathscr{M}_{1}, \ldots, \mathscr{M}_{M}\right\}\) a subset \(\overline{\mathscr{M}}^{*}\) are true models (as described above) while the remainder are not true models.

A model selection rule \(\widehat{M}\) selects one model from the set \(\bar{M}\). We say a method is consistent if it asymptotically selects a true model.

Definition 28.1 A model selection rule is model selection consistent if \(\mathbb{P}\left[\widehat{M} \in \bar{M}^{*}\right] \rightarrow 1\) as \(n \rightarrow \infty\)

This states that the model selection rule selects a true model with probability tending to 1 as the sample size diverges.

A broad class of model selection methods satisfy this definition of consistency. To see this consider the class of information criteria

\[ \mathrm{IC}=-2 \ell_{n}(\widehat{\theta})+c(n, K) . \]

This includes AIC \((c=2 K), \mathrm{BIC}(c=K \log (n))\), and testing-based selection ( \(c\) equals a fixed quantile of the \(\chi_{K}^{2}\) distribution).

Theorem 28.6 Under standard regularity conditions for maximum likelihood estimation, selection based on IC is model selection consistent if \(c(n, K)=o(n)\) as \(n \rightarrow \infty\).

The proof is given in Section \(28.32\).

This result covers AIC, BIC and testing-based selection. Thus all are model selection consistent.

A major limitation with this result is that the definition of model selection consistency is weak. A model may be true but over-parameterized. To understand the distinction consider the models \(Y=\) \(X_{1}^{\prime} \beta_{1}+e\) and \(Y=X_{1}^{\prime} \beta_{1}+X_{2}^{\prime} \beta_{2}+e\). If \(\beta_{2}=0\) then both \(\mathscr{M}_{1}\) and \(\mathscr{M}_{2}\) are true, but \(\mathscr{M}_{1}\) is the preferred model as it is more parsimonious. When two nested models are both true models it is conventional to think of the more parsimonious model as the correct model. In this context we do not describe the larger model as an incorrect model but rather as over-parameterized. If a selection rule asymptotically selects an over-parameterized model we say that it “over-selects”.

Definition 28.2 A model selection rule asymptotically over-selects if there are models \(\mathscr{M}_{1} \subset \mathscr{M}_{2}\) such that \(\liminf _{n \rightarrow \infty} \mathbb{P}\left[\widehat{\mathscr{M}}=\mathscr{M}_{2} \mid \mathscr{M}_{1}\right]>0\).

The definition states that over-selection occurs when two models are nested and the smaller model is true (so both models are true models but the smaller model is more parsimonious) if the larger model is asymptotically selected with positive probability.

Theorem 28.7 Under standard regularity conditions for maximum likelihood estimation, selection based on IC asymptotically over-selects if \(c(n, K)=O(1)\) as \(n \rightarrow \infty\).

The proof is given in Section \(28.32\).

This result includes both AIC and testing-based selection. Thus these procedures over-select. For example, if the models are \(Y=X_{1}^{\prime} \beta_{1}+e\) and \(Y=X_{1}^{\prime} \beta_{1}+X_{2}^{\prime} \beta_{2}+e\) and \(\beta_{2}=0\) holds, then these procedures select the over-parameterized regression with positive probability.

Following this line of reasoning, it is useful to draw a distinction between true and parsimonious models. We define the set of parsimonious models \(\bar{M}^{0} \subset \overline{\mathscr{M}}^{*}\) as the set of true models with the fewest number of parameters. When the models in \(\bar{M}^{*}\) are nested then \(\overline{\mathscr{M}}^{0}\) will be a singleton. In the regression example with \(\beta_{2}=0\) then \(\mathscr{M}_{1}\) is the unique parsimonious model among \(\left\{\mathscr{M}_{1}, \mathscr{M}_{2}\right\}\). We introduce a stronger consistency definition for procedures which asymptotically select parsimonious models. Definition 28.3 A model selection rule is consistent for parsimonious models if \(\mathbb{P}\left[\widehat{\mathscr{M}} \in \overline{\mathscr{M}}^{0}\right] \rightarrow 1\) as \(n \rightarrow \infty\)

Of the methods we have reviewed, only BIC selection is consistent for parsimonious models, as we now show.

Theorem 28.8 Under standard regularity conditions for maximum likelihood estimation, selection based on IC is consistent for parsimonious models if for all \(K_{2}>K_{1}\)

\[ c\left(n, K_{2}\right)-c\left(n, K_{1}\right) \rightarrow \infty \]

as \(n \rightarrow \infty\), yet \(c(n, K)=o(n)\) as \(n \rightarrow \infty\).

The proof is given in Section 28.32.

The condition includes BIC because \(c\left(n, K_{2}\right)-c\left(n, K_{1}\right)=\left(K_{2}-K_{1}\right) \log (n) \rightarrow \infty\) if \(K_{2}>K_{1}\).

Some economists have interpreted Theorem \(28.8\) as indicating that BIC selection is preferred over the other methods. This is an incorrect deduction. In the next section we show that the other selection procedures are asymptotically optimal in terms of model fit and in terms of out-of-sample forecasting. Thus consistent model selection is only one of several desirable statistical properties.

28.18 Asymptotic Selection Optimality

Regressor selection by the AIC/Shibata/Mallows/CV class turns out to be asymptotically optimal with respect to out-of-sample prediction under quite broad conditions. This may appear to conflict with the results of the previous section but it does not as there is a critical difference between the goals of consistent model selection and accurate prediction.

Our analysis will be in the homoskedastic regression model conditioning on the regressor matrix \(\boldsymbol{X}\). We write the regression model as

\[ \begin{aligned} Y &=m+e \\ m &=\sum_{j=1}^{\infty} X_{j} \beta_{j} \\ \mathbb{E}[e \mid X] &=0 \\ \mathbb{E}\left[e^{2} \mid X\right] &=\sigma^{2} \end{aligned} \]

where \(X=\left(X_{1}, X_{2}, \ldots\right)\). We can also write the regression equation in matrix notation as \(\boldsymbol{Y}=\boldsymbol{m}+\boldsymbol{e}\).

The \(K^{t h}\) regression model uses the first \(K\) regressors \(X_{K}=\left(X_{1}, X_{2}, \ldots, X_{K}\right)\). The least squares estimates in matrix notation are

\[ \boldsymbol{Y}=\boldsymbol{X}_{K} \widehat{\beta}_{K}+\widehat{\boldsymbol{e}}_{K} . \]

As in Section \(28.6\) define the fitted values \(\widehat{\boldsymbol{m}}=\boldsymbol{X}_{K} \widehat{\beta}_{K}\) and regression fit (sum of expected squared prediction errors) as

\[ R_{n}(K)=\mathbb{E}\left[(\widehat{\boldsymbol{m}}-\boldsymbol{m})^{\prime}(\widehat{\boldsymbol{m}}-\boldsymbol{m}) \mid \boldsymbol{X}\right] \]

though now we index \(R\) by sample size \(n\) and model \(K\).

In any sample there is an optimal model \(K\) which minimizes \(R_{n}(K)\) :

\[ K_{n}^{\mathrm{opt}}=\underset{K}{\operatorname{argmin}} R_{n}(K) . \]

Model \(K_{n}^{\text {opt }}\) obtains the minimized value of \(R_{n}(K)\)

\[ R_{n}^{\mathrm{opt}}=R_{n}\left(K_{n}^{\mathrm{opt}}\right)=\min _{K} R_{n}(K) . \]

Now consider model selection using the Mallow’s criterion for regression models

\[ C_{p}(K)=\widehat{\boldsymbol{e}}_{K}^{\prime} \widehat{\boldsymbol{e}}_{K}+2 \sigma^{2} K \]

where we explicitly index by \(K\), and for simplicity we assume the error variance \(\sigma^{2}\) is known. (The results are unchanged if it is replaced by a consistent estimator.) Let the selected model be

\[ \widehat{K}_{n}=\underset{K}{\operatorname{argmin}} C_{p}(K) . \]

Prediction accuracy using the Mallows-selected model is \(R_{n}\left(\widehat{K}_{n}\right)\). We say that a selection procedure is asymptotically optimal if the prediction accuracy is asymptotically equivalent with the infeasible optimum. This can be written as

\[ \frac{R_{n}\left(\widehat{K}_{n}\right)}{R_{n}^{\mathrm{opt}}} \underset{p}{\longrightarrow} 1 . \]

We consider convergence in (28.18) in terms of the risk ratio because \(R_{n}^{\text {opt }}\) diverges as the sample size increases.

Li (1987) established the asymptotic optimality (28.18). His result depends on the following conditions.

The observations \(\left(Y_{i}, X_{\boldsymbol{i}}\right), i=1, \ldots, n\), are independent and identically distributed.

\(\mathbb{E}[e \mid X]=0\).

\(\mathbb{E}\left[e^{2} \mid X\right]=\sigma^{2}\).

\(\mathbb{E}\left[|e|^{4 r} \mid X\right] \leq B<\infty\) for some \(r>1\).

\(R_{n}^{\mathrm{opt}} \rightarrow \infty\) as \(n \rightarrow \infty\)

The estimated models are nested.

Assumptions 28.1.2 and 28.1.3 state that the true model is a conditionally homoskedastic regression. Assumption 28.1.4 is a technical condition, that a conditional moment of the error is uniformly bounded. Assumption 28.1.5 is subtle. It effectively states that there is no correctly specified finite-dimensional model. To see this, suppose that there is a \(K_{0}\) such that the model is correctly specified, meaning that \(m_{i}=\sum_{j=1}^{K_{0}} X_{j i} \beta_{j}\). In this case we can show that for \(K \geq K_{0}, R_{n}(K)=R_{n}\left(K_{0}\right)\) does not change with \(n\), violating Assumption 28.1.5. Assumption 28.1.6 is a technical condition that restricts the number of estimated models. This assumption can be generalized to allow non-nested models, but in this case an alternative restriction on the number of estimated models is needed.

Theorem 28.9 Assumption \(28.1\) implies (28.18). Thus Mallows selection is asymptotically equivalent to using the infeasible optimal model.

The proof is given in Section 28.32.

Theorem \(28.9\) states that Mallows selection in a conditional homoskedastic regression is asymptotically optimal. The key assumptions are homoskedasticity and that all finite-dimensional models are misspecified (incomplete), meaning that there are always omitted variables. The latter means that regardless of the sample size there is always a trade-off between omitted variables bias and estimation variance. The theorem as stated is specific for Mallows selection but extends to AIC, Shibata, GCV, FPE, and CV with some additional technical considerations. The primary message is that the selection methods discussed in the previous section asymptotically select a sequence of models which are best-fitting in the sense of minimizing the prediction error.

Using a similar argument, Andrews (1991c) showed that selection by cross-validation satisfies the same asymptotic optimality condition without requiring conditional homoskedasticity. The treatment is a bit more technical so we do not review it here. This indicates an important advantage for crossvalidation selection over the other methods.

28.19 Focused Information Criterion

Claeskens and Hjort (2003) introduced the Focused Information Criterion (FIC) as an estimator of the MSE of a scalar parameter. The criterion is appropriate in correctly-specified likelihood models when one of the estimated models nests the other models. Let \(f(y, \theta)\) be a parametric model density with a \(K \times 1\) parameter \(\theta\).

The class of models (sub-models) allowed are those defined by a set of differentiable restrictions \(r(\theta)=0\). Let \(\widetilde{\theta}\) be the restricted MLE which maximizes the likelihood subject to \(r(\theta)=0\).

A key feature of the FIC is that it focuses on a real-valued parameter \(\mu=g(\theta)\) where \(g\) is some differentiable function. Claeskens and Hjort call \(\mu\) the target parameter. The choice of \(\mu\) is made by the researcher and is a critical choice. In most applications \(\mu\) is the key coefficient in the application (for example, the returns to schooling in a wage regression). The unrestricted MLE for \(\mu\) is \(\widehat{\mu}=g(\widehat{\theta})\), the restricted MLE is \(\widetilde{\mu}=g(\widetilde{\theta})\).

Estimation accuracy is measured by the MSE of the estimator of the target parameter, which is the squared bias plus the variance:

\[ \operatorname{mse}[\widetilde{\mu}]=\mathbb{E}\left[(\widetilde{\mu}-\mu)^{2}\right]=(\mathbb{E}[\widetilde{\mu}]-\mu)^{2}+\operatorname{var}[\widetilde{\mu}] . \]

It turns out to be convenient to normalize the MSE by that of the unrestricted estimator. We define this as the Focus

\[ \mathrm{F}=\operatorname{mse}[\widetilde{\mu}]-\operatorname{mse}[\widehat{\mu}] . \]

The Claeskens-Hjort FIC is an estimator of F. Specifically,

\[ \mathrm{FIC}=(\widetilde{\mu}-\widehat{\mu})^{2}-2 \widehat{\boldsymbol{G}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\theta}} \widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\theta}} \widehat{\boldsymbol{R}}^{-1} \widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\theta}} \widehat{\boldsymbol{G}}\right. \]

where \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}, \widehat{\boldsymbol{G}}\) and \(\widehat{\boldsymbol{R}}\) are estimators of \(\operatorname{var}[\widehat{\theta}], \boldsymbol{G}=\frac{\partial}{\partial \theta^{\prime}} g(\theta)\) and \(\boldsymbol{R}=\frac{\partial}{\partial \theta^{\prime}} r(\theta)\).

In a least squares regression \(\boldsymbol{Y}=\boldsymbol{X} \beta+\boldsymbol{e}\) with a linear restriction \(\boldsymbol{R}^{\prime} \beta=0\) and linear parameter of interest \(\mu=\boldsymbol{G}^{\prime} \beta\) the FIC equals

\[ \begin{aligned} \mathrm{FIC} &=\left(\boldsymbol{G}^{\prime} \boldsymbol{R}\left(\boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\right)^{-1} \boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \widehat{\beta}\right)^{2} \\ &-2 \widehat{\sigma}^{2} \boldsymbol{G}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\left(\boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\right)^{-1} \boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{G} . \end{aligned} \]

The FIC is used similarly to AIC. The FIC is calculated for each sub-model of interest and the model with the lowest value of FIC is selected.

The advantage of the FIC is that it is specifically targeted to minimize the MSE of the target parameter. The FIC is therefore appropriate when the goal is to estimate a specific target parameter. A disadvantage is that it does not necessarily produce a model with good estimates of the other parameters. For example, in a linear regression \(Y=X_{1} \beta_{1}+X_{2} \beta_{2}+e\), if \(X_{1}\) and \(X_{2}\) are uncorrelated and the focus parameter is \(\beta_{1}\) then the FIC will tend to select the sub-model without \(X_{2}\), and thus the selected model will produce a highly biased estimate of \(\beta_{2}\). Consequently when using the FIC it is dubious if attention should be paid to estimates other than those of \(\mu\).

Computationally it may be convenient to implement the FIC using an alternative formulation. Define the adjusted focus

\[ \mathrm{F}^{*}=n(\mathrm{~F}+2 \operatorname{mse}[\widehat{\mu}])=n(\operatorname{mse}[\widetilde{\mu}]+\operatorname{mse}[\widehat{\mu}]) . \]

This adds the same quantity to all models and therefore does not alter the minimizing model. Multiplication by \(n\) puts the FIC in units which are easier for reporting. The estimate of the adjusted focus is an adjusted FIC and can be written as

\[ \begin{aligned} \text { FIC }^{*} &=n(\widetilde{\mu}-\widehat{\mu})^{2}+2 n \widehat{\boldsymbol{V}}_{\widetilde{\mu}} \\ &=n(\widetilde{\mu}-\widehat{\mu})^{2}+2 n s(\widetilde{\mu})^{2} \end{aligned} \]

where

\[ \widehat{\boldsymbol{V}}_{\widetilde{\mu}}=\widehat{\boldsymbol{G}}^{\prime}\left(\boldsymbol{I}_{k}-\widehat{\boldsymbol{V}}_{\widehat{\theta}} \widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\theta}} \widehat{\boldsymbol{R}}\right)^{-1} \widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\theta}}\right) \widehat{\boldsymbol{G}} \]

is an estimator of \(\operatorname{var}[\widetilde{\mu}]\) and \(s(\widetilde{\mu})=\widehat{V}_{\widetilde{\mu}}^{1 / 2}\) is a standard error for \(\widetilde{\mu}\).

This means that \(\mathrm{FIC}^{*}\) can be easily calculated using conventional software without additional programming. The estimator \(\widehat{\mu}\) can be calculated from the full model (the long regression) and the estimator \(\widetilde{\mu}\) and its standard error \(s(\widetilde{\mu})\) from the restricted model (the short regression). The formula (28.20) can then be applied to obtain FIC \(^{*}\).

The formula (28.19) also provides an intuitive understanding of the FIC. When we minimize FIC* we are minimizing the variance of the estimator of the target parameter \(\left(\widehat{\boldsymbol{V}}_{\widetilde{\mu}}\right)\) while not altering the estimate \(\widetilde{\mu}\) too much from the unrestricted estimate \(\widehat{\mu}\).

When selecting from amongst just two models, the FIC selects the restricted model if \((\widetilde{\mu}-\widehat{\mu})^{2}+2 \widehat{\boldsymbol{V}}_{\widetilde{\mu}}<\) 0 which is the same as \((\widetilde{\mu}-\widehat{\mu})^{2} / \widehat{V}_{\widetilde{\mu}}<2\). The statistic to the left of the inequality is the squared t-statistic in the restricted model for testing the hypothesis that \(\mu\) equals the unrestricted estimator \(\widehat{\mu}\) but ignoring the estimation error in the latter. Thus a simple implementation (when just comparing two models) is to estimate the long and short regressions, take the difference in the two estimates of the coefficient of interest, and compute a t-ratio using the standard error from the short (restricted) regression. If this t-ratio exceeds \(1.4\) the FIC selects the long regression estimate. If the t-ratio is smaller than \(1.4\) the FIC selects the short regression estimate. Claeskens and Hjort motivate the FIC using a local misspecification asymptotic framework. We use a simpler heuristic motivation. First take the unrestricted MLE. Under standard conditions \(\widehat{\mu}\) has asymptotic variance \(\boldsymbol{G}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{G}\) where \(\boldsymbol{V}_{\theta}=\mathscr{I}^{-1}\). As the estimator is asymptotically unbiased it follows that

\[ \operatorname{mse}[\widehat{\mu}] \simeq \operatorname{var}[\widehat{\mu}] \simeq n^{-1} \boldsymbol{G}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{G} . \]

Second take the restricted MLE. Under standard conditions \(\widetilde{\mu}\) has asymptotic variance

\[ \boldsymbol{G}^{\prime}\left(\boldsymbol{V}_{\theta}-\boldsymbol{V}_{\theta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\right)^{-1} \boldsymbol{R} \boldsymbol{V}_{\theta}\right) \boldsymbol{G} . \]

\(\widetilde{\mu}\) also has a probability limit, say \(\mu_{R}\), which (generally) differs from \(\mu\). Together we find that

\[ \operatorname{mse}[\widetilde{\mu}] \simeq B+n^{-1} \boldsymbol{G}^{\prime}\left(\boldsymbol{V}_{\theta}-\boldsymbol{V}_{\theta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\right)^{-1} \boldsymbol{R} \boldsymbol{V}_{\theta}\right) \boldsymbol{G} \]

where \(B=\left(\mu-\mu_{R}\right)^{2}\). Subtracting, we find that the Focus is

\[ \mathrm{F} \simeq B-n^{-1} \boldsymbol{G}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\right)^{-1} \boldsymbol{R} \boldsymbol{V}_{\theta} \boldsymbol{G} . \]

The plug-in estimator \(\widehat{B}=(\widehat{\mu}-\widetilde{\mu})^{2}\) of \(B\) is biased because

\[ \begin{aligned} \mathbb{E}[\widehat{B}] &=(\mathbb{E}[\widehat{\mu}-\widetilde{\mu}])^{2}+\operatorname{var}[\widehat{\mu}-\widetilde{\mu}] \\ & \simeq B+\operatorname{var}[\widehat{\mu}]-\operatorname{var}[\widetilde{\mu}] \\ & \simeq B+n^{-1} \boldsymbol{G}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\right)^{-1} \boldsymbol{R}_{\theta} \boldsymbol{G} . \end{aligned} \]

It follows that an approximately unbiased estimator for \(F\) is

\[ \widehat{B}-2 n^{-1} \boldsymbol{G}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\theta} \boldsymbol{R}\right)^{-1} \boldsymbol{R} \boldsymbol{V}_{\theta} \boldsymbol{G} . \]

The FIC is obtained by replacing the unknown \(\boldsymbol{G}, \boldsymbol{R}\), and \(n^{-1} \boldsymbol{V}_{\theta}\) by estimates.

28.20 Best Subset and Stepwise Regression

Suppose that we have a set of potential regressors \(\left\{X_{1}, \ldots, X_{K}\right\}\) and we want to select a subset of the regressors to use in a regression. Let \(S_{m}\) denote a subset of the regressors, and let \(m=1, \ldots, M\) denote the set of potential subsets. Given a model selection criterion (e.g. AIC, Mallows, or CV) the best subset model is the one which minimizes the criterion across the \(M\) models. This is implemented by estimating the \(M\) models and comparing the model selection criteria.

If \(K\) is small it is computationally feasible to compare all subset models. However, when \(K\) is large this may not be feasible. This is because the number of potential subsets is \(M=2^{K}\) which increases quickly with \(K\). For example, \(K=10\) implies \(M=1024, K=20\) implies \(M \geq 1,000,000\), and \(K=40\) implies \(M\) exceeds one trillion. It simply does not make sense to estimate all subset regressions in such cases.

If the goal is to find the set of regressors which produces the smallest selection criterion it seems likely that we should be able to find an approximating set of regressors at much reduced computation cost. Some specific algorithms to implement this goal are as called stepwise, stagewise, and least angle regression. None of these procedures actually achieve the goal of minimizing any specific selection criterion; rather they are viewed as useful computational approximations. There is also some potential confusion as different authors seem to use the same terms for somewhat different implementations. We use the terms here as described in Hastie, Tibshirani, and Friedman (2008).

In the following descriptions we use \(\operatorname{SSE}(m)\) to refer to the sum of squared residuals from a fitted model and \(C(m)\) to refer to the selection criterion used for model comparison (AIC is most typically used).

28.21 Backward Stepwise Regression

Start with all regressors \(\left\{X_{1}, \ldots, X_{K}\right\}\) included in the “active set”.

For \(m=0, \ldots, K-1\)

Estimate the regression of \(Y\) on the active set.

Identify the regressor whose omission will have the smallest impact on \(C(m)\).

Put this regressor in slot \(K-m\) and delete from the active set.

Calculate \(C(m)\) and store in slot \(K-m\).

1. The model with the smallest value of \(C(m)\) is the selected model.

Backward stepwise regression requires \(K<n\) so that regression with all variables is feasible. It produces an ordering of the regressors from “most relevant” to “least relevant”. A simplified version is to exit the loop when \(C(m)\) increases. (This may not yield the same result as completing the loop.) For the case of AIC selection, step (b) can be implemented by calculating the classical (homoskedastic) t-ratio for each active regressor and find the regressor with the smallest absolute t-ratio. (See Exercise 28.3.)

28.22 Forward Stepwise Regression

Start with the null set \(\{\varnothing\}\) as the “active set” and all regressors \(\left\{X_{1}, \ldots, X_{K}\right\}\) as the “inactive set”.

For \(m=1, \ldots, \min (n-1, K)\)

Estimate the regression of \(Y\) on the active set.

Identify the regressor in the inactive set whose inclusion will have the largest impact on \(C(m)\).

Put this regressor in slot \(m\) and move it from the inactive to the active set.

Calculate \(C(m)\) and store in slot \(m\).

1. The model with the smallest value of \(C(m)\) is the selected model.

A simplified version is to exit the loop when \(C(m)\) increases. (This may not yield the same answer as completing the loop.) For the case of AIC selection step (b) can be implemented by finding the regressor in the inactive set with the largest absolute correlation with the residual from step (a). (See Exercise 28.4.)

There are combined algorithms which check both forward and backward movements at each step. The algorithms can also be implemented with the regressors organized in groups (so that all elements are either included or excluded at each step). There are also old-fashioned versions which use significance testing rather than selection criterion (this is generally not advised).

Stepwise regression based on old-fashioned significance testing can be implemented in Stata using the stepwise command. If attention is confined to models which include regressors one-at-a-time, AIC selection can be implemented by setting the significance level equal to \(p=0.32\). Thus the command stepwise, \(\operatorname{pr}\) (.32) implements backward stepwise regression with the AIC criterion, and stepwise, pe (.32) implements forward stepwise regression with the AIC criterion.

Stepwise regression can be implemented in R using the lars command.

28.23 The MSE of Model Selection Estimators

Model selection can lead to estimators with poor sampling performance. In this section we show that the mean squared error of estimation is not necessarily improved, and can be considerably worsened, by model selection.

To keep things simple consider an estimator with an exact normal distribution and known covariance matrix. Normalizing the latter to the identity we consider the setting

\[ \widehat{\theta} \sim \mathrm{N}\left(\theta, I_{K}\right) \]

and the class of model selection estimators

\[ \widehat{\theta}_{\mathrm{pms}}=\left\{\begin{array}{lll} \widehat{\theta} & \text { if } & \widehat{\theta}^{\prime} \widehat{\theta}>c \\ 0 & \text { if } & \widehat{\theta}^{\prime} \hat{\theta} \leq c \end{array}\right. \]

for some \(c\). AIC sets \(c=2 K\), BIC sets \(c=K \log (n)\), and \(5 %\) significance testing sets \(c\) to equal the \(95 %\) quantile of the \(\chi_{K}^{2}\) distribution. It is common to call \(\widehat{\theta}_{\mathrm{pms}}\) a post-model-selection (PMS) estimator

We can explicitly calculate the MSE of \(\widehat{\theta}_{\mathrm{pms}}\).

Theorem 28.10 If \(\widehat{\theta} \sim \mathrm{N}\left(\theta, \boldsymbol{I}_{K}\right)\) then

\[ \operatorname{mse}\left[\widehat{\theta}_{\mathrm{pms}}\right]=K+(2 \lambda-K) F_{K+2}(c, \lambda)-\lambda F_{K+4}(c, \lambda) \]

where \(F_{r}(x, \lambda)\) is the non-central chi-square distribution function with \(r\) degrees of freedom and non-centrality parameter \(\lambda=\theta^{\prime} \theta\).

The proof is given in Section \(28.32\).

The MSE is determined only by \(K, \lambda\), and \(c . \lambda=\theta^{\prime} \theta\) turns out to be an important parameter for the MSE. As the squared Euclidean length, it indexes the magnitude of the coefficient \(\theta\).

We can see the following limiting cases. If \(\lambda=0\) then mse \(\left[\widehat{\theta}_{\mathrm{pms}}\right]=K\left(1-F_{K+2}(c, 0)\right)\). As \(\lambda \rightarrow \infty\) then mse \(\left[\widehat{\theta}_{\mathrm{pms}}\right] \rightarrow K\). The unrestricted estimator obtains if \(c=0\), in which case mse \(\left[\widehat{\theta}_{\mathrm{pms}}\right]=K\). As \(c \rightarrow \infty\), mse \(\left[\widehat{\theta}_{\mathrm{pms}}\right] \rightarrow \lambda\). The latter fact implies that the PMS estimator based on the BIC has MSE \(\rightarrow \infty\) as \(n \rightarrow \infty\).

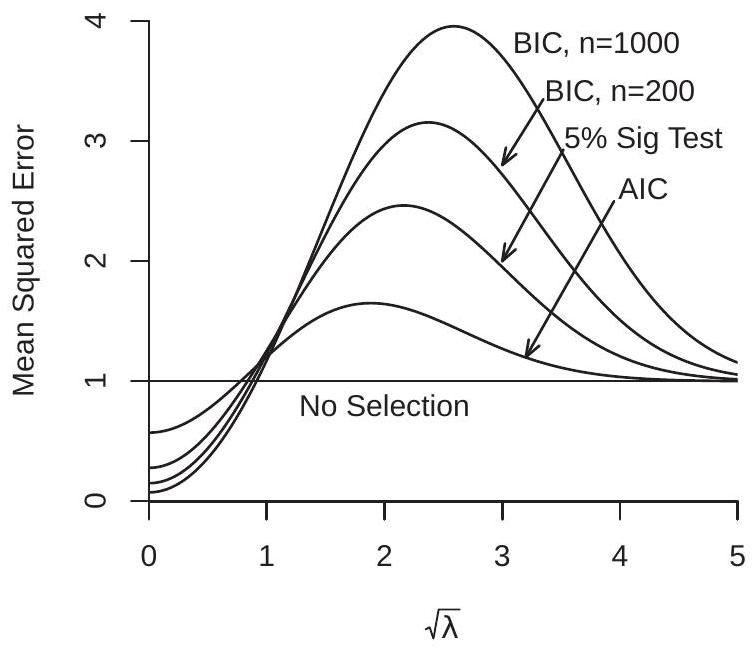

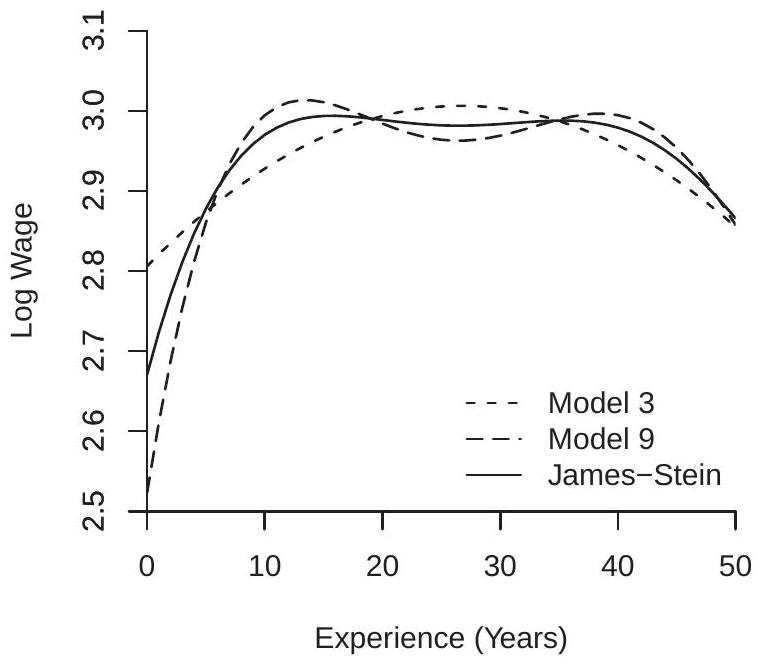

Using Theorem \(28.10\) we can numerically calculate the MSE. In Figure 28.1(a) and (b) we plot the MSE of a set of estimators for a range of values of \(\sqrt{\lambda}\). Panel (a) is for \(K=1\), panel (b) is for \(K=5\). Note that the MSE of the unselected estimator \(\widehat{\theta}\) is invariant to \(\lambda\), so its MSE plot is a flat line at \(K\). The other estimators plotted are AIC selection ( \(c=2 K\) ), 5% significance testing selection (chi-square critical value), and BIC selection \((c=K \log (n))\) for \(n=200\) and \(n=1000\).

In the plots you can see that the PMS estimators have lower MSE than the unselected estimator roughly for \(\lambda<K\) but higher MSE for \(\lambda>K\). The AIC estimator has MSE which is least distorted from the unselected estimator, reaching a peak of about \(1.5\) for \(K=1\). The BIC estimators, however, have very large MSE for larger values of \(\lambda\), and the distortion is growing as \(n\) increases. The MSE of the selection estimators increases with \(\lambda\) until it reaches a peak and then slowly decreases and asymptotes back to \(K\). Furthermore, the MSE of BIC is unbounded as \(n\) diverges. Thus for very large sample sizes the MSE of a BIC-selected estimator can be a very large multiple of the MSE of the unselected estimator. The plots show that if \(\lambda\) is small there are advantages to model selection as MSE can be greatly reduced. However if \(\lambda\) is large then MSE can be greatly increased if BIC is used, and moderately increased if AIC is used. A sensible reading of the plots leads to the practical recommendation to not use the BIC for model selection, and use the AIC with care.

- MSE, \(K=1\)

.jpg)

- MSE, \(K=5\)

Figure 28.1: MSE of Post-Model-Selection Estimators

The numerical calculations show that MSE is reduced by selection when \(\lambda\) is small but increased when \(\lambda\) is moderately large. What does this mean in practice? \(\lambda\) is small when \(\theta\) is small which means the compared models are similar in terms of estimation accuracy. In these contexts model selection can be valuable as it helps select smaller models to improve precision. However when \(\lambda\) is moderately large (which means that \(\theta\) is moderately large) the smaller model has meaningful omitted variable bias, yet the selection criteria have difficulty detecting which model to use. The conservative BIC selection procedure tends to select the smaller model and thus incurs greater bias resulting in high MSE. These considerations suggest that it is better to use the AIC when selecting among models with similar estimation precision. Unfortunately it is impossible to known a priori the appropriate models.

The results of this section may appear to contradict Theorem \(28.8\) which showed that the BIC is consistent for parsimonious models as for all \(\lambda>0\) in the plots the correct parsimonious model is the larger model. Yet BIC is not selecting this model with sufficient frequency to produce a low MSE. There is no contradiction. The consistency of the BIC appears in the lower portion of the plots where the MSE of the BIC estimator is very small, and approaching zero as \(\lambda \rightarrow 0\). The fact that the MSE of the AIC estimator somewhat exceeds that of the BIC in this region is due to the over-selection property of the AIC.

28.24 Inference After Model Selection

Economists are typically interested in inferential questions such as hypothesis tests and confidence intervals. If an econometric model has been selected by a procedure such as AIC or CV what are the properties of statistical tests applied to the selected model?

To be concrete, consider the regression model \(Y=X_{1} \beta_{1}+X_{2} \beta_{2}+e\) and selection of the variable \(X_{2}\). That is, we compare \(Y=X_{1} \beta_{1}+e\) with \(Y=X_{1} \beta_{1}+X_{2} \beta_{2}+e\). It is not too deep a realization that in this context it is inappropriate to conduct conventional inference for \(\beta_{2}\) in the selected model. If we select the smaller model there is no estimate of \(\beta_{2}\). If we select the larger it is because the \(\mathrm{t}\)-ratio for \(\beta_{2}\) exceeds the critical value. The distribution of the t-ratio, conditional on exceeding a critical value, is not conventionally distributed and there seems little point to push this issue further.

The more interesting and subtle question is the impact on inference concerning \(\beta_{1}\). This indeed is a context of typical interest. An economist is interested in the impact of \(X_{1}\) on \(Y\) given a set of controls \(X_{2}\). It is common to select across these controls to find a suitable empirical model. Once this has been obtained we want to make inferential statements about \(\beta_{1}\). Has selection over the controls impacted inference?