Chapter 10: Resampling Methods

10 Resampling Methods

10.1 Introduction

So far in this textbook we have discussed two approaches to inference: exact and asymptotic. Both have their strengths and weaknesses. Exact theory provides a useful benchmark but is based on the unrealistic and stringent assumption of the homoskedastic normal regression model. Asymptotic theory provides a more flexible distribution theory but is an approximation with uncertain accuracy.

In this chapter we introduce a set of alternative inference methods which are based around the concept of resampling - which means using sampling information extracted from the empirical distribution of the data. These are powerful methods, widely applicable, and often more accurate than exact methods and asymptotic approximations. Two disadvantages, however, are (1) resampling methods typically require more computation power; and (2) the theory is considerably more challenging. A consequence of the computation requirement is that most empirical researchers use asymptotic approximations for routine calculations while resampling approximations are used for final reporting.

We will discuss two categories of resampling methods used in statistical and econometric practice: jackknife and bootstrap. Most of our attention will be given to the bootstrap as it is the most commonly used resampling method in econometric practice.

The jackknife is the distribution obtained from the \(n\) leave-one-out estimators (see Section 3.20). The jackknife is most commonly used for variance estimation.

The bootstrap is the distribution obtained by estimation on samples created by i.i.d. sampling with replacement from the dataset. (There are other variants of bootstrap sampling, including parametric sampling and residual sampling.) The bootstrap is commonly used for variance estimation, confidence interval construction, and hypothesis testing.

There is a third category of resampling methods known as sub-sampling which we will not cover in this textbook. Sub-sampling is the distribution obtained by estimation on sub-samples (sampling without replacement) of the dataset. Sub-sampling can be used for most of same purposes as the bootstrap. See the excellent monograph by Politis, Romano and Wolf (1999).

10.2 Example

To motivate our discussion we focus on the application presented in Section 3.7, which is a bivariate regression applied to the CPS subsample of married Black female wage earners with 12 years potential work experience and displayed in Table 3.1. The regression equation is

\[ \log (\text { wage })=\beta_{1} \text { education }+\beta_{2}+e . \]

The estimates as reported in (4.44) are

\[ \begin{aligned} & \log (\text { wage })=0.155 \text { education }+0.698+\widehat{e} \\ & \text { (0.031) } \quad(0.493) \\ & \widehat{\sigma}^{2}=0.144 \\ & \text { (0.043) } \\ & n=20 \text {. } \end{aligned} \]

We focus on four estimates constructed from this regression. The first two are the coefficient estimates \(\widehat{\beta}_{1}\) and \(\widehat{\beta}_{2}\). The third is the variance estimate \(\widehat{\sigma}^{2}\). The fourth is an estimate of the expected level of wages for an individual with 16 years of education (a college graduate), which turns out to be a nonlinear function of the parameters. Under the simplifying assumption that the error \(e\) is independent of the level of education and normally distributed we find that the expected level of wages is

\[ \begin{aligned} \mu &=\mathbb{E}[\text { wage } \mid \text { education }=16] \\ &=\mathbb{E}\left[\exp \left(16 \beta_{1}+\beta_{2}+e\right)\right] \\ &=\exp \left(16 \beta_{1}+\beta_{2}\right) \mathbb{E}[\exp (e)] \\ &=\exp \left(16 \beta_{1}+\beta_{2}+\sigma^{2} / 2\right) . \end{aligned} \]

The final equality is \(\mathbb{E}[\exp (e)]=\exp \left(\sigma^{2} / 2\right)\) which can be obtained from the normal moment generating function. The parameter \(\mu\) is a nonlinear function of the coefficients. The natural estimator of \(\mu\) replaces the unknowns by the point estimators. Thus

\[ \widehat{\mu}=\exp \left(16 \widehat{\beta}_{1}+\widehat{\beta}_{2}+\widehat{\sigma}^{2} / 2\right)=25.80 \]

The standard error for \(\widehat{\mu}\) can be found by extending Exercise \(7.8\) to find the joint asymptotic distribution of \(\widehat{\sigma}^{2}\) and the slope estimates, and then applying the delta method.

We are interested in calculating standard errors and confidence intervals for the four estimates described above.

10.3 Jackknife Estimation of Variance

The jackknife estimates moments of estimators using the distribution of the leave-one-out estimators. The jackknife estimators of bias and variance were introduced by Quenouille (1949) and Tukey (1958), respectively. The idea was expanded further in the monographs of Efron (1982) and Shao and Tu (1995).

Let \(\widehat{\theta}\) be any estimator of a vector-valued parameter \(\theta\) which is a function of a random sample of size \(n\). Let \(\boldsymbol{V}_{\widehat{\theta}}=\operatorname{var}[\widehat{\theta}]\) be the variance of \(\widehat{\theta}\). Define the leave-one-out estimators \(\widehat{\theta}_{(-i)}\) which are computed using the formula for \(\widehat{\theta}\) except that observation \(i\) is deleted. Tukey’s jackknife estimator for \(\boldsymbol{V}_{\widehat{\theta}}\) is defined as a scale of the sample variance of the leave-one-out estimators:

\[ \widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {jack }}=\frac{n-1}{n} \sum_{i=1}^{n}\left(\widehat{\theta}_{(-i)}-\bar{\theta}\right)\left(\widehat{\theta}_{(-i)}-\bar{\theta}\right)^{\prime} \]

where \(\bar{\theta}\) is the sample mean of the leave-one-out estimators \(\bar{\theta}=n^{-1} \sum_{i=1}^{n} \widehat{\theta}_{(-i)}\). For scalar estimators \(\widehat{\theta}\) the jackknife standard error is the square root of (10.1): \(s_{\widehat{\theta}}^{\text {jack }}=\sqrt{\widehat{V}_{\widehat{\theta}}^{\text {jack }}}\).

A convenient feature of the jackknife estimator \(\widehat{V}_{\widehat{\theta}}^{\text {jack }}\) is that the formula (10.1) is quite general and does not require any technical (exact or asymptotic) calculations. A downside is that can require \(n\) separate estimations, which in some cases can be computationally costly.

In most cases \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {jack }}\) will be similar to a robust asymptotic covariance matrix estimator. The main attractions of the jackknife estimator are that it can be used when an explicit asymptotic variance formula is not available and that it can be used as a check on the reliability of an asymptotic formula.

The formula (10.1) is not immediately intuitive so may benefit from some motivation. We start by examining the sample mean \(\bar{Y}=\frac{1}{n} \sum_{i=1}^{n} Y_{i}\) for \(Y \in \mathbb{R}^{m}\). The leave-one-out estimator is

\[ \bar{Y}_{(-i)}=\frac{1}{n-1} \sum_{j \neq i} Y_{j}=\frac{n}{n-1} \bar{Y}-\frac{1}{n-1} Y_{i} . \]

The sample mean of the leave-one-out estimators is

\[ \frac{1}{n} \sum_{i=1}^{n} \bar{Y}_{(-i)}=\frac{n}{n-1} \bar{Y}-\frac{1}{n-1} \bar{Y}=\bar{Y} \]

The difference is

\[ \bar{Y}_{(-i)}-\bar{Y}=\frac{1}{n-1}\left(\bar{Y}-Y_{i}\right) . \]

The jackknife estimate of variance (10.1) is then

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\bar{Y}}^{\text {jack }} &=\frac{n-1}{n} \sum_{i=1}^{n}\left(\frac{1}{n-1}\right)^{2}\left(\bar{Y}-Y_{i}\right)\left(\bar{Y}-Y_{i}\right)^{\prime} \\ &=\frac{1}{n}\left(\frac{1}{n-1}\right) \sum_{i=1}^{n}\left(\bar{Y}-Y_{i}\right)\left(\bar{Y}-Y_{i}\right)^{\prime} \end{aligned} \]

This is identical to the conventional estimator for the variance of \(\bar{Y}\). Indeed, Tukey proposed the \((n-1) / n\) scaling in (10.1) so that \(\widehat{V}_{\bar{Y}}^{\text {jack }}\) precisely equals the conventional estimator.

We next examine the case of least squares regression coefficient estimator. Recall from (3.43) that the leave-one-out OLS estimator equals

\[ \widehat{\beta}_{(-i)}=\widehat{\beta}-\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} X_{i} \widetilde{e}_{i} \]

where \(\widetilde{e}_{i}=\left(1-h_{i i}\right)^{-1} \widehat{e}_{i}\) and \(h_{i i}=X_{i}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} X_{i}\). The sample mean of the leave-one-out estimators is \(\bar{\beta}=\widehat{\beta}-\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \widetilde{\mu}\) where \(\widetilde{\mu}=n^{-1} \sum_{i=1}^{n} X_{i} \widetilde{e}_{i}\). Thus \(\widehat{\beta}_{(-i)}-\bar{\beta}=-\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1}\left(X_{i} \widetilde{e}_{i}-\widetilde{\mu}\right)\). The jackknife estimate of variance for \(\widehat{\beta}\) is

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\widehat{\beta}}^{\text {jack }} &=\frac{n-1}{n} \sum_{i=1}^{n}\left(\widehat{\beta}_{(-i)}-\bar{\beta}\right)\left(\widehat{\beta}_{(-i)}-\bar{\beta}\right)^{\prime} \\ &=\frac{n-1}{n}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1}\left(\sum_{i=1}^{n} X_{i} X_{i}^{\prime} \tilde{e}_{i}^{2}-n \widetilde{\mu} \widetilde{\mu}^{\prime}\right)\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \\ &=\frac{n-1}{n} \widehat{\boldsymbol{V}}_{\widehat{\beta}}^{\mathrm{HC} 3}-(n-1)\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \widetilde{\mu} \widetilde{\mu}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \end{aligned} \]

where \(\widehat{\boldsymbol{V}}_{\widehat{\beta}}^{\mathrm{HC}}\) is the HC3 covariance estimator (4.39) based on prediction errors. The second term in (10.5) is typically quite small since \(\widetilde{\mu}\) is typically small in magnitude. Thus \(\widehat{\boldsymbol{V}}_{\widehat{\beta}}^{\text {jack }} \simeq \widehat{\boldsymbol{V}}_{\widehat{\beta}}^{\mathrm{HC}}\). Indeed the HC3 estimator was originally motivated as a simplification of the jackknife estimator. This shows that for regression coefficients the jackknife estimator of variance is similar to a conventional robust estimator. This is accomplished without the user “knowing” the form of the asymptotic covariance matrix. This is further confirmation that the jackknife is making a reasonable calculation.

Third, we examine the jackknife estimator for a function \(\widehat{\theta}=r(\widehat{\beta})\) of a least squares estimator. The leave-one-out estimator of \(\theta\) is

\[ \begin{aligned} \widehat{\theta}_{(-i)} &=r\left(\widehat{\beta}_{(-i)}\right) \\ &=r\left(\widehat{\beta}-\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} X_{i} \widetilde{e}_{i}\right) \\ & \simeq \widehat{\theta}-\widehat{\boldsymbol{R}}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} X_{i} \widetilde{e}_{i} \end{aligned} \]

The second equality is (10.4). The final approximation is obtained by a mean-value expansion, using \(r(\widehat{\beta})=\widehat{\theta}\) and setting \(\widehat{\boldsymbol{R}}=(\partial / \partial \beta) r(\widehat{\beta})^{\prime}\). This approximation holds in large samples because \(\widehat{\beta}_{(-i)}\) are uniformly consistent for \(\beta\). The jackknife variance estimator for \(\widehat{\theta}\) thus equals

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\mathrm{jack}} &=\frac{n-1}{n} \sum_{i=1}^{n}\left(\widehat{\theta}_{(-i)}-\bar{\theta}\right)\left(\widehat{\theta}_{(-i)}-\bar{\theta}\right)^{\prime} \\ & \simeq \frac{n-1}{n} \widehat{\boldsymbol{R}}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1}\left(\sum_{i=1}^{n} X_{i} X_{i}^{\prime} \widehat{e}_{i}^{2}-n \widetilde{\mu} \widetilde{\mu}^{\prime}\right)\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \widehat{\boldsymbol{R}} \\ &=\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\beta}}^{\mathrm{jack}} \widehat{\boldsymbol{R}} \\ & \simeq \widehat{\boldsymbol{R}}^{\prime} \widetilde{\boldsymbol{V}}_{\widehat{\beta}} \widehat{\boldsymbol{R}} . \end{aligned} \]

The final line equals a delta-method estimator for the variance of \(\widehat{\theta}\) constructed with the covariance estimator (4.39). This shows that the jackknife estimator of variance for \(\widehat{\theta}\) is approximately an asymptotic delta-method estimator. While this is an asymptotic approximation, it again shows that the jackknife produces an estimator which is asymptotically similar to one produced by asymptotic methods. This is despite the fact that the jackknife estimator is calculated without reference to asymptotic theory and does not require calculation of the derivatives of \(r(\beta)\).

This argument extends directly to any “smooth function” estimator. Most of the estimators discussed so far in this textbook take the form \(\widehat{\theta}=g(\bar{W})\) where \(\bar{W}=n^{-1} \sum_{i=1}^{n} W_{i}\) and \(W_{i}\) is some vector-valued function of the data. For any such estimator \(\widehat{\theta}\) the leave-one-out estimator equals \(\widehat{\theta}_{(-i)}=g\left(\bar{W}_{(-i)}\right)\) and its jackknife estimator of variance is (10.1). Using (10.2) and a mean-value expansion we have the largesample approximation

\[ \begin{aligned} \widehat{\theta}_{(-i)} &=g\left(\bar{W}_{(-i)}\right) \\ &=g\left(\frac{n}{n-1} \bar{W}-\frac{1}{n-1} W_{i}\right) \\ & \simeq g(\bar{W})-\frac{1}{n-1} \boldsymbol{G}(\bar{W})^{\prime} W_{i} \end{aligned} \]

where \(\boldsymbol{G}(x)=(\partial / \partial x) g(x)^{\prime}\). Thus

\[ \widehat{\theta}_{(-i)}-\bar{\theta} \simeq-\frac{1}{n-1} \boldsymbol{G}(\bar{W})^{\prime}\left(W_{i}-\bar{W}\right) \]

and the jackknife estimator of the variance of \(\widehat{\theta}\) approximately equals

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\mathrm{jack}} &=\frac{n-1}{n} \sum_{i=1}^{n}\left(\widehat{\theta}_{(-i)}-\widehat{\theta}_{(\cdot)}\right)\left(\widehat{\theta}_{(-i)}-\widehat{\theta}_{(\cdot)}\right)^{\prime} \\ & \simeq \frac{n-1}{n} \boldsymbol{G}(\bar{W})^{\prime}\left(\frac{1}{(n-1)^{2}} \sum_{i=1}^{n}\left(W_{i}-\bar{W}\right)\left(W_{i}-\bar{W}\right)^{\prime}\right) \boldsymbol{G}(\bar{W}) \\ &=\boldsymbol{G}(\bar{W})^{\prime} \widehat{\boldsymbol{V}}_{\bar{W}}^{\mathrm{jack}} \boldsymbol{G}(\bar{W}) \end{aligned} \]

where \(\widehat{V}_{\bar{W}}^{\text {jack }}\) as defined in (10.3) is the conventional (and jackknife) estimator for the variance of \(\bar{W}\). Thus \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {jack }}\) is approximately the delta-method estimator. Once again, we see that the jackknife estimator automatically calculates what is effectively the delta-method variance estimator, but without requiring the user to explicitly calculate the derivative of \(g(x)\).

10.4 Example

We illustrate by reporting the asymptotic and jackknife standard errors for the four parameter estimates given earlier. In Table \(10.1\) we report the actual values of the leave-one-out estimates for each of the twenty observations in the sample. The jackknife standard errors are calculated as the scaled square roots of the sample variances of these leave-one-out estimates and are reported in the second-to-last row. For comparison the asymptotic standard errors are reported in the final row.

For all estimates the jackknife and asymptotic standard errors are quite similar. This reinforces the credibility of both standard error estimates. The largest differences arise for \(\widehat{\beta}_{2}\) and \(\widehat{\mu}\), whose jackknife standard errors are about \(5 %\) larger than the asymptotic standard errors.

The take-away from our presentation is that the jackknife is a simple and flexible method for variance and standard error calculation. Circumventing technical asymptotic and exact calculations, the jackknife produces estimates which in many cases are similar to asymptotic delta-method counterparts. The jackknife is especially appealing in cases where asymptotic standard errors are not available or are difficult to calculate. They can also be used as a double-check on the reasonableness of asymptotic delta-method calculations.

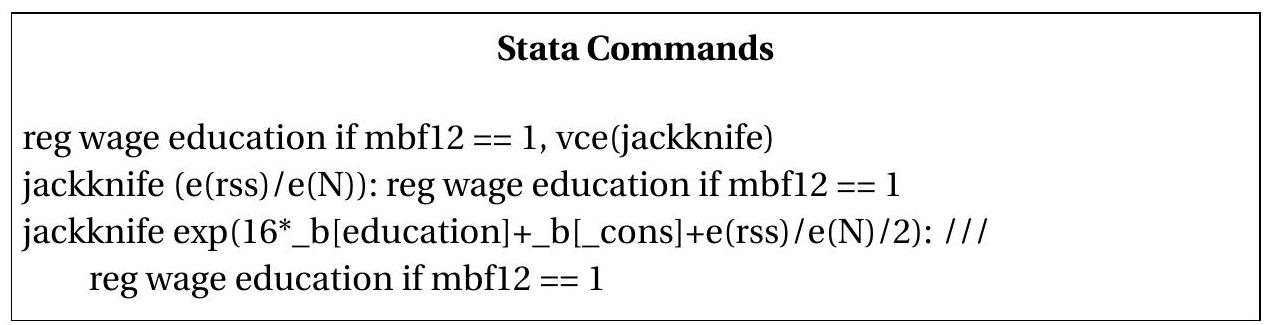

In Stata, jackknife standard errors for coefficient estimates in many models are obtained by the vce(jackknife) option. For nonlinear functions of the coefficients or other estimators the jackkn ife command can be combined with any other command to obtain jackknife standard errors.

To illustrate, below we list the Stata commands which calculate the jackknife standard errors listed above. The first line is least squares estimation with standard errors calculated by the jackknife. The second line calculates the error variance estimate \(\widehat{\sigma}^{2}\) with a jackknife standard error. The third line does the same for the estimate \(\widehat{\mu}\).

Table 10.1: Leave-one-out Estimators and Jackknife Standard Errors

| Observation | \(\widehat{\beta}_{1(-i)}\) | \(\widehat{\beta}_{2(-i)}\) | \(\widehat{\sigma}_{(-i)}^{2}\) | \(\widehat{\mu}_{(-i)}\) |

|---|---|---|---|---|

| 1 | \(0.150\) | \(0.764\) | \(0.150\) | \(25.63\) |

| 2 | \(0.148\) | \(0.798\) | \(0.149\) | \(25.48\) |

| 3 | \(0.153\) | \(0.739\) | \(0.151\) | \(25.97\) |

| 4 | \(0.156\) | \(0.695\) | \(0.144\) | \(26.31\) |

| 5 | \(0.154\) | \(0.701\) | \(0.146\) | \(25.38\) |

| 6 | \(0.158\) | \(0.655\) | \(0.151\) | \(26.05\) |

| 7 | \(0.152\) | \(0.705\) | \(0.114\) | \(24.32\) |

| 8 | \(0.146\) | \(0.822\) | \(0.147\) | \(25.37\) |

| 9 | \(0.162\) | \(0.588\) | \(0.151\) | \(25.75\) |

| 10 | \(0.157\) | \(0.693\) | \(0.139\) | \(26.40\) |

| 11 | \(0.168\) | \(0.510\) | \(0.141\) | \(26.40\) |

| 12 | \(0.158\) | \(0.691\) | \(0.118\) | \(26.48\) |

| 13 | \(0.139\) | \(0.974\) | \(0.141\) | \(26.56\) |

| 14 | \(0.169\) | \(0.451\) | \(0.131\) | \(26.26\) |

| 15 | \(0.146\) | \(0.852\) | \(0.150\) | \(24.93\) |

| 16 | \(0.156\) | \(0.696\) | \(0.148\) | \(26.06\) |

| 17 | \(0.165\) | \(0.513\) | \(0.140\) | \(25.22\) |

| 18 | \(0.155\) | \(0.698\) | \(0.151\) | \(25.90\) |

| 19 | \(0.152\) | \(0.742\) | \(0.151\) | \(25.73\) |

| 20 | \(0.155\) | \(0.697\) | \(0.151\) | \(25.95\) |

| \(s^{\text {jack }}\) | \(0.032\) | \(0.514\) | \(0.046\) | \(2.39\) |

| \(s^{\text {asy }}\) | \(0.031\) | \(0.493\) | \(0.043\) | \(2.29\) |

10.5 Jackknife for Clustered Observations

In Section \(4.21\) we introduced the clustered regression model, cluster-robust variance estimators, and cluster-robust standard errors. Jackknife variance estimation can also be used for clustered samples but with some natural modifications. Recall that the least squares estimator in the clustered sample context can be written as

\[ \widehat{\beta}=\left(\sum_{g=1}^{G} \boldsymbol{X}_{g}^{\prime} \boldsymbol{X}_{g}\right)^{-1}\left(\sum_{g=1}^{G} \boldsymbol{X}_{g}^{\prime} \boldsymbol{Y}_{g}\right) \]

where \(g=1, \ldots, G\) indexes the cluster. Instead of leave-one-out estimators, it is natural to use deletecluster estimators, which delete one cluster at a time. They take the form (4.58):

\[ \widehat{\beta}_{(-g)}=\widehat{\beta}-\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{X}_{g}^{\prime} \widetilde{\boldsymbol{e}}_{g} \]

where

\[ \begin{aligned} &\widetilde{\boldsymbol{e}}_{g}=\left(\boldsymbol{I}_{n_{g}}-\boldsymbol{X}_{g}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{X}_{g}^{\prime}\right)^{-1} \widehat{\boldsymbol{e}}_{g} \\ &\widehat{\boldsymbol{e}}_{g}=\boldsymbol{Y}_{g}-\boldsymbol{X}_{g} \widehat{\beta} \end{aligned} \]

The delete-cluster jackknife estimator of the variance of \(\widehat{\beta}\) is

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\widehat{\beta}}^{\mathrm{jack}} &=\frac{G-1}{G} \sum_{g=1}^{G}\left(\widehat{\beta}_{(-g)}-\bar{\beta}\right)\left(\widehat{\beta}_{(-g)}-\bar{\beta}\right)^{\prime} \\ \bar{\beta} &=\frac{1}{G} \sum_{g=1}^{G} \widehat{\beta}_{(-g)} . \end{aligned} \]

We call \(\widehat{V}_{\widehat{\beta}}^{\text {jack }}\) a cluster-robust jackknife estimator of variance.

Using the same approximations as the previous section we can show that the delete-cluster jackknife estimator is asymptotically equivalent to the cluster-robust covariance matrix estimator (4.59) calculated with the delete-cluster prediction errors. This verifies that the delete-cluster jackknife is the appropriate jackknife approach for clustered dependence.

For parameters which are functions \(\widehat{\theta}=r(\widehat{\beta})\) of the least squares estimator, the delete-cluster jackknife estimator of the variance of \(\widehat{\theta}\) is

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {jack }} &=\frac{G-1}{G} \sum_{g=1}^{G}\left(\widehat{\theta}_{(-g)}-\bar{\theta}\right)\left(\widehat{\theta}_{(-g)}-\bar{\theta}\right)^{\prime} \\ \widehat{\theta}_{(-i)} &=r\left(\widehat{\beta}_{(-g)}\right) \\ \bar{\theta} &=\frac{1}{G} \sum_{g=1}^{G} \widehat{\theta}_{(-g)} . \end{aligned} \]

Using a mean-value expansion we can show that this estimator is asymptotically equivalent to the deltamethod cluster-robust covariance matrix estimator for \(\widehat{\theta}\). This shows that the jackknife estimator is appropriate for covariance matrix estimation.

As in the context of i.i.d. samples, one advantage of the jackknife covariance matrix estimators is that they do not require the user to make a technical calculation of the asymptotic distribution. A downside is an increase in computation cost, as \(G\) separate regressions are effectively estimated.

In Stata, jackknife standard errors for coefficient estimates with clustered observations are obtained by using the options cluster (id) vce(jackkn ife) where id denotes the cluster variable.

10.6 The Bootstrap Algorithm

The bootstrap is a powerful approach to inference and is due to the pioneering work of Efron (1979). There are many textbook and monograph treatments of the bootstrap, including Efron (1982), Hall (1992), Efron and Tibshirani (1993), Shao and Tu (1995), and Davison and Hinkley (1997). Reviews for econometricians are provided by Hall (1994) and Horowitz (2001)

There are several ways to describe or define the bootstrap and there are several forms of the bootstrap. We start in this section by describing the basic nonparametric bootstrap algorithm. In subsequent sections we give more formal definitions of the bootstrap as well as theoretical justifications.

Briefly, the bootstrap distribution is obtained by estimation on independent samples created by i.i.d. sampling (sampling with replacement) from the original dataset.

To understand this it is useful to start with the concept of sampling with replacement from the dataset. To continue the empirical example used earlier in the chapter we focus on the dataset displayed in Table 3.1, which has \(n=20\) observations. Sampling from this distribution means randomly selecting one row from this table. Mathematically this is the same as randomly selecting an integer from the set \(\{1,2, \ldots, 20\}\). To illustrate, MATLAB has a random integer generator (the function randi). Using the random number seed of 13 (an arbitrary choice) we obtain the random draw 16 . This means that we draw observation number 16 from Table 3.1. Examining the table we can see that this is an individual with wage \(\$ 18.75\) and education of 16 years. We repeat by drawing another random integer on the set \(\{1,2, \ldots, 20\}\) and this time obtain 5 . This means we take observation 5 from Table 3.1, which is an individual with wage \(\$ 33.17\) and education of 16 years. We continue until we have \(n=20\) such draws. This random set of observations are \(\{16,5,17,20,20,10,13,16,13,15,1,6,2,18,8,14,6,7,1,8\}\). We call this the bootstrap sample.

Notice that the observations \(1,6,8,13,16,20\) each appear twice in the bootstrap sample, and the observations \(3,4,9,11,12,19\) do not appear at all. That is okay. In fact, it is necessary for the bootstrap to work. This is because we are drawing with replacement. (If we instead made draws without replacement then the constructed dataset would have exactly the same observations as in Table 3.1, only in different order.) We can also ask the question “What is the probability that an individual observation will appear at least once in the bootstrap sample?” The answer is

\[ \begin{aligned} \mathbb{P}[\text { Observation in Bootstrap Sample }] &=1-\left(1-\frac{1}{n}\right)^{n} \\ & \rightarrow 1-e^{-1} \simeq 0.632 . \end{aligned} \]

The limit holds as \(n \rightarrow \infty\). The approximation \(0.632\) is excellent even for small \(n\). For example, when \(n=20\) the probability (10.6) is \(0.641\). These calculations show that an individual observation is in the bootstrap sample with probability near \(2 / 3\).

Once again, the bootstrap sample is the constructed dataset with the 20 observations drawn randomly from the original sample. Notationally, we write the \(i^{\text {th }}\) bootstrap observation as \(\left(Y_{i}^{*}, X_{i}^{*}\right)\) and the bootstrap sample as \(\left\{\left(Y_{1}^{*}, X_{1}^{*}\right), \ldots,\left(Y_{n}^{*}, X_{n}^{*}\right)\right\}\). In our present example with \(Y\) denoting the log wage the bootstrap sample is

\[ \left\{\left(Y_{1}^{*}, X_{1}^{*}\right), \ldots,\left(Y_{n}^{*}, X_{n}^{*}\right)\right\}=\{(2.93,16),(3.50,16) \ldots,(3.76,18)\} \]

The bootstrap estimate \(\widehat{\beta}^{*}\) is obtained by applying the least squares estimation formula to the bootstrap sample. Thus we regress \(Y^{*}\) on \(X^{*}\). The other bootstrap estimates, in our example \(\widehat{\sigma}^{2 *}\) and \(\widehat{\mu}^{*}\), are obtained by applying their estimation formulae to the bootstrap sample as well. Writing \(\widehat{\theta}^{*}=\) \(\left(\widehat{\beta}_{1}^{*}, \widehat{\beta}_{2}^{*}, \widehat{\sigma}^{* 2}, \widehat{\mu}^{*}\right)^{\prime}\) we have the bootstrap estimate of the parameter vector \(\theta=\left(\beta_{1}, \beta_{2}, \sigma^{2}, \mu\right)^{\prime}\). In our example (the bootstrap sample described above) \(\widehat{\theta}^{*}=(0.195,0.113,0.107,26.7)^{\prime}\). This is one draw from the bootstrap distribution of the estimates.

The estimate \(\widehat{\theta}^{*}\) as described is one random draw from the distribution of estimates obtained by i.i.d. sampling from the original data. With one draw we can say relatively little. But we can repeat this exercise to obtain multiple draws from this bootstrap distribution. To distinguish between these draws we index the bootstrap samples by \(b=1, \ldots, B\), and write the bootstrap estimates as \(\widehat{\theta}_{b}^{*}\) or \(\widehat{\theta}^{*}(b)\).

To continue our illustration we draw 20 more random integers \(\{19,5,7,19,1,2,13,18,1,15,17,2\), \(14,11,10,20,1,5,15,7\}\) and construct a second bootstrap sample. On this sample we again estimate the parameters and obtain \(\widehat{\theta}^{*}(2)=(0.175,0.52,0.124,29.3)^{\prime}\). This is a second random draw from the distribution of \(\widehat{\theta}^{*}\). We repeat this \(B\) times, storing the parameter estimates \(\widehat{\theta}^{*}(b)\). We have thus created a new dataset of bootstrap draws \(\left\{\widehat{\theta}^{*}(b): b=1, \ldots, B\right\}\). By construction the draws are independent across \(b\) and identically distributed.

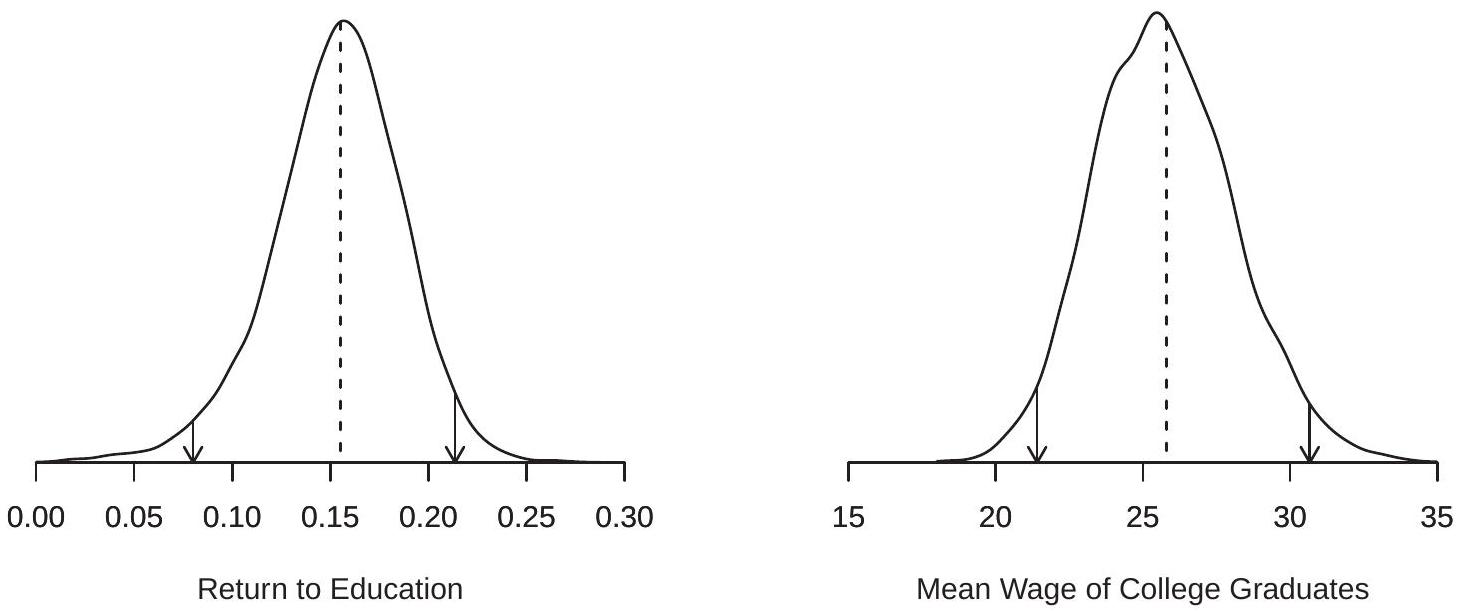

The number of bootstrap draws, \(B\), is often called the “number of bootstrap replications”. Typical choices for \(B\) are 1000,5000 , and 10,000. We discuss selecting \(B\) later, but roughly speaking, larger \(B\) results in a more precise estimate at an increased computation cost. For our application we set \(B=\) 10,000 . To illustrate, Figure \(13.1\) displays the densities of the distributions of the bootstrap estimates \(\widehat{\beta}_{1}^{*}\) and \(\widehat{\mu}^{*}\) across 10,000 draws. The dashed lines show the point estimate. You can notice that the density for \(\widehat{\beta}_{1}^{*}\) is slightly skewed to the left.\

Figure 10.1: Bootstrap Distributions of \(\widehat{\beta}_{1}^{*}\) and \(\widehat{\mu}^{*}\)

10.7 Bootstrap Variance and Standard Errors

Given the bootstrap draws we can estimate features of the bootstrap distribution. The bootstrap estimator of variance of an estimator \(\widehat{\theta}\) is the sample variance across the bootstrap draws \(\widehat{\theta}^{*}(b)\). It equals

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {boot }} &=\frac{1}{B-1} \sum_{b=1}^{B}\left(\widehat{\theta}^{*}(b)-\bar{\theta}^{*}\right)\left(\widehat{\theta}^{*}(b)-\bar{\theta}^{*}\right)^{\prime} \\ \bar{\theta}^{*} &=\frac{1}{B} \sum_{b=1}^{B} \widehat{\theta}^{*}(b) \end{aligned} \]

For a scalar estimator \(\hat{\theta}\) the bootstrap standard error is the square root of the bootstrap estimator of variance:

\[ s_{\widehat{\widehat{\theta}}}^{\text {boot }}=\sqrt{\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {boot }}} . \]

This is a very simple statistic to calculate and is the most common use of the bootstrap in applied econometric practice. A caveat (discussed in more detail in Section 10.15) is that in many cases it is better to use a trimmed estimator.

Standard errors are conventionally reported to convey the precision of the estimator. They are also commonly used to construct confidence intervals. Bootstrap standard errors can be used for this purpose. The normal-approximation bootstrap confidence interval is

\[ C^{\mathrm{nb}}=\left[\widehat{\theta}-z_{1-\alpha / 2} s_{\widehat{\theta}}^{\text {boot }}, \quad \widehat{\theta}+z_{1-\alpha / 2} s_{\widehat{\theta}}^{\text {boot }}\right] \]

where \(z_{1-\alpha / 2}\) is the \(1-\alpha / 2\) quantile of the \(\mathrm{N}(0,1)\) distribution. This interval \(C^{\mathrm{nb}}\) is identical in format to an asymptotic confidence interval, but with the bootstrap standard error replacing the asymptotic standard error. \(C^{\mathrm{nb}}\) is the default confidence interval reported by Stata when the bootstrap has been used to calculate standard errors. However, the normal-approximation interval is in general a poor choice for confidence interval construction as it relies on the normal approximation to the t-ratio which can be inaccurate in finite samples. There are other methods - such as the bias-corrected percentile method to be discussed in Section \(10.17\) - which are just as simple to compute but have better performance. In general, bootstrap standard errors should be used as estimates of precision rather than as tools to construct confidence intervals.

Since \(B\) is finite, all bootstrap statistics, such as \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {boot }}\), are estimates and hence random. Their values will vary across different choices for \(B\) and simulation runs (depending on how the simulation seed is set). Thus you should not expect to obtain the exact same bootstrap standard errors as other researchers when replicating their results. They should be similar (up to simulation sampling error) but not precisely the same.

In Table \(10.2\) we report the four parameter estimates introduced in Section \(10.2\) along with asymptotic, jackknife and bootstrap standard errors. We also report four bootstrap confidence intervals which will be introduced in subsequent sections.

For these four estimators we can see that the bootstrap standard errors are quite similar to the asymptotic and jackknife standard errors. The most noticable difference arises for \(\widehat{\beta}_{2}\), where the bootstrap standard error is about \(10 %\) larger than the asymptotic standard error.

Table 10.2: Comparison of Methods

| \(\widehat{\beta}_{1}\) | \(\widehat{\beta}_{2}\) | \(\widehat{\sigma}^{2}\) | \(\widehat{\mu}\) | |

|---|---|---|---|---|

| Estimate | \(0.155\) | \(0.698\) | \(0.144\) | \(25.80\) |

| Asymptotic s.e. | \((0.031)\) | \((0.493)\) | \((0.043)\) | \((2.29)\) |

| Jackknife s.e. | \((0.032)\) | \((0.514)\) | \((0.046)\) | \((2.39)\) |

| Bootstrap s.e. | \((0.034)\) | \((0.548)\) | \((0.041)\) | \((2.38)\) |

| \(95 %\) Percentile Interval | \([0.08,0.21]\) | \([-0.27,1.91]\) | \([0.06,0.22]\) | \([21.4,30.7]\) |

| \(95 %\) BC Percentile Interval | \([0.08,0.21]\) | \([-0.25,1.93]\) | \([0.09,0.28]\) | \([22.0,31.5]\) |

| \(95 %\) BC |

In Stata, bootstrap standard errors for coefficient estimates in many models are obtained by the vce(bootstrap, reps(#)) option, where # is the number of bootstrap replications. For nonlinear functions of the coefficients or other estimators the bootstrap command can be combined with any other command to obtain bootstrap standard errors. Synonyms for bootstrap are bstrap and bs.

To illustrate, below we list the Stata commands which will calculate \({ }^{1}\) the bootstrap standard errors listed above.

\({ }^{1}\) They will not precisely replicate the standard errors since those in Table \(10.2\) were produced in Matlab which uses a different random number sequence.

Stata Commands reg wage education if \(\operatorname{mbf} 12==1\), vce(bootstrap, reps \((10000))\)

bs (e(rss)/e(N)), reps(10000): reg wage education if \(\mathrm{mbf} 12==1\)

bs ( \(\exp \left(16^{*}\right.\) bb[education]+_b[_cons] \(\left.\left.+\mathrm{e}(\mathrm{rss}) / \mathrm{e}(\mathrm{N}) / 2\right)\right)\), reps(10000): ///

reg wage education if \(\operatorname{mbf} 12==1\)

10.8 Percentile Interval

The second most common use of bootstrap methods is for confidence intervals. There are multiple bootstrap methods to form confidence intervals. A popular and simple method is called the percentile interval. It is based on the quantiles of the bootstrap distribution.

In Section \(10.6\) we described the bootstrap algorithm which creates an i.i.d. sample of bootstrap estimates \(\left\{\widehat{\theta}_{1}^{*}, \widehat{\theta}_{2}^{*}, \ldots, \widehat{\theta}_{B}^{*}\right\}\) corresponding to an estimator \(\widehat{\theta}\) of a parameter \(\theta\). We focus on the case of a scalar parameter \(\theta\).

For any \(0<\alpha<1\) we can calculate the empirical quantile \(q_{\alpha}^{*}\) of these bootstrap estimates. This is the number such that \(n \alpha\) bootstrap estimates are smaller than \(q_{\alpha}^{*}\), and is typically calculated by taking the \(n \alpha^{t h}\) order statistic of the \(\widehat{\theta}_{b}^{*}\). See Section \(11.13\) of Probability and Statistics for Economists for a precise discussion of empirical quantiles and common quantile estimators.

The percentile bootstrap \(100(1-\alpha) %\) confidence interval is

\[ C^{\mathrm{pc}}=\left[q_{\alpha / 2}^{*}, q_{1-\alpha / 2}^{*}\right] . \]

For example, if \(B=1000, \alpha=0.05\), and the empirical quantile estimator is used, then \(C^{\mathrm{pc}}=\left[\widehat{\theta}_{(25)}^{*}, \widehat{\theta}_{(975)}^{*}\right]\).

To illustrate, the \(0.025\) and \(0.975\) quantiles of the bootstrap distributions of \(\widehat{\beta}_{1}^{*}\) and \(\widehat{\mu}^{*}\) are indicated in Figure \(13.1\) by the arrows. The intervals between the arrows are the \(95 %\) percentile intervals.

The percentile interval has the convenience that it does not require calculation of a standard error. This is particularly convenient in contexts where asymptotic standard error calculation is complicated, burdensome, or unknown. \(C^{\mathrm{pc}}\) is a simple by-product of the bootstrap algorithm and does not require meaningful computational cost above that required to calculate the bootstrap standard error.

The percentile interval has the useful property that it is transformation-respecting. Take a monotone parameter transformation \(m(\theta)\). The percentile interval for \(m(\theta)\) is simply the percentile interval for \(\theta\) mapped by \(m(\theta)\). That is, if \(\left[q_{\alpha / 2}^{*}, q_{1-\alpha / 2}^{*}\right]\) is the percentile interval for \(\theta\), then \(\left[m\left(q_{\alpha / 2}^{*}\right), m\left(q_{1-\alpha / 2}^{*}\right)\right]\) is the percentile interval for \(m(\theta)\). This property follows directly from the equivariance property of sample quantiles. Many confidence-interval methods, such as the delta-method asymptotic interval and the normal-approximation interval \(C^{\mathrm{nb}}\), do not share this property.

To illustrate the usefulness of the transformation-respecting property consider the variance \(\sigma^{2}\). In some cases it is useful to report the variance \(\sigma^{2}\) and in other cases it is useful to report the standard deviation \(\sigma\). Thus we may be interested in confidence intervals for \(\sigma^{2}\) or \(\sigma\). To illustrate, the asymptotic \(95 %\) normal confidence interval for \(\sigma^{2}\) which we calculate from Table \(13.2\) is \([0.060,0.228]\). Taking square roots we obtain an interval for \(\sigma\) of [0.244,0.477]. Alternatively, the delta method standard error for \(\widehat{\sigma}=0.379\) is \(0.057\), leading to an asymptotic \(95 %\) confidence interval for \(\sigma\) of \([0.265,0.493]\) which is different. This shows that the delta method is not transformation-respecting. In contrast, the \(95 %\) percentile interval for \(\sigma^{2}\) is \([0.062,0.220]\) and that for \(\sigma\) is \([0.249,0.469]\) which is identical to the square roots of the interval for \(\sigma^{2}\).

The bootstrap percentile intervals for the four estimators are reported in Table 13.2. In Stata, percentile confidence intervals can be obtained by using the command estat bootstrap, percentile or the command estat bootstrap, all after an estimation command which calculates standard errors via the bootstrap.

10.9 The Bootstrap Distribution

For applications it is often sufficient if one understands the bootstrap as an algorithm. However, for theory it is more useful to view the bootstrap as a specific estimator of the sampling distribution. For this it is useful to introduce some additional notation.

The key is that the distribution of any estimator or statistic is determined by the distribution of the data. While the latter is unknown it can be estimated by the empirical distribution of the data. This is what the bootstrap does.

To fix notation, let \(F\) denote the distribution of an individual observation \(W\). (In regression, \(W\) is the \(\operatorname{pair}(Y, X)\).) Let \(G_{n}(u, F)\) denote the distribution of an estimator \(\widehat{\theta}\). That is,

\[ G_{n}(u, F)=\mathbb{P}[\widehat{\theta} \leq u \mid F] . \]

We write the distribution \(G_{n}\) as a function of \(n\) and \(F\) since the latter (generally) affect the distribution of \(\widehat{\theta}\). We are interested in the distribution \(G_{n}\). For example, we want to know its variance to calculate a standard error or its quantiles to calculate a percentile interval.

In principle, if we knew the distribution \(F\) we should be able to determine the distribution \(G_{n}\). In practice there are two barriers to implementation. The first barrier is that the calculation of \(G_{n}(u, F)\) is generally infeasible except in certain special cases such as the normal regression model. The second barrier is that in general we do not know \(F\).

The bootstrap simultaneously circumvents these two barriers by two clever ideas. First, the bootstrap proposes estimation of \(F\) by the empirical distribution function (EDF) \(F_{n}\), which is the simplest nonparametric estimator of the joint distribution of the observations. The EDF is \(F_{n}(w)=n^{-1} \sum_{i=1}^{n} \mathbb{1}\left\{W_{i} \leq w\right\}\). (See Section \(11.2\) of Probability and Statistics for Economists for details and properties.) Replacing \(F\) with \(F_{n}\) we obtain the idealized bootstrap estimator of the distribution of \(\widehat{\theta}\)

\[ G_{n}^{*}(u)=G_{n}\left(u, F_{n}\right) . \]

The bootstrap’s second clever idea is to estimate \(G_{n}^{*}\) by simulation. This is the bootstrap algorithm described in the previous sections. The essential idea is that simulation from \(F_{n}\) is sampling with replacement from the original data, which is computationally simple. Applying the estimation formula for \(\hat{\theta}\) we obtain i.i.d. draws from the distribution \(G_{n}^{*}(u)\). By making a large number \(B\) of such draws we can estimate any feature of \(G_{n}^{*}\) of interest. The bootstrap combines these two ideas: (1) estimate \(G_{n}(u, F)\) by \(G_{n}\left(u, F_{n}\right)\); (2) estimate \(G_{n}\left(u, F_{n}\right)\) by simulation. These ideas are intertwined. Only by considering these steps together do we obtain a feasible method.

The way to think about the connection between \(G_{n}\) and \(G_{n}^{*}\) is as follows. \(G_{n}\) is the distribution of the estimator \(\widehat{\theta}\) obtained when the observations are sampled i.i.d. from the population distribution \(F\). \(G_{n}^{*}\) is the distribution of the same statistic, denoted \(\widehat{\theta}^{*}\), obtained when the observations are sampled i.i.d. from the empirical distribution \(F_{n}\). It is useful to conceptualize the “universe” which separately generates the dataset and the bootstrap sample. The “sampling universe” is the population distribution \(F\). In this universe the true parameter is \(\theta\). The “bootstrap universe” is the empircal distribution \(F_{n}\). When drawing from the bootstrap universe we are treating \(F_{n}\) as if it is the true distribution. Thus anything which is true about \(F_{n}\) should be treated as true in the bootstrap universe. In the bootstrap universe the “true” value of the parameter \(\theta\) is the value determined by the EDF \(F_{n}\). In most cases this is the estimate \(\widehat{\theta}\). It is the true value of the coefficient when the true distribution is \(F_{n}\). We now carefully explain the connection with the bootstrap algorithm as previously described.

First, observe that sampling with replacement from the sample \(\left\{Y_{1}, \ldots, Y_{n}\right\}\) is identical to sampling from the EDF \(F_{n}\). This is because the EDF is the probability distribution which puts probability mass \(1 / n\) on each observation. Thus sampling from \(F_{n}\) means sampling an observation with probability \(1 / n\), which is sampling with replacement.

Second, observe that the bootstrap estimator \(\widehat{\theta}^{*}\) described here is identical to the bootstrap algorithm described in Section 10.6. That is, \(\widehat{\theta}^{*}\) is the random vector generated by applying the estimator formula \(\widehat{\theta}\) to samples obtained by random sampling from \(F_{n}\).

Third, observe that the distribution of these bootstrap estimators is the bootstrap distribution (10.9). This is a precise equality. That is, the bootstrap algorithm generates i.i.d. samples from \(F_{n}\), and when the estimators are applied we obtain random variables \(\widehat{\theta}^{*}\) with the distribution \(G_{n}^{*}\).

Fourth, observe that the bootstrap statistics described earlier - bootstrap variance, standard error, and quantiles - are estimators of the corresponding features of the bootstrap distribution \(G_{n}^{*}\).

This discussion is meant to carefully describe why the notation \(G_{n}^{*}(u)\) is useful to help understand the properties of the bootstrap algorithm. Since \(F_{n}\) is the natural nonparametric estimator of the unknown distribution \(F, G_{n}^{*}(u)=G_{n}\left(u, F_{n}\right)\) is the natural plug-in estimator of the unknown \(G_{n}(u, F)\). Furthermore, because \(F_{n}\) is uniformly consistent for \(F\) by the Glivenko-Cantelli Lemma (Theorem \(18.8\) in Probability and Statistics for Economists) we also can expect \(G_{n}^{*}(u)\) to be consistent for \(G_{n}(u)\). Making this precise is a bit challenging since \(F_{n}\) and \(G_{n}\) are functions. In the next several sections we develop an asymptotic distribution theory for the bootstrap distribution based on extending asymptotic theory to the case of conditional distributions.

10.10 The Distribution of the Bootstrap Observations

Let \(Y^{*}\) be a random draw from the sample \(\left\{Y_{1}, \ldots, Y_{n}\right\}\). What is the distribution of \(Y^{*}\) ?

Since we are fixing the observations, the correct question is: What is the conditional distribution of \(Y^{*}\), conditional on the observed data? The empirical distribution function \(F_{n}\) summarizes the information in the sample, so equivalently we are talking about the distribution conditional on \(F_{n}\). Consequently we will write the bootstrap probability function and expectation as

\[ \begin{aligned} \mathbb{P}^{*}\left[Y^{*} \leq x\right] &=\mathbb{P}\left[Y^{*} \leq x \mid F_{n}\right] \\ \mathbb{E}^{*}\left[Y^{*}\right] &=\mathbb{E}\left[Y^{*} \mid F_{n}\right] . \end{aligned} \]

Notationally, the starred distribution and expectation are conditional given the data.

The (conditional) distribution of \(Y^{*}\) is the empirical distribution function \(F_{n}\), which is a discrete distribution with mass points \(1 / n\) on each observation \(Y_{i}\). Thus even if the original data come from a continuous distribution, the bootstrap data distribution is discrete.

The (conditional) mean and variance of \(Y^{*}\) are calculated from the EDF, and equal the sample mean and variance of the data. The mean is

\[ \mathbb{E}^{*}\left[Y^{*}\right]=\sum_{i=1}^{n} Y_{i} \mathbb{P}^{*}\left[Y^{*}=Y_{i}\right]=\sum_{i=1}^{n} Y_{i} \frac{1}{n}=\bar{Y} \]

and the variance is

\[ \begin{aligned} \operatorname{var}^{*}\left[Y^{*}\right] &=\mathbb{E}^{*}\left[Y^{*} Y^{* \prime}\right]-\left(\mathbb{E}^{*}\left[Y^{*}\right]\right)\left(\mathbb{E}^{*}\left[Y^{*}\right]\right)^{\prime} \\ &=\sum_{i=1}^{n} Y_{i} Y_{i}^{\prime} \mathbb{P}^{*}\left[Y^{*}=Y_{i}\right]-\bar{Y} \bar{Y}^{\prime} \\ &=\sum_{i=1}^{n} Y_{i} Y_{i}^{\prime} \frac{1}{n}-\bar{Y} \bar{Y}^{\prime} \\ &=\widehat{\Sigma} \end{aligned} \]

To summarize, the conditional distribution of \(Y^{*}\), given \(F_{n}\), is the discrete distribution on \(\left\{Y_{1}, \ldots, Y_{n}\right\}\) with mean \(\bar{Y}\) and covariance matrix \(\widehat{\Sigma}\).

We can extend this analysis to any integer moment \(r\). Assume \(Y\) is scalar. The \(r^{t h}\) moment of \(Y^{*}\) is

\[ \mu_{r}^{* \prime}=\mathbb{E}^{*}\left[Y^{* r}\right]=\sum_{i=1}^{n} Y_{i}^{r} \mathbb{P}^{*}\left[Y^{*}=Y_{i}\right]=\frac{1}{n} \sum_{i=1}^{n} Y_{i}^{r}=\widehat{\mu}_{r}^{\prime}, \]

the \(r^{t h}\) sample moment. The \(r^{t h}\) central moment of \(Y^{*}\) is

\[ \mu_{r}^{*}=\mathbb{E}^{*}\left[\left(Y^{*}-\bar{Y}\right)^{r}\right]=\frac{1}{n} \sum_{i=1}^{n}\left(Y_{i}-\bar{Y}\right)^{r}=\widehat{\mu}_{r}, \]

the \(r^{t h}\) central sample moment. Similarly, the \(r^{t h}\) cumulant of \(Y^{*}\) is \(\kappa_{r}^{*}=\widehat{\kappa}_{r}\), the \(r^{t h}\) sample cumulant.

10.11 The Distribution of the Bootstrap Sample Mean

The bootstrap sample mean is

\[ \bar{Y}^{*}=\frac{1}{n} \sum_{i=1}^{n} Y_{i}^{*} . \]

We can calculate its (conditional) mean and variance. The mean is

\[ \mathbb{E}^{*}\left[\bar{Y}^{*}\right]=\mathbb{E}^{*}\left[\frac{1}{n} \sum_{i=1}^{n} Y_{i}^{*}\right]=\frac{1}{n} \sum_{i=1}^{n} \mathbb{E}^{*}\left[Y_{i}^{*}\right]=\frac{1}{n} \sum_{i=1}^{n} \bar{Y}=\bar{Y} \]

using (10.10). Thus the bootstrap sample mean \(\bar{Y}^{*}\) has a distribution centered at the sample mean \(\bar{Y}\). This is because the bootstrap observations \(Y_{i}^{*}\) are drawn from the bootstrap universe, which treats the EDF as the truth, and the mean of the latter distribution is \(\bar{Y}\).

The (conditional) variance of the bootstrap sample mean is

\[ \operatorname{var}^{*}\left[\bar{Y}^{*}\right]=\operatorname{var}^{*}\left[\frac{1}{n} \sum_{i=1}^{n} Y_{i}^{*}\right]=\frac{1}{n^{2}} \sum_{i=1}^{n} \operatorname{var}^{*}\left[Y_{i}^{*}\right]=\frac{1}{n^{2}} \sum_{i=1}^{n} \widehat{\Sigma}=\frac{1}{n} \widehat{\Sigma} \]

using (10.11). In the scalar case, \(\operatorname{var}^{*}\left[\bar{Y}^{*}\right]=\widehat{\sigma}^{2} / n\). This shows that the bootstrap variance of \(\bar{Y}^{*}\) is precisely described by the sample variance of the original observations. Again, this is because the bootstrap observations \(Y_{i}^{*}\) are drawn from the bootstrap universe.

We can extend this to any integer moment \(r\). Assume \(Y\) is scalar. Define the normalized bootstrap sample mean \(Z_{n}^{*}=\sqrt{n}\left(\bar{Y}^{*}-\bar{Y}\right)\). Using expressions from Section \(6.17\) of Probability and Statistics for Economists, the \(3^{r d}\) through \(6^{\text {th }}\) conditional moments of \(Z_{n}^{*}\) are

\[ \begin{aligned} &\mathbb{E}^{*}\left[Z_{n}^{* 3}\right]=\widehat{\kappa}_{3} / n^{1 / 2} \\ &\mathbb{E}^{*}\left[Z_{n}^{* 4}\right]=\widehat{\kappa}_{4} / n+3 \widehat{\kappa}_{2}^{2} \\ &\mathbb{E}^{*}\left[Z_{n}^{* 5}\right]=\widehat{\kappa}_{5} / n^{3 / 2}+10 \widehat{\kappa}_{3} \widehat{\kappa}_{2} / n^{1 / 2} \\ &\mathbb{E}^{*}\left[Z_{n}^{* 6}\right]=\widehat{\kappa}_{6} / n^{2}+\left(15 \widehat{\kappa}_{4} \kappa_{2}+10 \widehat{\kappa}_{3}^{2}\right) / n+15 \widehat{\kappa}_{2}^{3} \end{aligned} \]

where \(\widehat{\kappa}_{r}\) is the \(r^{t h}\) sample cumulant. Similar expressions can be derived for higher moments. The moments (10.14) are exact, not approximations.

10.12 Bootstrap Asymptotics

The bootstrap mean \(\bar{Y}^{*}\) is a sample average over \(n\) i.i.d. random variables, so we might expect it to converge in probability to its expectation. Indeed, this is the case, but we have to be a bit careful since the bootstrap mean has a conditional distribution (given the data) so we need to define convergence in probability for conditional distributions.

Definition \(10.1\) We say that a random vector \(Z_{n}^{*}\) converges in bootstrap probability to \(Z\) as \(n \rightarrow \infty\), denoted \(Z_{n}^{*} \underset{p^{*}}{\longrightarrow} Z\), if for all \(\epsilon>0\)

\[ \mathbb{P}^{*}\left[\left\|Z_{n}^{*}-Z\right\|>\epsilon\right] \underset{p}{\longrightarrow} 0 \]

To understand this definition recall that conventional convergence in probability \(Z_{n} \underset{p}{\longrightarrow}\) means that for a sufficiently large sample size \(n\), the probability is high that \(Z_{n}\) is arbitrarily close to its limit \(Z\). In contrast, Definition \(10.1\) says \(Z_{n}^{*} \underset{p^{*}}{ } Z\) means that for a sufficiently large \(n\), the probability is high that the conditional probability that \(Z_{n}^{*}\) is close to its limit \(Z\) is high. Note that there are two uses of probability - both unconditional and conditional.

Our label “convergence in bootstrap probability” is a bit unusual. The label used in much of the statistical literature is “convergence in probability, in probability” but that seems like a mouthful. That literature more often focuses on the related concept of “convergence in probability, almost surely” which holds if we replace the ” \(\underset{p}{\text { " }}\) convergence with almost sure convergence. We do not use this concept in this chapter as it is an unnecessary complication.

While we have stated Definition \(10.1\) for the specific conditional probability distribution \(\mathbb{P}^{*}\), the idea is more general and can be used for any conditional distribution and any sequence of random vectors.

The following may seem obvious but it is useful to state for clarity. Its proof is given in Section \(10.31 .\)

Theorem \(10.1\) If \(Z_{n} \underset{p}{\longrightarrow} Z\) as \(n \rightarrow \infty\) then \(Z_{n} \underset{p^{*}}{ } Z\).

Given Definition 10.1, we can establish a law of large numbers for the bootstrap sample mean. Theorem \(10.2\) Bootstrap WLLN. If \(Y_{i}\) are independent and uniformly integrable then \(\bar{Y}^{*}-\bar{Y} \underset{p^{*}}{\longrightarrow} 0\) and \(\bar{Y}^{*} \underset{p^{*}}{\longrightarrow} \mu=\mathbb{E}[Y]\) as \(n \rightarrow \infty\).

The proof (presented in Section 10.31) is somewhat different from the classical case as it is based on the Marcinkiewicz WLLN (Theorem 10.20, presented in Section 10.31).

Notice that the conditions for the bootstrap WLLN are the same for the conventional WLLN. Notice as well that we state two related but slightly different results. The first is that the difference between the bootstrap sample mean \(\bar{Y}^{*}\) and the sample mean \(\bar{Y}\) diminishes as the sample size diverges. The second result is that the bootstrap sample mean converges to the population mean \(\mu\). The latter is not surprising (since the sample mean \(\bar{Y}\) converges in probability to \(\mu\) ) but it is constructive to be precise since we are dealing with a new convergence concept.

Theorem 10.3 Bootstrap Continuous Mapping Theorem. If \(Z_{n}^{*} \underset{p^{*}}{ } c\) as \(n \rightarrow\) \(\infty\) and \(g(\cdot)\) is continuous at \(c\), then \(g\left(Z_{n}^{*}\right) \underset{p^{*}}{ } g(c)\) as \(n \rightarrow \infty\).

The proof is essentially identical to that of Theorem \(6.6\) so is omitted.

We next would like to show that the bootstrap sample mean is asymptotically normally distributed, but for that we need a definition of convergence for conditional distributions.

Definition \(10.2\) Let \(Z_{n}^{*}\) be a sequence of random vectors with conditional distributions \(G_{n}^{*}(x)=\mathbb{P}^{*}\left[Z_{n}^{*} \leq x\right]\). We say that \(Z_{n}^{*}\) converges in bootstrap distribution to \(Z\) as \(n \rightarrow \infty\), denoted \(Z_{n}^{*} \underset{d^{*}}{\longrightarrow}\), if for all \(x\) at which \(G(x)=\mathbb{P}[Z \leq x]\) is continuous, \(G_{n}^{*}(x) \underset{p}{\longrightarrow} G(x)\) as \(n \rightarrow \infty\).

The difference with the conventional definition is that Definition \(10.2\) treats the conditional distribution as random. An alternative label for Definition \(10.2\) is “convergence in distribution, in probability”.

We now state a CLT for the bootstrap sample mean, with a proof given in Section 10.31.

Theorem 10.4 Bootstrap CLT. If \(Y_{i}\) are i.i.d., \(\mathbb{E}\|Y\|^{2}<\infty\), and \(\Sigma=\operatorname{var}[Y]>0\), then as \(n \rightarrow \infty, \sqrt{n}\left(\bar{Y}^{*}-\bar{Y}\right) \underset{d^{*}}{\longrightarrow} \mathrm{N}(0, \Sigma)\).

Theorem \(10.4\) shows that the normalized bootstrap sample mean has the same asymptotic distribution as the sample mean. Thus the bootstrap distribution is asymptotically the same as the sampling distribution. A notable difference, however, is that the bootstrap sample mean is normalized by centering at the sample mean, not at the population mean. This is because \(\bar{Y}\) is the true mean in the bootstrap universe.

We next state the distributional form of the continuous mapping theorem for bootstrap distributions and the Bootstrap Delta Method. Theorem 10.5 Bootstrap Continuous Mapping Theorem

If \(Z_{n}^{*} \underset{d^{*}}{ } Z\) as \(n \rightarrow \infty\) and \(g: \mathbb{R}^{m} \rightarrow \mathbb{R}^{k}\) has the set of discontinuity points \(D_{g}\) such that \(\mathbb{P}^{*}\left[Z^{*} \in D_{g}\right]=0\), then \(g\left(Z_{n}^{*}\right) \underset{d^{*}}{\rightarrow} g(Z)\) as \(n \rightarrow \infty\).

Theorem 10.6 Bootstrap Delta Method: If \(\widehat{\mu} \underset{p}{\longrightarrow} \mu, \sqrt{n}\left(\widehat{\mu}^{*}-\widehat{\mu}\right) \underset{d^{*}}{\longrightarrow} \xi\), and \(g(u)\) is continuously differentiable in a neighborhood of \(\mu\), then as \(n \rightarrow \infty\)

\[ \sqrt{n}\left(g\left(\widehat{\mu}^{*}\right)-g(\widehat{\mu})\right) \underset{d^{*}}{\longrightarrow} \boldsymbol{G}^{\prime} \xi \]

where \(\boldsymbol{G}(x)=\frac{\partial}{\partial x} g(x)^{\prime}\) and \(\boldsymbol{G}=\boldsymbol{G}(\mu)\). In particular, if \(\xi \sim \mathrm{N}(0, \boldsymbol{V})\) then as \(n \rightarrow \infty\)

\[ \sqrt{n}\left(g\left(\widehat{\mu}^{*}\right)-g(\widehat{\mu})\right) \underset{d^{*}}{\longrightarrow} \mathrm{N}\left(0, \boldsymbol{G}^{\prime} \boldsymbol{V} \boldsymbol{G}\right) . \]

For a proof, see Exercise 10.7.

We state an analog of Theorem 6.10, which presented the asymptotic distribution for general smooth functions of sample means, which covers most econometric estimators.

Theorem 10.7 Under the assumptions of Theorem 6.10, that is, if \(Y_{i}\) is i.i.d., \(\mu=\mathbb{E}[h(Y)], \theta=g(\mu), \mathbb{E}\|h(Y)\|^{2}<\infty\), and \(\boldsymbol{G}(x)=\frac{\partial}{\partial x} g(x)^{\prime}\) is continuous in a neighborhood of \(\mu\), for \(\widehat{\theta}=g(\widehat{\mu})\) with \(\widehat{\mu}=\frac{1}{n} \sum_{i=1}^{n} h\left(Y_{i}\right)\) and \(\widehat{\theta}^{*}=g\left(\widehat{\mu}^{*}\right)\) with \(\widehat{\mu}^{*}=\frac{1}{n} \sum_{i=1}^{n} h\left(Y_{i}^{*}\right)\), as \(n \rightarrow \infty\)

\[ \sqrt{n}\left(\widehat{\theta}^{*}-\widehat{\theta}\right) \underset{d^{*}}{\longrightarrow} \mathrm{N}\left(0, \boldsymbol{V}_{\theta}\right) \]

where \(\boldsymbol{V}_{\theta}=\boldsymbol{G}^{\prime} \boldsymbol{V} \boldsymbol{G}, \boldsymbol{V}=\mathbb{E}\left[(h(Y)-\mu)(h(Y)-\mu)^{\prime}\right]\) and \(\boldsymbol{G}=\boldsymbol{G}(\mu)\).

For a proof, see Exercise 10.8.

Theorem \(10.7\) shows that the asymptotic distribution of the bootstrap estimator \(\widehat{\theta}^{*}\) is identical to that of the sample estimator \(\widehat{\theta}\). This means that we can learn the distribution of \(\widehat{\theta}\) from the bootstrap distribution, and hence perform asymptotically correct inference.

For some bootstrap applications we use bootstrap estimates of variance. The plug-in estimator of \(\boldsymbol{V}_{\boldsymbol{\theta}}\) is \(\widehat{\boldsymbol{V}}_{\theta}=\widehat{\boldsymbol{G}}^{\prime} \widehat{\boldsymbol{V}} \widehat{\boldsymbol{G}}\) where \(\widehat{\boldsymbol{G}}=\boldsymbol{G}(\widehat{\mu})\) and

\[ \widehat{\boldsymbol{V}}=\frac{1}{n} \sum_{i=1}^{n}\left(h\left(Y_{i}\right)-\widehat{\mu}\right)\left(h\left(Y_{i}\right)-\widehat{\mu}\right)^{\prime} . \]

The bootstrap version is

\[ \begin{aligned} &\widehat{\boldsymbol{V}}_{\theta}^{*}=\widehat{\boldsymbol{G}}^{* \prime} \widehat{\boldsymbol{V}}^{*} \widehat{\boldsymbol{G}}^{*} \\ &\widehat{\boldsymbol{G}}^{*}=\boldsymbol{G}\left(\widehat{\mu}^{*}\right) \\ &\widehat{\boldsymbol{V}}^{*}=\frac{1}{n} \sum_{i=1}^{n}\left(h\left(Y_{i}^{*}\right)-\widehat{\mu}^{*}\right)\left(h\left(Y_{i}^{*}\right)-\widehat{\mu}^{*}\right)^{\prime} . \end{aligned} \]

Application of the bootstrap WLLN and bootstrap CMT show that \(\widehat{\boldsymbol{V}}_{\theta}^{*}\) is consistent for \(\boldsymbol{V}_{\theta}\).

Theorem \(10.8\) Under the assumptions of Theorem 10.7, \(\widehat{\boldsymbol{V}}_{\theta}^{*} \underset{p^{*}}{\longrightarrow} \boldsymbol{V}_{\theta}\) as \(n \rightarrow \infty\).

For a proof, see Exercise 10.9.

10.13 Consistency of the Bootstrap Estimate of Variance

Recall the definition (10.7) of the bootstrap estimator of variance \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {boot }}\) of an estimator \(\widehat{\theta}\). In this section we explore conditions under which \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{\text {boot }}\) is consistent for the asymptotic variance of \(\widehat{\theta}\).

To do so it is useful to focus on a normalized version of the estimator so that the asymptotic variance is not degenerate. Suppose that for some sequence \(a_{n}\) we have

\[ Z_{n}=a_{n}(\widehat{\theta}-\theta) \underset{d}{\longrightarrow} \xi \]

and

\[ Z_{n}^{*}=a_{n}\left(\widehat{\theta}^{*}-\widehat{\theta}\right) \underset{d^{*}}{\longrightarrow} \xi \]

for some limit distribution \(\xi\). That is, for some normalization, both \(\hat{\theta}\) and \(\widehat{\theta}^{*}\) have the same asymptotic distribution. This is quite general as it includes the smooth function model. The conventional bootstrap estimator of the variance of \(Z_{n}\) is the sample variance of the bootstrap draws \(\left\{Z_{n}^{*}(b): b=1, \ldots, B\right\}\). This equals the estimator (10.7) multiplied by \(a_{n}^{2}\). Thus it is equivalent (up to scale) whether we discuss estimating the variance of \(\widehat{\theta}\) or \(Z_{n}\).

The bootstrap estimator of variance of \(Z_{n}\) is

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\theta}^{\text {boot,B }} &=\frac{1}{B-1} \sum_{b=1}^{B}\left(Z_{n}^{*}(b)-Z_{n}^{*}\right)\left(Z_{n}^{*}(b)-Z_{n}^{*}\right)^{\prime} \\ \bar{Z}_{n}^{*} &=\frac{1}{B} \sum_{b=1}^{B} Z_{n}^{*}(b) \end{aligned} \]

Notice that we index the estimator by the number of bootstrap replications \(B\).

Since \(Z_{n}^{*}\) converges in bootstrap distribution to the same asymptotic distribution as \(Z_{n}\), it seems reasonable to guess that the variance of \(Z_{n}^{*}\) will converge to that of \(\xi\). However, convergence in distribution is not sufficient for convergence in moments. For the variance to converge it is also necessary for the sequence \(Z_{n}^{*}\) to be uniformly square integrable. Theorem \(10.9\) If (10.15) and (10.16) hold for some sequence \(a_{n}\) and \(\left\|Z_{n}^{*}\right\|^{2}\) is uniformly integrable, then as \(B \rightarrow \infty\)

\[ \widehat{\boldsymbol{V}}_{\theta}^{\mathrm{boot}, \mathrm{B}} \underset{p^{*}}{\longrightarrow} \widehat{\boldsymbol{V}}_{\theta}^{\text {boot }}=\operatorname{var}\left[Z_{n}^{*}\right] \text {, } \]

and as \(n \rightarrow \infty\)

\[ \widehat{\boldsymbol{V}}_{\theta}^{\text {boot }} \underset{p^{*}}{\longrightarrow} \boldsymbol{V}_{\theta}=\operatorname{var}[\xi] . \]

This raises the question: Is the normalized sequence \(Z_{n}\) uniformly integrable? We spend the remainder of this section exploring this question and turn in the next section to trimmed variance estimators which do not require uniform integrability.

This condition is reasonably straightforward to verify for the case of a scalar sample mean with a finite variance. That is, suppose \(Z_{n}^{*}=\sqrt{n}\left(\bar{Y}^{*}-\bar{Y}\right)\) and \(\mathbb{E}\left[Y^{2}\right]<\infty\). In (10.14) we calculated the exact fourth central moment of \(Z_{n}^{*}\) :

\[ \mathbb{E}^{*}\left[Z_{n}^{* 4}\right]=\frac{\widehat{\kappa}_{4}}{n}+3 \widehat{\sigma}^{4}=\frac{\widehat{\mu}_{4}-3 \widehat{\sigma}^{4}}{n}+3 \widehat{\sigma}^{4} \]

where \(\widehat{\sigma}^{2}=n^{-1} \sum_{i=1}^{n}\left(Y_{i}-\bar{Y}\right)^{2}\) and \(\widehat{\mu}_{4}=n^{-1} \sum_{i=1}^{n}\left(Y_{i}-\bar{Y}\right)^{4}\). The assumption \(\mathbb{E}\left[Y^{2}\right]<\infty\) implies that \(\mathbb{E}\left[\widehat{\sigma}^{2}\right]=O(1)\) so \(\widehat{\sigma}^{2}=O_{p}(1)\). Furthermore, \(n^{-1} \widehat{\mu}_{4}=n^{-2} \sum_{i=1}^{n}\left(Y_{i}-\bar{Y}\right)^{4}=o_{p}(1)\) by the Marcinkiewicz WLLN (Theorem 10.20). It follows that

\[ \mathbb{E}^{*}\left[Z_{n}^{* 4}\right]=n^{2} \mathbb{E}^{*}\left[\left(\bar{Y}^{*}-\bar{Y}\right)^{4}\right]=O_{p}(1) . \]

Theorem \(6.13\) shows that this implies that \(Z_{n}^{* 2}\) is uniformly integrable. Thus if \(Y\) has a finite variance the normalized bootstrap sample mean is uniformly square integrable and the bootstrap estimate of variance is consistent by Theorem \(10.9\).

Now consider the smooth function model of Theorem 10.7. We can establish the following result.

Theorem 10.10 In the smooth function model of Theorem 10.7, if for some \(p \geq 1\) the \(p^{t h}\)-order derivatives of \(g(x)\) are bounded, then \(Z_{n}^{*}=\sqrt{n}\left(\widehat{\theta}^{*}-\widehat{\theta}\right)\) is uniformly square integrable and the bootstrap estimator of variance is consistent as in Theorem 10.9.

For a proof see Section \(10.31\).

This shows that the bootstrap estimate of variance is consistent for a reasonably broad class of estimators. The class of functions \(g(x)\) covered by this result includes all \(p^{t h}\)-order polynomials.

10.14 Trimmed Estimator of Bootstrap Variance

Theorem \(10.10\) showed that the bootstrap estimator of variance is consistent for smooth functions with a bounded \(p^{t h}\) order derivative. This is a fairly broad class but excludes many important applications. An example is \(\theta=\mu_{1} / \mu_{2}\) where \(\mu_{1}=\mathbb{E}\left[Y_{1}\right]\) and \(\mu_{2}=\mathbb{E}\left[Y_{2}\right]\). This function does not have a bounded derivative (unless \(\mu_{2}\) is bounded away from zero) so is not covered by Theorem 10.10. This is more than a technical issue. When \(\left(Y_{1}, Y_{2}\right)\) are jointly normally distributed then it is known that \(\widehat{\theta}=\bar{Y}_{1} / \bar{Y}_{2}\) does not possess a finite variance. Consequently we cannot expect the bootstrap estimator of variance to perform well. (It is attempting to estimate the variance of \(\widehat{\theta}\), which is infinity.)

In these cases it is preferred to use a trimmed estimator of bootstrap variance. Let \(\tau_{n} \rightarrow \infty\) be a sequence of positive trimming numbers satisfying \(\tau_{n}=O\left(e^{n / 8}\right)\). Define the trimmed statistic

\[ Z_{n}^{* *}=Z_{n}^{*} \mathbb{1}\left\{\left\|Z_{n}^{*}\right\| \leq \tau_{n}\right\} . \]

The trimmed bootstrap estimator of variance is

\[ \begin{aligned} \widehat{\boldsymbol{V}}_{\theta}^{\text {boot, }, \tau} &=\frac{1}{B-1} \sum_{b=1}^{B}\left(Z_{n}^{* *}(b)-Z_{n}^{* *}\right)\left(Z_{n}^{* *}(b)-Z_{n}^{* *}\right)^{\prime} \\ Z_{n}^{* *} &=\frac{1}{B} \sum_{b=1}^{B} Z_{n}^{* *}(b) . \end{aligned} \]

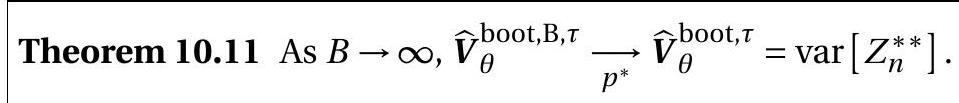

We first examine the behavior of \(\widehat{\boldsymbol{V}}_{\theta}^{\text {boot, } \mathrm{B}}\) as the number of bootstrap replications \(B\) grows to infinity. It is a sample variance of independent bounded random vectors. Thus by the bootstrap WLLN (Theorem 10.2) \(\widehat{\boldsymbol{V}}_{\theta}^{\mathrm{boot}, \mathrm{B}, \tau}\) converges in bootstrap probability to the variance of \(Z_{n}^{* *}\).

We next examine the behavior of the bootstrap estimator \(\widehat{\boldsymbol{V}}_{\theta}^{\text {boot, } \tau}\) as \(n\) grows to infinity. We focus on the smooth function model of Theorem 10.7, which showed that \(Z_{n}^{*}=\sqrt{n}\left(\widehat{\theta}^{*}-\widehat{\theta}\right) \underset{d^{*}}{\longrightarrow} \sim \mathrm{N}\left(0, \boldsymbol{V}_{\theta}\right)\). Since the trimming is asymptotically negligible, it follows that \(Z_{n}^{* *} \underset{d^{*}}{\longrightarrow}\). If we can show that \(Z_{n}^{* *}\) is uniformly square integrable, Theorem \(10.9\) shows that \(\operatorname{var}\left[Z_{n}^{* *}\right] \rightarrow \operatorname{var}[Z]=\boldsymbol{V}_{\theta}\) as \(n \rightarrow \infty\). This is shown in the following result, whose proof is presented in Section 10.31.

Theorem \(10.12\) Under the assumptions of Theorem 10.7, \(\widehat{\boldsymbol{V}}_{\theta}^{\mathrm{boot}, \tau} \underset{p^{*}}{\longrightarrow} \boldsymbol{V}_{\theta} .\)

Theorems \(10.11\) and \(10.12\) show that the trimmed bootstrap estimator of variance is consistent for the asymptotic variance in the smooth function model, which includes most econometric estimators. This justifies bootstrap standard errors as consistent estimators for the asymptotic distribution.

An important caveat is that these results critically rely on the trimmed variance estimator. This is a critical caveat as conventional statistical packages (e.g. Stata) calculate bootstrap standard errors using the untrimmed estimator (10.7). Thus there is no guarantee that the reported standard errors are consistent. The untrimmed variance estimator works in the context of Theorem \(10.10\) and whenever the bootstrap statistic is uniformly square integrable, but not necessarily in general applications.

In practice, it may be difficult to know how to select the trimming sequence \(\tau_{n}\). The rule \(\tau_{n}=O\left(e^{n / 8}\right)\) does not provide practical guidance. Instead, it may be useful to think about trimming in terms of percentages of the bootstrap draws. Thus we can set \(\tau_{n}\) so that a given small percentage \(\gamma_{n}\) is trimmed. For theoretical interpretation we would set \(\gamma_{n} \rightarrow 0\) as \(n \rightarrow \infty\). In practice we might set \(\gamma_{n}=1 %\).

10.15 Unreliability of Untrimmed Bootstrap Standard Errors

In the previous section we presented a trimmed bootstrap variance estimator which should be used to form bootstrap standard errors for nonlinear estimators. Otherwise, the untrimmed estimator is potentially unreliable.

This is an unfortunate situation, because reporting of bootstrap standard errors is commonplace in contemporary applied econometric practice, and standard applications (including Stata) use the untrimmed estimator.

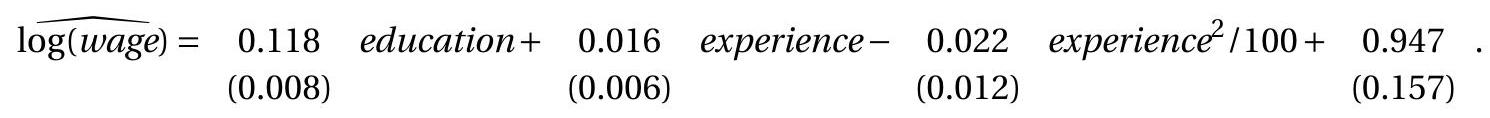

To illustrate the seriousness of the problem we use the simple wage regression (7.31) which we repeat here. This is the subsample of married Black women with 982 observations. The point estimates and standard errors are

We are interested in the experience level which maximizes expected log wages \(\theta_{3}=-50 \beta_{2} / \beta_{3}\). The point estimate and standard errors calculated with different methods are reported in Table \(10.3\) below.

The point estimate of the experience level with maximum earnings is \(\widehat{\theta}_{3}=35\). The asymptotic and jackknife standard errors are about 7 . The bootstrap standard error, however, is 825 ! Confused by this unusual value we rerun the bootstrap and obtain a standard error of 544 . Each was computed with 10,000 bootstrap replications. The fact that the two bootstrap standard errors are considerably different when recomputed (with different starting seeds) is indicative of moment failure. When there is an enormous discrepancy like this between the asymptotic and bootstrap standard error, and between bootstrap runs, it is a signal that there may be moment failure and consequently bootstrap standard errors are unreliable.

A trimmed bootstrap with \(\tau=25\) (set to slightly exceed three asymptotic standard errors) produces a more reasonable standard error of \(10 .\)

One message from this application is that when different methods produce very different standard errors we should be cautious about trusting any single method. The large discrepancies indicate poor asymptotic approximations, rendering all methods inaccurate. Another message is to be cautious about reporting conventional bootstrap standard errors. Trimmed versions are preferred, especially for nonlinear functions of estimated coefficients.

Table 10.3: Experience Level Which Maximizes Expected log Wages

| Estimate | \(35.2\) |

|---|---|

| Asymptotic s.e. | \((7.0)\) |

| Jackknife s.e. | \((7.0)\) |

| Bootstrap s.e. (standard) | \((825)\) |

| Bootstrap s.e. (repeat) | \((544)\) |

| Bootstrap s.e. (trimmed) | \((10.1)\) |

10.16 Consistency of the Percentile Interval

Recall the percentile interval (10.8). We now provide conditions under which it has asymptotically correct coverage. Theorem \(10.13\) Assume that for some sequence \(a_{n}\)

\[ a_{n}(\widehat{\theta}-\theta) \underset{d}{\longrightarrow} \xi \]

and

\[ a_{n}\left(\widehat{\theta}^{*}-\widehat{\theta}\right) \underset{d^{*}}{\longrightarrow} \xi \]

where \(\xi\) is continuously distributed and symmetric about zero. Then \(\mathbb{P}\left[\theta \in C^{\mathrm{pc}}\right] \rightarrow 1-\alpha\) as \(n \rightarrow \infty\)

The assumptions (10.18)-(10.19) hold for the smooth function model of Theorem 10.7, so this result incorporates many applications. The beauty of Theorem \(10.13\) is that the simple confidence interval \(C^{\mathrm{pc}}\) - which does not require technical calculation of asymptotic standard errors - has asymptotically valid coverage for any estimator which falls in the smooth function class, as well as any other estimator satisfying the convergence results (10.18)-(10.19) with \(\xi\) symmetrically distributed. The conditions are weaker than those required for consistent bootstrap variance estimation (and normal-approximation confidence intervals) because it is not necessary to verify that \(\widehat{\theta}^{*}\) is uniformly integrable, nor necessary to employ trimming.

The proof of Theorem \(10.7\) is not difficult. The convergence assumption (10.19) implies that the \(\alpha^{t h}\) quantile of \(a_{n}\left(\widehat{\theta}^{*}-\widehat{\theta}\right)\), which is \(a_{n}\left(q_{\alpha}^{*}-\widehat{\theta}\right)\) by quantile equivariance, converges in probability to the \(\alpha^{t h}\) quantile of \(\xi\), which we can denote as \(\bar{q}_{\alpha}\). Thus

\[ a_{n}\left(q_{\alpha}^{*}-\widehat{\theta}\right) \underset{p}{\longrightarrow} \bar{q}_{\alpha} . \]

Let \(H(x)=\mathbb{P}[\xi \leq x]\) be the distribution function of \(\xi\). The assumption of symmetry implies \(H(-x)=\) \(1-H(x)\). Then the percentile interval has coverage

\[ \begin{aligned} \mathbb{P}\left[\theta \in C^{\mathrm{pc}}\right] &=\mathbb{P}\left[q_{\alpha / 2}^{*} \leq \theta \leq q_{1-\alpha / 2}^{*}\right] \\ &=\mathbb{P}\left[-a_{n}\left(q_{\alpha / 2}^{*}-\widehat{\theta}\right) \geq a_{n}(\widehat{\theta}-\theta) \geq-a_{n}\left(q_{1-\alpha / 2}^{*}-\widehat{\theta}\right)\right] \\ & \rightarrow \mathbb{P}\left[-\bar{q}_{\alpha / 2} \geq \xi \geq-\bar{q}_{1-\alpha / 2}\right] \\ &=H\left(-\bar{q}_{\alpha / 2}\right)-H\left(-\bar{q}_{1-\alpha / 2}\right) \\ &=H\left(\bar{q}_{1-\alpha / 2}\right)-H\left(\bar{q}_{\alpha / 2}\right) \\ &=1-\alpha . \end{aligned} \]

The convergence holds by (10.18) and (10.20). The following equality uses the definition of \(H\), the nextto-last is the symmetry of \(H\), and the final equality is the definition of \(\bar{q}_{\alpha}\). This establishes Theorem \(10.13 .\)

Theorem \(10.13\) seems quite general, but it critically rests on the assumption that the asymptotic distribution \(\xi\) is symmetrically distributed about zero. This may seem innocuous since conventional asymptotic distributions are normal and hence symmetric, but it deserves further scrutiny. It is not merely a technical assumption - an examination of the steps in the preceeding argument isolate quite clearly that if the symmetry assumption is violated then the asymptotic coverage will not be \(1-\alpha\). While Theorem \(10.13\) does show that the percentile interval is asymptotically valid for a conventional asymptotically normal estimator, the reliance on symmetry in the argument suggests that the percentile method will work poorly when the finite sample distribution is asymmetric. This turns out to be the case and leads us to consider alternative methods in the following sections. It is also worthwhile to investigate a finite sample justification for the percentile interval based on a heuristic analogy due to Efron.

Assume that there exists an unknown but strictly increasing transformation \(\psi(\theta)\) such that \(\psi(\widehat{\theta})-\) \(\psi(\theta)\) has a pivotal distribution \(H(u)\) (does not vary with \(\theta\) ) which is symmetric about zero. For example, if \(\widehat{\theta} \sim \mathrm{N}\left(\theta, \sigma^{2}\right)\) we can set \(\psi(\theta)=\theta / \sigma\). Alternatively, if \(\widehat{\theta}=\exp (\widehat{\mu})\) and \(\widehat{\mu} \sim \mathrm{N}\left(\mu, \sigma^{2}\right)\) then we can set \(\psi(\theta)=\) \(\log (\theta) / \sigma\)

To assess the coverage of the percentile interval, observe that since the distribution \(H\) is pivotal the bootstrap distribution \(\psi\left(\widehat{\theta}^{*}\right)-\psi(\widehat{\theta})\) also has distribution \(H(u)\). Let \(\bar{q}_{\alpha}\) be the \(\alpha^{\text {th }}\) quantile of the distribution \(H\). Since \(q_{\alpha}^{*}\) is the \(\alpha^{t h}\) quantile of the distribution of \(\widehat{\theta}^{*}\) and \(\psi\left(\widehat{\theta}^{*}\right)-\psi(\widehat{\theta})\) is a monotonic transformation of \(\widehat{\theta}^{*}\), by the quantile equivariance property we deduce that \(\bar{q}_{\alpha}+\psi(\widehat{\theta})=\psi\left(q_{\alpha}^{*}\right)\). The percentile interval has coverage

\[ \begin{aligned} \mathbb{P}\left[\theta \in C^{\mathrm{pc}}\right] &=\mathbb{P}\left[q_{\alpha / 2}^{*} \leq \theta \leq q_{1-\alpha / 2}^{*}\right] \\ &=\mathbb{P}\left[\psi\left(q_{\alpha / 2}^{*}\right) \leq \psi(\theta) \leq \psi\left(q_{1-\alpha / 2}^{*}\right)\right] \\ &=\mathbb{P}\left[\psi(\widehat{\theta})-\psi\left(q_{\alpha / 2}^{*}\right) \geq \psi(\widehat{\theta})-\psi(\theta) \geq \psi(\widehat{\theta})-\psi\left(q_{1-\alpha / 2}^{*}\right)\right] \\ &=\mathbb{P}\left[-\bar{q}_{\alpha / 2} \geq \psi(\widehat{\theta})-\psi(\theta) \geq-\bar{q}_{1-\alpha / 2}\right] \\ &=H\left(-\bar{q}_{\alpha / 2}\right)-H\left(-\bar{q}_{1-\alpha / 2}\right) \\ &=H\left(\bar{q}_{1-\alpha / 2}\right)-H\left(\bar{q}_{\alpha / 2}\right) \\ &=1-\alpha . \end{aligned} \]

The second equality applies the monotonic transformation \(\psi(u)\) to all elements. The fourth uses the relationship \(\bar{q}_{\alpha}+\psi(\widehat{\theta})=\psi\left(q_{\alpha}^{*}\right)\). The fifth uses the defintion of \(H\). The sixth uses the symmetry property of \(H\), and the final is by the definition of \(\bar{q}_{\alpha}\) as the \(\alpha^{t h}\) quantile of \(H\).

This calculation shows that under these assumptions the percentile interval has exact coverage \(1-\alpha\). The nice thing about this argument is the introduction of the unknown transformation \(\psi(u)\) for which the percentile interval automatically adapts. The unpleasant feature is the assumption of symmetry. Similar to the asymptotic argument the calculation strongly relies on the symmetry of distribution \(H(x)\). Without symmetry the coverage will be incorrect.

Intuitively, we expect that when the assumptions are approximately true then the percentile interval will have approximately correct coverage. Thus so long as there is a transformation \(\psi(u)\) such that \(\psi(\widehat{\theta})-\) \(\psi(\theta)\) is approximately pivotal and symmetric about zero, then the percentile interval should work well.

This argument has the following application. Suppose that the parameter of interest is \(\theta=\exp (\mu)\) where \(\mu=\mathbb{E}[Y]\) and suppose \(Y\) has a pivotal symmetric distribution about \(\mu\). Then even though \(\widehat{\theta}=\) \(\exp (\bar{Y})\) does not have a symmetric distribution, the percentile interval applied to \(\widehat{\theta}\) will have the correct coverage, because the monotonic transformation \(\log (\widehat{\theta})\) has a pivotal symmetric distribution.

10.17 Bias-Corrected Percentile Interval