Chapter 14: Time Series

14 Time Series

14.1 Introduction

A time series \(Y_{t} \in \mathbb{R}^{m}\) is a process which is sequentially ordered over time. In this textbook we focus on discrete time series where \(t\) is an integer, though there is also a considerable literature on continuoustime processes. To denote the time period it is typical to use the subscript \(t\). The time series is univariate if \(m=1\) and multivariate if \(m>1\). This chapter is primarily focused on univariate time series models, though we describe the concepts for the multivariate case when the added generality does not add extra complication.

Most economic time series are recorded at discrete intervals such as annual, quarterly, monthly, weekly, or daily. The number of observaed periods \(s\) per year is called the frequency. In most cases we will denote the observed sample by the periods \(t=1, \ldots, n\).

Because of the sequential nature of time series we expect that observations close in calender time, e.g. \(Y_{t}\) and its lagged value \(Y_{t-1}\), will be dependent. This type of dependence structure requires a different distributional theory than for cross-sectional and clustered observations since we cannot divide the sample into independent groups. Many of the issues which distinguish time series from cross-section econometrics concern the modeling of these dependence relationships.

There are many excellent textbooks for time series analysis. The encyclopedic standard is Hamilton (1994). Others include Harvey (1990), Tong (1990), Brockwell and Davis (1991), Fan and Yao (2003), Lütkepohl (2005), Enders (2014), and Kilian and Lütkepohl (2017). For textbooks on the related subject of forecasting see Granger and Newbold (1986), Granger (1989), and Elliott and Timmermann (2016).

14.2 Examples

Many economic time series are macroeconomic variables. An excellent resource for U.S. macroeconomic data are the FRED-MD and FRED-QD databases which contain a wide set of monthly and quarterly variables, assembled and maintained by the St. Louis Federal Reserve Bank. See McCracken and Ng (2016, 2021). The datasets FRED-MD and FRED-QD for 1959-2017 are posted on the textbook website. FRED-MD has 129 variables over 708 months. FRED-QD has 248 variables over 236 quarters.

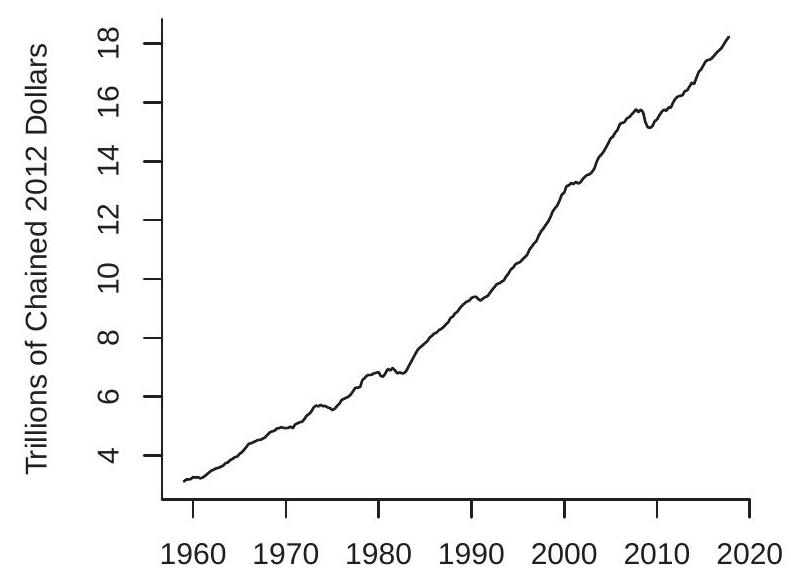

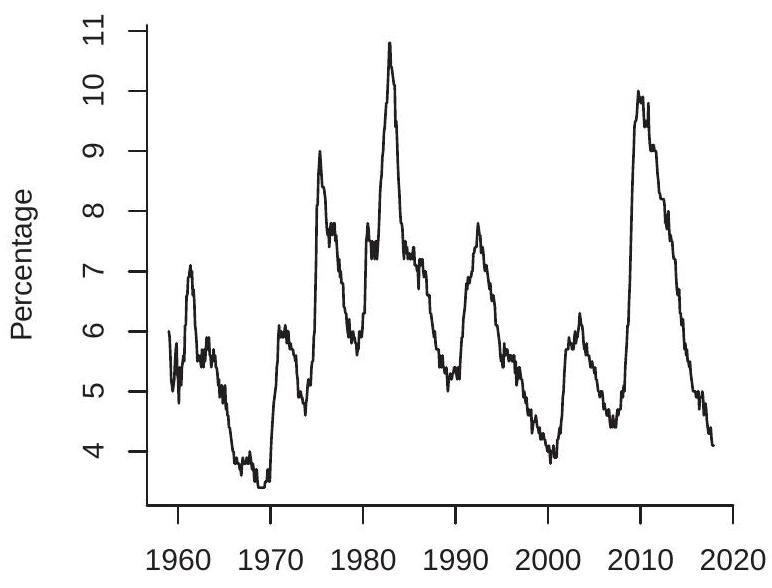

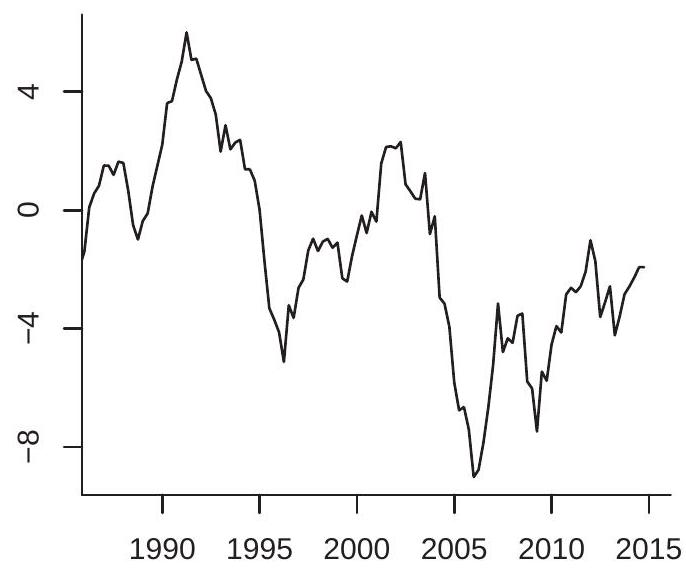

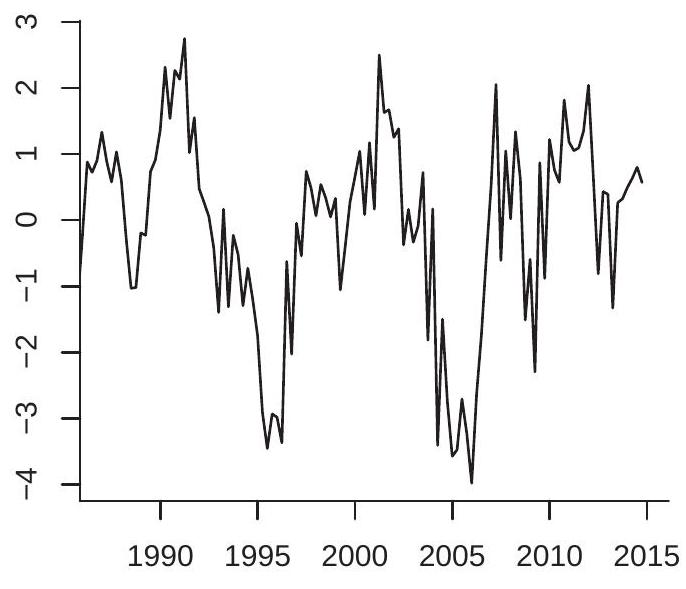

When working with time series data one of the first tasks is to plot the series against time. In Figures 14.1-14.2 we plot eight example time series from FRED-QD and FRED-MD. As is conventional, the x-axis displays calendar dates (in this case years) and the y-axis displays the level of the series. The series plotted are: (1a) Real U.S. GDP ( \(g d p c 1)\); (1b) U.S.-Canada exchange rate (excausx); (1c) Interest rate on U.S. 10-year Treasury bond (gs10); (1d) Real crude oil price (oilpricex); (2a) U.S. unemployment rate (unrate); (2b) U.S. real non-durables consumption growth rate (growth rate of \(p c n d x\) ); (2c) U.S. CPI inflation rate

- U.S. Real GDP

.jpg)

- Interest Rate on 10-Year Treasury

.jpg)

- U.S.-Canada Exchange Rate

.jpg)

- Real Crude Oil Price

Figure 14.1: GDP, Exchange Rate, Interest Rate, Oil Price

(growth rate of cpiaucsl); (2d) S&P 500 return (growth rate of \(s p 500\) ). (1a) and (2b) are quarterly series, the rest are monthly.

Many of the plots are smooth, meaning that the neighboring values (in calendar time) are similar to one another and hence are serially correlated. Some of the plots are non-smooth, meaning that the neighboring values are less similar and hence less correlated. At least one plot (real GDP) displays an upward trend.

- U.S. Unemployment Rate

.jpg)

- U.S. Inflation Rate

.jpg)

- Consumption Growth Rate

.jpg)

- S&P 500 Return

Figure 14.2: Unemployment Rate, Consumption Growth Rate, Inflation Rate, and S&P 500 Return

14.3 Differences and Growth Rates

It is common to transform series by taking logarithms, differences, and/or growth rates. Three of the series in Figure \(14.2\) (consumption growth, inflation [growth rate of CPI index], and S&P 500 return) are displayed as growth rates. This may be done for a number of reasons. The most credible is that this is the suitable transformation for the desired analysis.

Many aggregate series such as real GDP are transformed by taking natural logarithms. This flattens the apparent exponential growth and makes fluctuations proportionate.

The first difference of a series \(Y_{t}\) is

\[ \Delta Y_{t}=Y_{t}-Y_{t-1} \]

The second difference is

\[ \Delta^{2} Y_{t}=\Delta Y_{t}-\Delta Y_{t-1} . \]

Higher-order differences can be defined similarly but are not used in practice. The annual, or year-onyear, change of a series \(Y_{t}\) with frequency \(s\) is

\[ \Delta_{s} Y_{t}=Y_{t}-Y_{t-s} . \]

There are several methods to calculate growth rates. The one-period growth rate is the percentage change from period \(t-1\) to period \(t\) :

\[ Q_{t}=100\left(\frac{\Delta Y_{t}}{Y_{t-1}}\right)=100\left(\frac{Y_{t}}{Y_{t-1}}-1\right) . \]

The multiplication by 100 is not essential but scales \(Q_{t}\) so that it is a percentage. This is the transformation used for the plots in Figures \(14.2\) (b)-(d). For quarterly data, \(Q_{t}\) is the quarterly growth rate. For monthly data, \(Q_{t}\) is the monthly growth rate.

For non-annual data the one-period growth rate (14.1) may be unappealing for interpretation. Consequently, statistical agencies commonly report “annualized” growth rates which is the annual growth which would occur if the one-period growth rate is compounded for a full year. For a series with frequency \(s\) the annualized growth rate is

\[ A_{t}=100\left(\left(\frac{Y_{t}}{Y_{t-1}}\right)^{s}-1\right) . \]

Notice that \(A_{t}\) is a nonlinear function of \(Q_{t}\).

Year-on-year growth rates are

\[ G_{t}=100\left(\frac{\Delta_{s} Y_{t}}{Y_{t-s}}\right)=100\left(\frac{Y_{t}}{Y_{t-s}}-1\right) . \]

These do not need annualization.

Growth rates are closely related to logarithmic transformations. For small growth rates, \(Q_{t}, A_{t}\) and \(G_{t}\) are approximately first differences in logarithms:

\[ \begin{aligned} Q_{t} & \simeq 100 \Delta \log Y_{t} \\ A_{t} & \simeq s \times 100 \Delta \log Y_{t} \\ G_{t} & \simeq 100 \Delta_{s} \log Y_{t} . \end{aligned} \]

For analysis using growth rates I recommend the one-period growth rates (14.1) or differenced logarithms rather than the annualized growth rates (14.2). While annualized growth rates are preferred for reporting, they are a highly nonlinear transformation which is unnatural for statistical analysis. Differenced logarithms are the most common choice and are recommended for models which combine log-levels and growth rates for then the models are linear in all variables.

14.4 Stationarity

Recall that cross-sectional observations are conventionally treated as random draws from an underlying population. This is not an appropriate model for time series processes due to serial dependence. Instead, we treat the observed sample \(\left\{Y_{1}, \ldots, Y_{n}\right\}\) as a realization of a dependent stochastic process. It is often useful to view \(\left\{Y_{1}, \ldots, Y_{n}\right\}\) as a subset of an underlying doubly-infinite sequence \(\left\{\ldots, Y_{t-1}, Y_{t}, Y_{t+1}, \ldots\right\}\).

A random vector \(Y_{t}\) can be characterized by its distribution. A set such as \(\left(Y_{t}, Y_{t+1}, \ldots, Y_{t+\ell}\right)\) can be characterized by its joint distribution. Important features of these distributions are their means, variances, and covariances. Since there is only one observed time series sample, in order to learn about these distributions there needs to be some sort of constancy. This may only hold after a suitable transformation such as growth rates (as discussed in the previous section).

The most commonly assumed form of constancy is stationarity. There are two definitions. The first is sufficient for construction of linear models.

Definition \(14.1\left\{Y_{t}\right\}\) is covariance or weakly stationary if the expectation \(\mu=\) \(\mathbb{E}\left[Y_{t}\right]\) and covariance matrix \(\Sigma=\operatorname{var}\left[Y_{t}\right]=\mathbb{E}\left[\left(Y_{t}-\mu\right)\left(Y_{t}-\mu\right)^{\prime}\right]\) are finite and are independent of \(t\), and the autocovariances

\[ \Gamma(k)=\operatorname{cov}\left(Y_{t}, Y_{t-k}\right)=\mathbb{E}\left[\left(Y_{t}-\mu\right)\left(Y_{t-k}-\mu\right)^{\prime}\right] \]

are independent of \(t\) for all \(k\)

In the univariate case we typically write the variance as \(\sigma^{2}\) and autocovariances as \(\gamma(k)\).

The expectation \(\mu\) and variance \(\Sigma\) are features of the marginal distribution of \(Y_{t}\) (the distribution of \(Y_{t}\) at a specific time period \(t\) ). Their constancy as stated in the above definition means that these features of the distribution are stable over time.

The autocovariances \(\Gamma(k)\) are features of the bivariate distributions of \(\left(Y_{t}, Y_{t-k}\right)\). Their constancy as stated in the definition means that the correlation patterns between adjacent \(Y_{t}\) are stable over time and only depend on the number of time periods \(k\) separating the variables. By symmetry we have \(\Gamma(-k)=\) \(\Gamma(k)^{\prime}\). In the univariate case this simplifies to \(\gamma(-k)=\gamma(k)\). The autocovariances \(\Gamma(k)\) are finite under the assumption that the covariance matrix \(\Sigma\) is finite by the Cauchy-Schwarz inequality.

The autocovariances summarize the linear dependence between \(Y_{t}\) and its lags. A scale-free measure of linear dependence in the univariate case are the autocorrelations

\[ \rho(k)=\operatorname{corr}\left(Y_{t}, Y_{t-k}\right)=\frac{\operatorname{cov}\left(Y_{t}, Y_{t-k}\right)}{\sqrt{\operatorname{var}\left[Y_{t}\right] \operatorname{var}\left[Y_{t-1}\right]}}=\frac{\gamma(k)}{\sigma^{2}}=\frac{\gamma(k)}{\gamma(0)} . \]

Notice by symmetry that \(\rho(-k)=\rho(k)\).

The second definition of stationarity concerns the entire joint distribution.

Definition 14.2 \(\left\{Y_{t}\right\}\) is strictly stationary if the joint distribution of \(\left(Y_{t}, \ldots, Y_{t+\ell}\right)\) is independent of \(t\) for all \(\ell\). This is the natural generalization of the cross-section definition of identical distributions. Strict stationarity implies that the (marginal) distribution of \(Y_{t}\) does not vary over time. It also implies that the bivariate distributions of \(\left(Y_{t}, Y_{t+1}\right)\) and multivariate distributions of \(\left(Y_{t}, \ldots, Y_{t+\ell}\right)\) are stable over time. Under the assumption of a bounded variance a strictly stationary process is covariance stationary \({ }^{1}\).

For formal statistical theory we generally require the stronger assumption of strict stationarity. Therefore if we label a process as “stationary” you should interpret it as meaning “strictly stationary”.

The core meaning of both weak and strict stationarity is the same - that the distribution of \(Y_{t}\) is stable over time. To understand the concept it may be useful to review the plots in Figures 14.1-14.2. Are these stationary processes? If so, we would expect that the expectation and variance to be stable over time. This seems unlikely to apply to the series in Figure 14.1, as in each case it is difficult to describe what is the “typical” value of the series. Stationarity may be appropriate for the series in Figure \(14.2\) as each oscillates with a fairly regular pattern. It is difficult, however, to know whether or not a given time series is stationary simply by examining a time series plot.

A straightforward but essential relationship is that an i.i.d. process is strictly stationary.

Theorem 14.1 If \(Y_{t}\) is i.i.d., then it strictly stationary.

Here are some examples of strictly stationary scalar processes. In each, \(e_{t}\) is i.i.d. and \(\mathbb{E}\left[e_{t}\right]=0\).

Example 14.1 \(Y_{t}=e_{t}+\theta e_{t-1}\).

Example 14.2 \(Y_{t}=Z\) for some random variable \(Z\).

Example 14.3 \(Y_{t}=(-1)^{t} Z\) for a random variable \(Z\) which is symmetrically distributed about 0 .

Here are some examples of processes which are not stationary.

Example 14.4 \(Y_{t}=t\).

Example 14.5 \(Y_{t}=(-1)^{t}\).

Example 14.6 \(Y_{t}=\cos (\theta t)\).

Example 14.7 \(Y_{t}=\sqrt{t} e_{t}\).

Example 14.8 \(Y_{t}=e_{t}+t^{-1 / 2} e_{t-1}\).

Example 14.9 \(Y_{t}=Y_{t-1}+e_{t}\) with \(Y_{0}=0\).

From the examples we can see that stationarity means that the distribution is constant over time. It does not mean, however, that the process has some sort of limited dependence, nor that there is an absence of periodic patterns. These restrictions are associated with the concepts of ergodicity and mixing which we shall introduce in subsequent sections.

\({ }^{1}\) More generally, the two classes are non-nested since strictly stationary infinite variance processes are not covariance stationary.

14.5 Transformations of Stationary Processes

One of the important properties of strict stationarity is that it is preserved by transformation. That is, transformations of strictly stationary processes are also strictly stationary. This includes transformations which include the full history of \(Y_{t}\).

Theorem 14.2 If \(Y_{t}\) is strictly stationary and \(X_{t}=\phi\left(Y_{t}, Y_{t-1}, Y_{t-2}, \ldots\right) \in \mathbb{R}^{q}\) is a random vector then \(X_{t}\) is strictly stationary.

Theorem \(14.2\) is extremely useful both for the study of stochastic processes which are constructed from underlying errors and for the study of sample statistics such as linear regression estimators which are functions of sample averages of squares and cross-products of the original data.

We give the proof of Theorem \(14.2\) in Section 14.47.

14.6 Convergent Series

A transformation which includes the full past history is an infinite-order moving average. For scalar \(Y\) and coefficients \(a_{j}\) define the vector process

\[ X_{t}=\sum_{j=0}^{\infty} a_{j} Y_{t-j} . \]

Many time-series models involve representations and transformations of the form (14.3).

The infinite series (14.3) exists if it is convergent, meaning that the sequence \(\sum_{j=0}^{N} a_{j} Y_{t-j}\) has a finite limit as \(N \rightarrow \infty\). Since the inputs \(Y_{t}\) are random we define this as a probability limit.

Definition 14.3 The infinite series (14.3) converges almost surely if \(\sum_{j=0}^{N} a_{j} Y_{t-j}\) has a finite limit as \(N \rightarrow \infty\) with probability one. In this case we describe \(X_{t}\) as convergent.

Theorem 14.3 If \(Y_{t}\) is strictly stationary, \(\mathbb{E}|Y|<\infty\), and \(\sum_{j=0}^{\infty}\left|a_{j}\right|<\infty\), then (14.3) converges almost surely. Furthermore, \(X_{t}\) is strictly stationary.

The proof of Theorem \(14.3\) is provided in Section \(14.47\).

14.7 Ergodicity

Stationarity alone is not sufficient for the weak law of large numbers as there are strictly stationary processes with no time series variation. As we described earlier, an example of a stationary process is \(Y_{t}=Z\) for some random variable \(Z\). This is random but constant over all time. An implication is that the sample mean of \(Y_{t}=Z\) will be inconsistent for the population expectation.

What is a minimal assumption beyond stationarity so that the law of large numbers applies? This topic is called ergodicity. It is sufficiently important that it is treated as a separate area of study. We mention only a few highlights here. For a rigorous treatment see a standard textbook such as Walters (1982).

A time series \(Y_{t}\) is ergodic if all invariant events are trivial, meaning that any event which is unaffected by time-shifts has probability either zero or one. This definition is rather abstract and difficult to grasp but fortunately it is not needed by most economists.

A useful intuition is that if \(Y_{t}\) is ergodic then its sample paths will pass through all parts of the sample space never getting “stuck” in a subregion.

We will first describe the properties of ergodic series which are relevant for our needs and follow with the more rigorous technical definitions. For proofs of the results see Section 14.47.

First, many standard time series processes can be shown to be ergodic. A useful starting point is the observation that an i.i.d. sequence is ergodic.

Theorem 14.4 If \(Y_{t} \in \mathbb{R}^{m}\) is i.i.d. then it strictly stationary and ergodic.

Second, ergodicity, like stationarity, is preserved by transformation.

Theorem 14.5 If \(Y_{t} \in \mathbb{R}^{m}\) is strictly stationary and ergodic and \(X_{t}=\) \(\phi\left(Y_{t}, Y_{t-1}, Y_{t-2}, \ldots\right)\) is a random vector, then \(X_{t}\) is strictly stationary and ergodic.

As an example, the infinite-order moving average transformation (14.3) is ergodic if the input is ergodic and the coefficients are absolutely convergent.

Theorem 14.6 If \(Y_{t}\) is strictly stationary, ergodic, \(\mathbb{E}|Y|<\infty\), and \(\sum_{j=0}^{\infty}\left|a_{j}\right|<\infty\) then \(X_{t}=\sum_{j=0}^{\infty} a_{j} Y_{t-j}\) is strictly stationary and ergodic.

We now present a useful property. It is that the Cesàro sum of the autocovariances limits to zero.

Theorem 14.7 If \(Y_{t} \in \mathbb{R}\) is strictly stationary, ergodic, and \(\mathbb{E}\left[Y^{2}\right]<\infty\), then

\[ \lim _{n \rightarrow \infty} \frac{1}{n} \sum_{\ell=1}^{n} \operatorname{cov}\left(Y_{t}, Y_{t+\ell}\right)=0 . \]

The result (14.4) can be interpreted as that the autocovariances “on average” tend to zero. Some authors have mis-stated ergodicity as implying that the covariances tend to zero but this is not correct, as (14.4) allows, for example, the non-convergent sequence \(\operatorname{cov}\left(Y_{t}, Y_{t+\ell}\right)=(-1)^{\ell}\). The reason why (14.4) is particularly useful is because it is sufficient for the WLLN as we discover later in Theorem 14.9.

We now give the formal definition of ergodicity for interested readers. As the concepts will not be used again most readers can safely skip this discussion.

As we stated above, by definition the series \(Y_{t} \in \mathbb{R}^{m}\) is ergodic if all invariant events are trivial. To understand this we introduce some technical definitions. First, we can write an event as \(A=\left\{\widetilde{Y}_{t} \in G\right\}\) where \(\widetilde{Y}_{t}=\left(\ldots, Y_{t-1}, Y_{t}, Y_{t+1}, \ldots\right)\) is an infinite history and \(G \subset \mathbb{R}^{m \infty}\). Second, the \(\ell^{t h}\) time-shift of \(\widetilde{Y}_{t}\) is defined as \(\widetilde{Y}_{t+\ell}=\left(\ldots, Y_{t-1+\ell}, Y_{t+\ell}, Y_{t+1+\ell}, \ldots\right)\). Thus \(\widetilde{Y}_{t+\ell}\) replaces each observation in \(\widetilde{Y}_{t}\) by its \(\ell^{t h}\) shifted value \(Y_{t+\ell}\). A time-shift of the event \(A=\left\{\widetilde{Y}_{t} \in G\right\}\) is \(A_{\ell}=\left\{\widetilde{Y}_{t+\ell} \in G\right\}\). Third, an event \(A\) is called invariant if it is unaffected by a time-shift, so that \(A_{\ell}=A\). Thus replacing any history \(\widetilde{Y}_{t}\) with its shifted history \(\widetilde{Y}_{t+\ell}\) doesn’t change the event. Invariant events are rather special. An example of an invariant event is \(A=\left\{\max _{-\infty<t<\infty} Y_{t} \leq 0\right\}\). Fourth, an event \(A\) is called trivial if either \(\mathbb{P}[A]=0\) or \(\mathbb{P}[A]=1\). You can think of trivial events as essentially non-random. Recall, by definition \(Y_{t}\) is ergodic if all invariant events are trivial. This means that any event which is unaffected by a time shift is trivial-is essentially non-random. For example, again consider the invariant event \(A=\left\{\max _{-\infty<t<\infty} Y_{t} \leq 0\right\}\). If \(Y_{t}=Z \sim \mathrm{N}(0,1)\) for all \(t\) then \(\mathbb{P}[A]=\mathbb{P}[Z \leq 0]=0.5\). Since this does not equal 0 or 1 then \(Y_{t}=Z\) is not ergodic. However, if \(Y_{t}\) is i.i.d. \(\mathrm{N}(0,1)\) then \(\mathbb{P}\left[\max _{-\infty<t<\infty} Y_{t} \leq 0\right]=0\). This is a trivial event. For \(Y_{t}\) to be ergodic (it is in this case) all such invariant events must be trivial.

An important technical result is that ergodicity is equivalent to the following property.

Theorem 14.8 A stationary series \(Y_{t} \in \mathbb{R}^{m}\) is ergodic iff for all events \(A\) and \(B\)

\[ \lim _{n \rightarrow \infty} \frac{1}{n} \sum_{\ell=1}^{n} \mathbb{P}\left[A_{\ell} \cap B\right]=\mathbb{P}[A] \mathbb{P}[B] . \]

This result is rather deep so we do not prove it here. See Walters (1982), Corollary 1.14.2, or Davidson (1994), Theorem 14.7. The limit in (14.5) is the Cesàro sum of \(\mathbb{P}\left[A_{\ell} \cap B\right]\). The Theorem of Cesàro Means (Theorem A.4 of Probability and Statistics for Economists) shows that a sufficient condition for (14.5) is that \(\mathbb{P}\left[A_{\ell} \cap B\right] \rightarrow \mathbb{P}[A] \mathbb{P}[B]\) which is known as mixing. Thus mixing implies ergodicity. Mixing, roughly, means that separated events are asymptotically independent. Ergodicity is weaker, only requiring that the events are asymptotically independent “on average”. We discuss mixing in Section 14.12.

14.8 Ergodic Theorem

The ergodic theorem is one of the most famous results in time series theory. There are actually several forms of the theorem, most of which concern almost sure convergence. For simplicity we state the theorem in terms of convergence in probability. Theorem 14.9 Ergodic Theorem.

If \(Y_{t} \in \mathbb{R}^{m}\) is strictly stationary, ergodic, and \(\mathbb{E}\|Y\|<\infty\), then as \(n \rightarrow \infty\),

\[ \mathbb{E}\|\bar{Y}-\mu\| \longrightarrow 0 \]

and

\[ \bar{Y} \underset{p}{\longrightarrow} \mu \]

where \(\mu=\mathbb{E}[Y]\).

The ergodic theorem shows that ergodicity is sufficient for consistent estimation. The moment condition \(\mathbb{E}\|Y\|<\infty\) is the same as in the WLLN for i.i.d. observations.

We now provide a proof of the ergodic theorem for the scalar case under the additional assumption that \(\operatorname{var}[Y]=\sigma^{2}<\infty\). A proof which relaxes this assumption is provided in Section 14.47.

By direct calculation

\[ \operatorname{var}[\bar{Y}]=\frac{1}{n^{2}} \sum_{t=1}^{n} \sum_{j=1}^{n} \gamma(t-j) \]

where \(\gamma(\ell)=\operatorname{cov}\left(Y_{t}, Y_{t+\ell}\right)\). The double sum is over all elements of an \(n \times n\) matrix whose \(t j^{t h}\) element is \(\gamma(t-j)\). The diagonal elements are \(\gamma(0)=\sigma^{2}\), the first off-diagonal elements are \(\gamma(1)\), the second offdiagonal elements are \(\gamma(2)\) and so on. This means that there are precisely \(n\) diagonal elements equalling \(\sigma^{2}, 2(n-1)\) equalling \(\gamma(1)\), etc. Thus the above equals

\[ \begin{aligned} \operatorname{var}[\bar{Y}] &=\frac{1}{n^{2}}\left(n \sigma^{2}+2(n-1) \gamma(1)+2(n-2) \gamma(2)+\cdots+2 \gamma(n-1)\right) \\ &=\frac{\sigma^{2}}{n}+\frac{2}{n} \sum_{\ell=1}^{n}\left(1-\frac{\ell}{n}\right) \gamma(\ell) . \end{aligned} \]

This is a rather intruiging expression. It shows that the variance of the sample mean precisely equals \(\sigma^{2} / n\) (which is the variance of the sample mean under i.i.d. sampling) plus a weighted Cesàro mean of the autocovariances. The latter is zero under i.i.d. sampling but is non-zero otherwise. Theorem \(14.7\) shows that the Cesàro mean of the autocovariances converges to zero. Let \(w_{n \ell}=2\left(\ell / n^{2}\right)\), which satisfy the conditions of the Toeplitz Lemma (Theorem A.5 of Probability and Statistics for Economists). Then

\[ \frac{2}{n} \sum_{\ell=1}^{n}\left(1-\frac{\ell}{n}\right) \gamma(\ell)=\frac{2}{n^{2}} \sum_{\ell=1}^{n-1} \sum_{j=1}^{\ell} \gamma(j)=\sum_{\ell=1}^{n-1} w_{n \ell}\left(\frac{1}{\ell} \sum_{j=1}^{\ell} \gamma(j)\right) \longrightarrow 0 \]

Together, we have shown that (14.8) is \(o(1)\) under ergodicity. Hence \(\operatorname{var}[\bar{Y}] \rightarrow 0\). Markov’s inequality establishes that \(\bar{Y} \underset{p}{\longrightarrow} \mu\).

14.9 Conditioning on Information Sets

In the past few sections we have introduced the concept of the infinite histories. We now consider conditional expectations given infinite histories.

First, some basics. Recall from probability theory that an outcome is an element of a sample space. An event is a set of outcomes. A probability law is a rule which assigns non-negative real numbers to events. When outcomes are infinite histories then events are collections of such histories and a probability law is a rule which assigns numbers to collections of infinite histories.

Now we wish to define a conditional expectation given an infinite past history. Specifically, we wish to define

\[ \mathbb{E}_{t-1}\left[Y_{t}\right]=\mathbb{E}\left[Y_{t} \mid Y_{t-1}, Y_{t-2}, \ldots\right] \text {. } \]

the expected value of \(Y_{t}\) given the history \(\widetilde{Y}_{t-1}=\left(Y_{t-1}, Y_{t-2}, \ldots\right)\) up to time \(t\). Intuitively, \(\mathbb{E}_{t-1}\left[Y_{t}\right]\) is the mean of the conditional distribution, the latter reflecting the information in the history. Mathematically this cannot be defined using (2.6) as the latter requires a joint density for \(\left(Y_{t}, Y_{t-1}, Y_{t-2}, \ldots\right)\) which does not make much sense. Instead, we can appeal to Theorem \(2.13\) which states that the conditional expectation (14.10) exists if \(\mathbb{E}\left|Y_{t}\right|<\infty\) and the probabilities \(\mathbb{P}\left[\widetilde{Y}_{t-1} \in A\right]\) are defined. The latter events are discussed in the previous paragraph. Thus the conditional expectation is well defined.

In this textbook we have avoided measure-theoretic terminology to keep the presentation accessible, and because it is my belief that measure theory is more distracting than helpful. However, it is standard in the time series literature to follow the measure-theoretic convention of writing (14.10) as the conditional expectation given a \(\sigma\)-field. So at the risk of being overly-technical we will follow this convention and write the expectation (14.10) as \(\mathbb{E}\left[Y_{t} \mid \mathscr{F}_{t-1}\right]\) where \(\mathscr{F}_{t-1}=\sigma\left(\widetilde{Y}_{t-1}\right)\) is the \(\sigma\)-field generated by the history \(\widetilde{Y}_{t-1}\). A \(\sigma\)-field (also known as a \(\sigma\)-algebra) is a collection of sets satisfying certain regularity conditions \({ }^{2}\). See Probability and Statistics for Economists, Section 1.14. The \(\sigma\)-field generated by a random variable \(Y\) is the collection of measurable events involving \(Y\). Similarly, the \(\sigma\)-field generated by an infinite history is the collection of measurable events involving this history. Intuitively, \(\mathscr{F}_{t-1}\) contains all the information available in the history \(\widetilde{Y}_{t-1}\). Consequently, economists typically call \(\mathscr{F}_{t-1}\) an information set rather than a \(\sigma\)-field. As I said, in this textbook we endeavor to avoid measure theoretic complications so will follow the economists’ label rather than the probabilists’, but use the latter’s notation as is conventional. To summarize, we will write \(\mathscr{F}_{t}=\sigma\left(Y_{t}, Y_{t-1}, \ldots\right)\) to indicate the information set generated by an infinite history \(\left(Y_{t}, Y_{t-1}, \ldots\right)\), and will write \((14.10)\) as \(\mathbb{E}\left[Y_{t} \mid \mathscr{F}_{t-1}\right]\).

We now describe some properties about information sets \(\mathscr{F}_{t}\).

First, they are nested: \(\mathscr{F}_{t-1} \subset \mathscr{F}\). This means that information accumulates over time. Information is not lost.

Second, it is important to be precise about which variables are contained in the information set. Some economists are sloppy and refer to “the information set at time \(t\)” without specifying which variables are in the information set. It is better to be specific. For example, the information sets \(\mathscr{F}_{1 t}=\) \(\sigma\left(Y_{t}, Y_{t-1}, \ldots\right)\) and \(\mathscr{F}_{2 t}=\sigma\left(Y_{t}, X_{t}, Y_{t-1}, X_{t-1} \ldots\right)\) are distinct even though they are both dated at time \(t\).

Third, the conditional expectations (14.10) follow the law of iterated expectations and the conditioning theorem, thus

\[ \begin{aligned} \mathbb{E}\left[\mathbb{E}\left[Y_{t} \mid \mathscr{F}_{t-1}\right] \mid \mathscr{F}_{t-2}\right] &=\mathbb{E}\left[Y_{t} \mid \mathscr{F}_{t-2}\right] \\ \mathbb{E}\left[\mathbb{E}\left[Y_{t} \mid \mathscr{F}_{t-1}\right]\right] &=\mathbb{E}\left[Y_{t}\right] \end{aligned} \]

and

\[ \mathbb{E}\left[Y_{t-1} Y_{t} \mid \mathscr{F}_{t-1}\right]=Y_{t-1} \mathbb{E}\left[Y_{t} \mid \mathscr{F}_{t-1}\right] \]

14.10 Martingale Difference Sequences

An important concept in economics is unforecastability, meaning that the conditional expectation is the unconditional expectation. This is similar to the properties of a regression error. An unforecastable process is called a martingale difference sequence (MDS).

\({ }^{2} \mathrm{~A} \sigma\)-field contains the universal set, is closed under complementation, and closed under countable unions. A MDS \(e_{t}\) is defined with respect to a specific sequence of information sets \(\mathscr{F}_{t}\). Most commonly the latter are the natural filtration \(\mathscr{F}_{t}=\sigma\left(e_{t}, e_{t-1}, \ldots\right)\) (the past history of \(\left.e_{t}\right)\) but it could be a larger information set. The only requirement is that \(e_{t}\) is adapted to \(\mathscr{F}_{t}\), meaning that \(\mathbb{E}\left[e_{t} \mid \mathscr{F}_{t}\right]=e_{t}\).

Definition 14.4 The process \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a Martingale Difference Sequence (MDS) if \(e_{t}\) is adapted to \(\mathscr{F}_{t}\), EE \(\left|e_{t}\right|<\infty\), and \(\mathbb{E}\left[e_{t} \mid \mathscr{F}_{t-1}\right]=0\).

In words, a MDS \(e_{t}\) is unforecastable in the mean. It is useful to notice that if we apply iterated expectations \(\mathbb{E}\left[e_{t}\right]=\mathbb{E}\left[\mathbb{E}\left[e_{t} \mid \mathscr{F}_{t-1}\right]\right]=0\). Thus a MDS is mean zero.

The definition of a MDS requires the information sets \(\mathscr{F}_{t}\) to contain the information in \(e_{t}\), but is broader in the sense that it can contain more information. When no explicit definition is given it is standard to assume that \(\mathscr{F}_{t}\) is the natural filtration. However, it is best to explicitly specify the information sets so there is no confusion.

The term “martingale difference sequence” refers to the fact that the summed process \(S_{t}=\sum_{j=1}^{t} e_{j}\) is a martingale and \(e_{t}\) is its first-difference. A martingale \(S_{t}\) is a process which has a finite mean and \(\mathbb{E}\left[S_{t} \mid \mathscr{F}_{t-1}\right]=S_{t-1}\)

If \(e_{t}\) is i.i.d. and mean zero it is a MDS but the reverse is not the case. To see this, first suppose that \(e_{t}\) is i.i.d. and mean zero. It is then independent of \(\mathscr{F}_{t-1}=\sigma\left(e_{t-1}, e_{t-2}, \ldots\right)\) so \(\mathbb{E}\left[e_{t} \mid \mathscr{F}_{t-1}\right]=\mathbb{E}\left[e_{t}\right]=0\). Thus an i.i.d. shock is a MDS as claimed.

To show that the reverse is not true let \(u_{t}\) be i.i.d. \(\mathrm{N}(0,1)\) and set

\[ e_{t}=u_{t} u_{t-1} \]

By the conditioning theorem

\[ \mathbb{E}\left[e_{t} \mid \mathscr{F}_{t-1}\right]=u_{t-1} \mathbb{E}\left[u_{t} \mid \mathscr{F}_{t-1}\right]=0 \]

so \(e_{t}\) is a MDS. The process (14.11) is not, however, i.i.d. One way to see this is to calculate the first autocovariance of \(e_{t}^{2}\), which is

\[ \begin{aligned} \operatorname{cov}\left(e_{t}^{2}, e_{t-1}^{2}\right) &=\mathbb{E}\left[e_{t}^{2} e_{t-1}^{2}\right]-\mathbb{E}\left[e_{t}^{2}\right] \mathbb{E}\left[e_{t-1}^{2}\right] \\ &=\mathbb{E}\left[u_{t}^{2}\right] \mathbb{E}\left[u_{t-1}^{4}\right] \mathbb{E}\left[u_{t-2}^{2}\right]-1 \\ &=2 \neq 0 . \end{aligned} \]

Since the covariance is non-zero, \(e_{t}\) is not an independent sequence. Thus \(e_{t}\) is a MDS but not i.i.d.

An important property of a square integrable MDS is that it is serially uncorrelated. To see this, observe that by iterated expectations, the conditioning theorem, and the definition of a MDS, for \(k>0\),

\[ \begin{aligned} \operatorname{cov}\left(e_{t}, e_{t-k}\right) &=\mathbb{E}\left[e_{t} e_{t-k}\right] \\ &=\mathbb{E}\left[\mathbb{E}\left[e_{t} e_{t-k} \mid \mathscr{F}_{t-1}\right]\right] \\ &=\mathbb{E}\left[\mathbb{E}\left[e_{t} \mid \mathscr{F}_{t-1}\right] e_{t-k}\right] \\ &=\mathbb{E}\left[0 e_{t-k}\right] \\ &=0 . \end{aligned} \]

Thus the autocovariances and autocorrelations are zero. A process that is serially uncorrelated, however, is not necessarily a MDS. Take the process \(e_{t}=u_{t}+\) \(u_{t-1} u_{t-2}\) with \(u_{t}\) i.i.d. \(\mathrm{N}(0,1)\). The process \(e_{t}\) is not a MDS because \(\mathbb{E}\left[e_{t} \mid \mathscr{F}_{t-1}\right]=u_{t-1} u_{t-2} \neq 0\). However,

\[ \begin{aligned} \operatorname{cov}\left(e_{t}, e_{t-1}\right) &=\mathbb{E}\left[e_{t} e_{t-1}\right] \\ &=\mathbb{E}\left[\left(u_{t}+u_{t-1} u_{t-2}\right)\left(u_{t-1}+u_{t-2} u_{t-3}\right)\right] \\ &=\mathbb{E}\left[u_{t} u_{t-1}+u_{t} u_{t-2} u_{t-3}+u_{t-1}^{2} u_{t-2}+u_{t-1} u_{t-2}^{2} u_{t-3}\right] \\ &=\mathbb{E}\left[u_{t}\right] \mathbb{E}\left[u_{t-1}\right]+\mathbb{E}\left[u_{t}\right] \mathbb{E}\left[u_{t-2}\right] \mathbb{E}\left[u_{t-3}\right] \\ &+\mathbb{E}\left[u_{t-1}^{2}\right] \mathbb{E}\left[u_{t-2}\right]+\mathbb{E}\left[u_{t-1}\right] \mathbb{E}\left[u_{t-2}^{2}\right] \mathbb{E}\left[u_{t-3}\right] \\ &=0 . \end{aligned} \]

Similarly, \(\operatorname{cov}\left(e_{t}, e_{t-k}\right)=0\) for \(k \neq 0\). Thus \(e_{t}\) is serially uncorrelated. We have proved the following.

Theorem 14.10 If \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a MDS and \(\mathbb{E}\left[e_{t}^{2}\right]<\infty\) then \(e_{t}\) is serially uncorrelated.

Another important special case is a homoskedastic martingale difference sequence.

Definition 14.5 The MDS \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a Homoskedastic Martingale Difference Sequence if \(\mathbb{E}\left[e_{t}^{2} \mid \mathscr{F}_{t-1}\right]=\sigma^{2}\).

A homoskedastic MDS should more properly be called a conditionally homoskedastic MDS because the property concerns the conditional distribution rather than the unconditional. That is, any strictly stationary MDS satisfies a constant variance \(\mathbb{E}\left[e_{t}^{2}\right]\) but only a homoskedastic MDS has a constant conditional variance \(\mathbb{E}\left[e_{t}^{2} \mid \mathscr{F}_{t-1}\right]\)

A homoskedatic MDS is analogous to a conditionally homoskedastic regression error. It is intermediate between a MDS and an i.i.d. sequence. Specifically, a square integrable and mean zero i.i.d. sequence is a homoskedastic MDS and the latter is a MDS.

The reverse is not the case. First, a MDS is not necessarily conditionally homoskedastic. Consider the example \(e_{t}=u_{t} u_{t-1}\) given previously which we showed is a MDS. It is not conditionally homoskedastic, however, because

\[ \mathbb{E}\left[e_{t}^{2} \mid \mathscr{F}_{t-1}\right]=u_{t-1}^{2} \mathbb{E}\left[u_{t}^{2} \mid \mathscr{F}_{t-1}\right]=u_{t-1}^{2} \]

which is time-varying. Thus this MDS \(e_{t}\) is conditionally heteroskedastic. Second, a homoskedastic MDS is not necessarily i.i.d. Consider the following example. Set \(e_{t}=\sqrt{1-2 / \eta_{t-1}} T_{t}\), where \(T_{t}\) is distributed as student \(t\) with degree of freedom parameter \(\eta_{t-1}=2+e_{t-1}^{2}\). This is scaled so that \(\mathbb{E}\left[e_{t} \mid \mathscr{F}_{t-1}\right]=0\) and \(\mathbb{E}\left[e_{t}^{2} \mid \mathscr{F}_{t-1}\right]=1\), and is thus a homoskedastic MDS. The conditional distribution of \(e_{t}\) depends on \(e_{t-1}\) through the degree of freedom parameter. Hence \(e_{t}\) is not an independent sequence.

One way to think about the difference between MDS and i.i.d. shocks is in terms of forecastability. An i.i.d. process is fully unforecastable in that no function of an i.i.d. process is forecastable. A MDS is unforecastable in the mean but other moments may be forecastable.

As we mentioned above, the definition of a MDS \(e_{t}\) allows for conditional heteroskedasticity, meaning that the conditional variance \(\sigma_{t}^{2}=\mathbb{E}\left[e_{t}^{2} \mid \mathscr{F}_{t-1}\right]\) may be time-varying. In financial econometrics there are many models for conditional heteroskedasticity, including autoregressive conditional heteroskedasticity (ARCH), generalized ARCH (GARCH), and stochastic volatility. A good reference for this class of models is Campbell, Lo, and MacKinlay (1997).

14.11 CLT for Martingale Differences

We are interested in an asymptotic approximation for the distribution of the normalized sample mean

\[ S_{n}=\frac{1}{\sqrt{n}} \sum_{t=1}^{n} u_{t} \]

where \(u_{t}\) is mean zero with variance \(\mathbb{E}\left[u_{t} u_{t}^{\prime}\right]=\Sigma<\infty\). In this section we present a CLT for the case where \(u_{t}\) is a martingale difference sequence.

Theorem 14.11 MDS CLT If \(u_{t}\) is a strictly stationary and ergodic martingale difference sequence and \(\mathbb{E}\left[u_{t} u_{t}^{\prime}\right]=\Sigma<\infty\), then as \(n \rightarrow \infty\),

\[ S_{n}=\frac{1}{\sqrt{n}} \sum_{t=1}^{n} u_{t} \underset{d}{\longrightarrow} \mathrm{N}(0, \Sigma) \text {. } \]

The conditions for Theorem \(14.11\) are similar to the Lindeberg-Lévy CLT. The only difference is that the i.i.d. assumption has been replaced by the assumption of a strictly stationarity and ergodic MDS.

The proof of Theorem \(14.11\) is technically advanced so we do not present the full details, but instead refer readers to Theorem \(3.2\) of Hall and Heyde (1980) or Theorem \(25.3\) of Davidson (1994) (which are more general than Theorem 14.11, not requiring strict stationarity). To illustrate the role of the MDS assumption we give a sketch of the proof in Section 14.47.

14.12 Mixing

For many results, including a CLT for correlated (non-MDS) series, we need a stronger restriction on the dependence between observations than ergodicity.

Recalling the property (14.5) of ergodic sequences we can measure the dependence between two events \(A\) and \(B\) by the discrepancy

\[ \alpha(A, B)=|\mathbb{P}[A \cap B]-\mathbb{P}[A] \mathbb{P}[B]| . \]

This equals 0 when \(A\) and \(B\) are independent and is positive otherwise. In general, \(\alpha(A, B)\) can be used to measure the degree of dependence between the events \(A\) and \(B\).

Now consider the two information sets ( \(\sigma\)-fields)

\[ \begin{aligned} \mathscr{F}_{-\infty}^{t} &=\sigma\left(\ldots, Y_{t-1}, Y_{t}\right) \\ \mathscr{F}_{t}^{\infty} &=\sigma\left(Y_{t}, Y_{t+1}, \ldots\right) . \end{aligned} \]

The first is the history of the series up until period \(t\) and the second is the history of the series starting in period \(t\) and going forward. We then separate the information sets by \(\ell\) periods, that is, take \(\mathscr{F}_{-\infty}^{t-\ell}\) and \(\mathscr{F}_{t}^{\infty}\). We can measure the degree of dependence between the information sets by taking all events in each and then taking the largest discrepancy (14.13). This is

\[ \alpha(\ell)=\sup _{A \in \mathscr{F}_{-\infty}^{t-\ell}, B \in \mathscr{F}_{t}^{\infty}} \alpha(A, B) . \]

The constants \(\alpha(\ell)\) are known as the strong mixing coefficients. We say that \(Y_{t}\) is strong mixing if \(\alpha(\ell) \rightarrow 0\) as \(\ell \rightarrow \infty\). This means that as the time separation increases between the information sets, the degree of dependence decreases, eventually reaching independence.

From the Theorem of Cesàro Means (Theorem A.4 of Probability and Statistics for Economists), strong mixing implies (14.5) which is equivalent to ergodicity. Thus a mixing process is ergodic.

An intuition concerning mixing can be colorfully illustrated by the following example due to Halmos (1956). A martini is a drink consisting of a large portion of gin and a small part of vermouth. Suppose that you pour a serving of gin into a martini glass, pour a small amount of vermouth on top, and then stir the drink with a swizzle stick. If your stirring process is mixing, with each turn of the stick the vermouth will become more evenly distributed throughout the gin, and asymptotically (as the number of stirs tends to infinity) the vermouth and gin distributions will become independent \({ }^{3}\). If so, this is a mixing process.

For applications, mixing is often useful when we can characterize the rate at which the coefficients \(\alpha(\ell)\) decline to zero. There are two types of conditions which are seen in asymptotic theory: rates and summation. Rate conditions take the form \(\alpha(\ell)=O\left(\ell^{-r}\right)\) or \(\alpha(\ell)=o\left(\ell^{-r}\right)\). Summation conditions take the form \(\sum_{\ell=0}^{\infty} \alpha(\ell)^{r}<\infty\) or \(\sum_{\ell=0}^{\infty} \ell^{s} \alpha(\ell)^{r}<\infty\).

There are alternative measures of dependence beyond (14.13) and many have been proposed. Strong mixing is one of the weakest (and thus embraces a wide set of time series processes) but is insufficiently strong for some applications. Another popular dependence measure is known as absolute regularity or \(\beta\)-mixing. The \(\beta\)-mixing coefficients are

\[ \beta(\ell)=\sup _{A \in \mathscr{F}_{t}^{\infty}} \mathbb{E}\left|\mathbb{P}\left[A \mid \mathscr{F}_{-\infty}^{t-\ell}\right]-\mathbb{P}[A]\right| . \]

Absolute regularity is stronger than strong mixing in the sense that \(\beta(\ell) \rightarrow 0\) implies \(\alpha(\ell) \rightarrow 0\), and rate conditions for the \(\beta\)-mixing coefficients imply the same rates for the strong mixing coefficients.

One reason why mixing is useful for applications is that it is preserved by transformations.

Theorem 14.12 If \(Y_{t}\) has mixing coefficients \(\alpha_{Y}(\ell)\) and \(X_{t}=\) \(\phi\left(Y_{t}, Y_{t-1}, Y_{t-2}, \ldots, Y_{t-q}\right)\) then \(X_{t}\) has mixing coefficients \(\alpha_{X}(\ell) \leq \alpha_{Y}(\ell-q)\) (for \(\ell \geq q)\). The coefficients \(\alpha_{X}(\ell)\) satisfy the same summation and rate conditions as \(\alpha_{Y}(\ell)\).

A limitation of the above result is that it is confined to a finite number of lags unlike the transformation results for stationarity and ergodicity.

Mixing can be a useful tool because of the following inequalities.

\({ }^{3}\) Of course, if you really make an asymptotic number of stirs you will never finish stirring and you won’t be able to enjoy the martini. Hence in practice it is advised to stop stirring before the number of stirs reaches infinity. Theorem 14.13 Let \(\mathscr{F}_{-\infty}^{t}\) and \(\mathscr{F}_{t}^{\infty}\) be constructed from the pair \(\left(X_{t}, Z_{t}\right)\).

- If \(\left|X_{t}\right| \leq C_{1}\) and \(\left|Z_{t}\right| \leq C_{2}\) then

\[ \left|\operatorname{cov}\left(X_{t-\ell}, Z_{t}\right)\right| \leq 4 C_{1} C_{2} \alpha(\ell) . \]

1. If \(\mathbb{E}\left|X_{t}\right|^{r}<\infty\) and \(\mathbb{E}\left|Z_{t}\right|^{q}<\infty\) for \(1 / r+1 / q<1\) then

\[ \left|\operatorname{cov}\left(X_{t-\ell}, Z_{t}\right)\right| \leq 8\left(\mathbb{E}\left|X_{t}\right|^{r}\right)^{1 / r}\left(\mathbb{E}\left|Z_{t}\right|^{q}\right)^{1 / q} \alpha(\ell)^{1-1 / r-1 / q} . \]

1. If \(\mathbb{E}\left[Z_{t}\right]=0\) and \(\mathbb{E}\left|Z_{t}\right|^{r}<\infty\) for \(r \geq 1\) then

\[ \mathbb{E}\left|\mathbb{E}\left[Z_{t} \mid \mathscr{F}_{-\infty}^{t-\ell}\right]\right| \leq 6\left(\mathbb{E}\left|Z_{t}\right|^{r}\right)^{1 / r} \alpha(\ell)^{1-1 / r} . \]

The proof is given in Section 14.47. Our next result follows fairly directly from the definition of mixing.

Theorem 14.14 If \(Y_{t}\) is i.i.d. then it is strong mixing and ergodic.

14.14 Linear Projection

In Chapter 2 we extensively studied the properties of linear projection models. In the context of stationary time series we can use similar tools. An important extension is to allow for projections onto infinite dimensional random vectors. For this analysis we assume that \(Y_{t}\) is covariance stationary.

Recall that when \((Y, X)\) have a joint distribution with bounded variances the linear projection of \(Y\) onto \(X\) (the best linear predictor) is the minimizer of \(S(\beta)=\mathbb{E}\left[\left(Y-\beta^{\prime} X\right)^{2}\right]\) and has the solution

\[ \mathscr{P}[Y \mid X]=X^{\prime}\left(\mathbb{E}\left[X X^{\prime}\right]\right)^{-1} \mathbb{E}[X Y] \text {. } \]

This projection is unique and has a unique projection error \(e=Y-\mathscr{P}[Y \mid X]\).

This idea extends to any Hilbert space including the infinite past history \(\widetilde{Y}_{t-1}=\left(\ldots, Y_{t-2}, Y_{t-1}\right)\). From the projection theorem for Hilbert spaces (see Theorem 2.3.1 of Brockwell and Davis (1991)) the projection \(\mathscr{P}_{t-1}\left[Y_{t}\right]=\mathscr{P}\left[Y_{t} \mid \tilde{Y}_{t-1}\right]\) of \(Y_{t}\) onto \(\widetilde{Y}_{t-1}\) is unique and has a unique projection error

\[ e_{t}=Y_{t}-\mathscr{P}_{t-1}\left[Y_{t}\right] . \]

The projection error is mean zero, has finite variance \(\sigma^{2}=\mathbb{E}\left[e_{t}^{2}\right] \leq \mathbb{E}\left[Y_{t}^{2}\right]<\infty\), and is serially uncorrelated. By Theorem 14.2, if \(Y_{t}\) is strictly stationary then \(\mathscr{P}_{t-1}\left[Y_{t}\right]\) and \(e_{t}\) are strictly stationary.

The property (14.18) implies that the projection errors are serially uncorrelated. We state these results formally.

Theorem 14.16 If \(Y_{t} \in \mathbb{R}\) is covariance stationary it has the projection equation

\[ Y_{t}=\mathscr{P}_{t-1}\left[Y_{t}\right]+e_{t} . \]

The projection error \(e_{t}\) satisfies

\[ \begin{aligned} \mathbb{E}\left[e_{t}\right] &=0 \\ \mathbb{E}\left[e_{t-j} e_{t}\right] &=0 \quad j \geq 1 \end{aligned} \]

and

\[ \sigma^{2}=\mathbb{E}\left[e_{t}^{2}\right] \leq \mathbb{E}\left[Y_{t}^{2}\right]<\infty . \]

If \(Y_{t}\) is strictly stationary then \(e_{t}\) is strictly stationary.

14.15 White Noise

The projection error \(e_{t}\) is mean zero, has a finite variance, and is serially uncorrelated. This describes what is known as a white noise process.

Definition 14.6 The process \(e_{t}\) is white noise if \(\mathbb{E}\left[e_{t}\right]=0, \mathbb{E}\left[e_{t}^{2}\right]=\sigma^{2}<\infty\), and \(\operatorname{cov}\left(e_{t}, e_{t-k}\right)=0\) for \(k \neq 0\).

A MDS is white noise (Theorem 14.10) but the reverse is not true as shown by the example \(e_{t}=\) \(u_{t}+u_{t-1} u_{t-2}\) given in Section 14.10, which is white noise but not a MDS. Therefore, the following types of shocks are nested: i.i.d., MDS, and white noise, with i.i.d. being the most narrow class and white noise the broadest. It is helpful to observe that a white noise process can be conditionally heteroskedastic as the conditional variance is unrestricted.

14.16 The Wold Decomposition

In Section \(14.14\) we showed that a covariance stationary process has a white noise projection error. This result can be used to express the series as an infinite linear function of the projection errors. This is a famous result known as the Wold decomposition. Theorem 14.17 The Wold Decomposition If \(Y_{t}\) is covariance stationary and \(\sigma^{2}>0\) where \(\sigma^{2}\) is the projection error variance (14.19), then \(Y_{t}\) has the linear representation

\[ Y_{t}=\mu_{t}+\sum_{j=0}^{\infty} b_{j} e_{t-j} \]

where \(e_{t}\) are the white noise projection errors (14.18), \(b_{0}=1\),

\[ \sum_{j=1}^{\infty} b_{j}^{2}<\infty, \]

and

\[ \mu_{t}=\lim _{m \rightarrow \infty} \mathscr{P}_{t-m}\left[Y_{t}\right] \]

The Wold decomposition shows that \(Y_{t}\) can be written as a linear function of the white noise projection errors plus \(\mu_{t}\). The infinite sum in (14.20) is also known as a linear process. The Wold decomposition is a foundational result for linear time series analysis. Since any covariance stationary process can be written in this format this justifies linear models as approximations.

The series \(\mu_{t}\) is the projection of \(Y_{t}\) on the history from the infinite past. It is the part of \(Y_{t}\) which is perfectly predictable from its past values and is called the deterministic component. In most cases \(\mu_{t}=\mu\), the unconditional mean of \(Y_{t}\). However, it is possible for stationary processes to have more substantive deterministic components. An example is

\[ \mu_{t}=\left\{\begin{array}{cc} (-1)^{t} & \text { with probability } 1 / 2 \\ (-1)^{t+1} & \text { with probability } 1 / 2 . \end{array}\right. \]

This series is strictly stationary, mean zero, and variance one. However, it is perfectly predictable given the previous history as it simply oscillates between \(-1\) and 1 .

In practical applied time series analysis, deterministic components are typically excluded by assumption. We call a stationary time series non-deterministic \({ }^{4}\) if \(\mu_{t}=\mu\), a constant. In this case the Wold decomposition has a simpler form.

Theorem 14.18 If \(Y_{t}\) is covariance stationary and non-deterministic then \(Y_{t}\) has the linear representation

\[ Y_{t}=\mu+\sum_{j=0}^{\infty} b_{j} e_{t-j}, \]

where \(b_{j}\) satisfy (14.21) and \(e_{t}\) are the white noise projection errors (14.18).

A limitation of the Wold decomposition is the restriction to linearity. While it shows that there is a valid linear approximation, it may be that a nonlinear model provides a better approximation.

For a proof of Theorem \(14.17\) see Section 14.47.

\({ }^{4}\) Most authors define purely non-deterministic as the case \(\mu_{t}=0\). We allow for a non-zero mean so to accomodate practical time series applications.

14.17 Lag Operator

An algebraic construct which is useful for the analysis of time series models is the lag operator.

Definition 14.7 The lag operator L satisfies L \(Y_{t}=Y_{t-1}\).

Defining \(\mathrm{L}^{2}=\mathrm{LL}\), we see that \(\mathrm{L}^{2} Y_{t}=\mathrm{L} Y_{t-1}=Y_{t-2}\). In general, \(\mathrm{L}^{k} Y_{t}=Y_{t-k}\).

Using the lag operator the Wold decomposition can be written in the format

\[ \begin{aligned} Y_{t} &=\mu+b_{0} e_{t}+b_{1} \mathrm{~L} e_{t}+b_{2} \mathrm{~L}^{2} e_{t}+\cdots \\ &=\mu+\left(b_{0}+b_{1} \mathrm{~L}+b_{2} \mathrm{~L}^{2}+\cdots\right) e_{t} \\ &=\mu+b(\mathrm{~L}) e_{t} \end{aligned} \]

where \(b(z)=b_{0}+b_{1} z+b_{2} z^{2}+\cdots\) is an infinite-order polynomial. The expression \(Y_{t}=\mu+b(\mathrm{~L}) e_{t}\) is compact way to write the Wold representation.

14.18 Autoregressive Wold Representation

From Theorem 14.16, \(Y_{t}\) satisfies a projection onto its infinite past. Theorem \(14.18\) shows that this projection equals a linear function of the lagged projection errors. An alternative is to write the projection as a linear function of the lagged \(Y_{t}\). It turns out that to obtain a unique and convergent representation we need a strengthening of the conditions.

Theorem 14.19 If \(Y_{t}\) is covariance stationary, non-deterministic, with Wold representation \(Y_{t}=b(\mathrm{~L}) e_{t}\), such that \(|b(z)| \geq \delta>0\) for all complex \(|z| \leq 1\), and for some integer \(s \geq 0\) the Wold coefficients satisfy \(\sum_{j=0}^{\infty}\left(\sum_{k=0}^{\infty} k^{s} b_{j+k}\right)^{2}<\infty\), then \(Y_{t}\) has the representation

\[ Y_{t}=\mu+\sum_{j=1}^{\infty} a_{j} Y_{t-j}+e_{t} \]

for some coefficients \(\mu\) and \(a_{j}\). The coefficients satisfy \(\sum_{k=0}^{\infty} k^{s}\left|a_{k}\right|<\infty\) so (14.23) is convergent.

Equation (14.23) is known as an infinite-order autoregressive representation with autoregressive coefficients \(a_{j}\).

A solution to the equation \(b(z)=0\) is a root of the polynomial \(b(z)\). The assumption \(|b(z)|>0\) for \(|z| \leq 1\) means that the roots of \(b(z)\) lie outside the unit circle \(|z|=1\) (the circle in the complex plane with radius one). Theorem \(14.19\) makes the stronger restriction that \(|b(z)|\) is bounded away from 0 for \(z\) on or within the unit circle. The need for this strengthening is less intuitive but essentially excludes the possibility of an infinite number of roots outside but arbitrarily close to the unit circle. The summability assumption on the Wold coefficients ensures convergence of the autoregressive coefficients \(a_{j}\). To understand the restriction on the roots of \(b(z)\) consider the simple case \(b(z)=1-b_{1} z\). (Below we call this a MA(1) model.) The requirement \(|b(z)| \geq \delta\) for \(|z| \leq 1\) means \({ }^{5}\left|b_{1}\right| \leq 1-\delta\). Thus the assumption in Theorem \(14.19\) bounds the coefficient strictly below 1 . Now consider an infinite polynomial case \(b(z)=\prod_{j=1}^{\infty}\left(1-b_{j} z\right)\). The assumption in Theorem \(14.19\) requires \(\sup _{j}\left|b_{j}\right|<1\).

Theorem \(14.19\) is attributed to Wiener and Masani (1958). For a recent treatment and proof see Corollary 6.1.17 of Politis and McElroy (2020). These authors (as is common in the literature) state their assumptions differently than we do in Theorem 14.19. First, instead of the condition on \(b(z)\) they bound from below the spectral density function \(f(\lambda)\) of \(Y_{t}\). We do not define the spectral density in this text so we restate their condition in terms of the linear process polynomial \(b(z)\). Second, instead of the condition on the Wold coefficients they require that the autocovariances satisfy \(\sum_{k=0}^{\infty} k^{s}|\gamma(k)|<\infty\). This is implied by our stated summability condition on the \(b_{j}\) (using the expression for \(\gamma(k)\) in Section \(14.21\) below and simplifying).

14.19 Linear Models

In the previous two sections we showed that any non-deterministic covariance stationary time series has the projection representation

\[ Y_{t}=\mu+\sum_{j=0}^{\infty} b_{j} e_{t-j} \]

and under a restriction on the projection coefficients satisfies the autoregressive representation

\[ Y_{t}=\mu+\sum_{j=1}^{\infty} a_{j} Y_{t-j}+e_{t} . \]

In both equations the errors \(e_{t}\) are white noise projection errors. These representations help us understand that linear models can be used as approximations for stationary time series.

For the next several sections we reverse the analysis. We will assume a specific linear model and then study the properties of the resulting time series. In particular we will be seeking conditions under which the stated process is stationary. This helps us understand the properties of linear models. Throughout, we assume that the error \(e_{t}\) is a strictly stationary and ergodic white noise process. This allows as a special case the stronger assumption that \(e_{t}\) is i.i.d. but is less restrictive. In particular, it allows for conditional heteroskedasticity.

14.20 Moving Average Processes

The first-order moving average process, denoted MA(1), is

\[ Y_{t}=\mu+e_{t}+\theta e_{t-1} \]

where \(e_{t}\) is a strictly stationary and ergodic white noise process with var \(\left[e_{t}\right]=\sigma^{2}\). The model is called a “moving average” because \(Y_{t}\) is a weighted average of the shocks \(e_{t}\) and \(e_{t-1}\).

\({ }^{5}\) To see this, focus on the case \(b_{1} \geq 0\). The requirement \(\left|1-b_{1} z\right| \geq \delta\) for \(|z| \leq 1\) means \(\min _{|z| \leq 1}\left|1-b_{1} z\right|=1-b_{1} \geq \delta\) or \(b_{1} \leq 1-\delta\). It is straightforward to calculate that a MA(1) has the following moments.

\[ \begin{aligned} \mathbb{E}\left[Y_{t}\right] &=\mu \\ \operatorname{var}\left[Y_{t}\right] &=\left(1+\theta^{2}\right) \sigma^{2} \\ \gamma(1) &=\theta \sigma^{2} \\ \rho(1) &=\frac{\theta}{1+\theta^{2}} \\ \gamma(k) &=\rho(k)=0, \quad k \geq 2 . \end{aligned} \]

Thus the MA(1) process has a non-zero first autocorrelation with the remainder zero.

A MA(1) process with \(\theta \neq 0\) is serially correlated with each pair of adjacent observations \(\left(Y_{t-1}, Y_{t}\right)\) correlated. If \(\theta>0\) the pair are positively correlated, while if \(\theta<0\) they are negatively correlated. The serial correlation is limited in that observations separated by multiple periods are mutually independent.

The \(\mathbf{q}^{t h}\)-order moving average process, denoted \(\mathbf{M A}(\mathbf{q})\), is

\[ Y_{t}=\mu+\theta_{0} e_{t}+\theta_{1} e_{t-1}+\theta_{2} e_{t-2}+\cdots+\theta_{q} e_{t-q} \]

where \(\theta_{0}=1\). It is straightforward to calculate that a MA(q) has the following moments.

\[ \begin{aligned} \mathbb{E}\left[Y_{t}\right] &=\mu \\ \operatorname{var}\left[Y_{t}\right] &=\left(\sum_{j=0}^{q} \theta_{j}^{2}\right) \sigma^{2} \\ \gamma(k) &=\left(\sum_{j=0}^{q-k} \theta_{j+k} \theta_{j}\right) \sigma^{2}, \quad k \leq q \\ \rho(k) &=\frac{\sum_{j=0}^{q-k} \theta_{j+k} \theta_{j}}{\sum_{j=0}^{q} \theta_{j}^{2}} \\ \gamma(k) &=\rho(k)=0, \quad k>q . \end{aligned} \]

In particular, a MA(q) has \(q\) non-zero autocorrelations with the remainder zero.

A MA(q) process \(Y_{t}\) is strictly stationary and ergodic.

A MA(q) process with moderately large \(q\) can have considerably more complicated dependence relations than a MA(1) process. One specific pattern which can be induced by a MA process is smoothing. Suppose that the coefficients \(\theta_{j}\) all equal 1. Then \(Y_{t}\) is a smoothed version of the shocks \(e_{t}\).

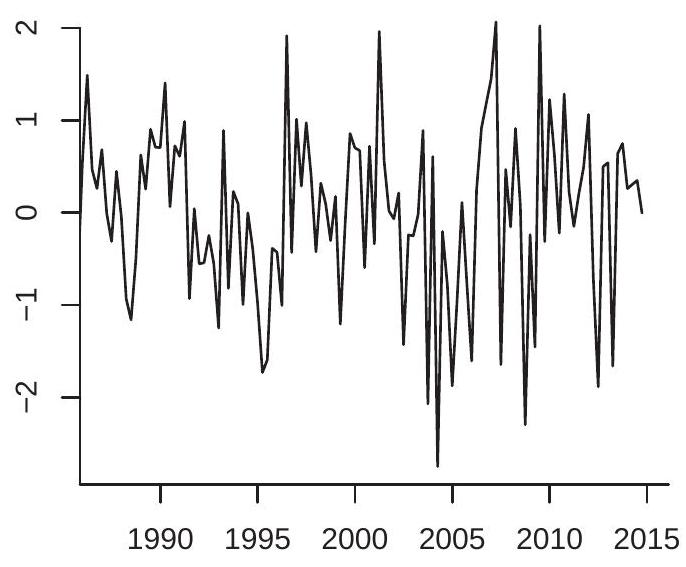

To illustrate, Figure \(14.3(\) a) displays a plot of a simulated white noise (i.i.d. \(\mathrm{N}(0,1)\) ) process with \(n=120\) observations. Figure 14.3(b) displays a plot of a MA(8) process constructed with the same innovations, with \(\theta_{j}=1, j=1, \ldots, 8\). You can see that the white noise has no predictable behavior while the \(\mathrm{MA}(8)\) is smooth.

14.21 Infinite-Order Moving Average Process

An infinite-order moving average process, denoted MA( \(\infty\) ), also known as a linear process, is

\[ Y_{t}=\mu+\sum_{j=0}^{\infty} \theta_{j} e_{t-j} \]

- White Noise

.jpg)

- MA(8)

Figure 14.3: White Noise and MA(8)

where \(e_{t}\) is a strictly stationary and ergodic white noise process, \(\operatorname{var}\left[e_{t}\right]=\sigma^{2}\), and \(\sum_{j=0}^{\infty}\left|\theta_{j}\right|<\infty\). From Theorem 14.6, \(Y_{t}\) is strictly stationary and ergodic. A linear process has the following moments:

\[ \begin{aligned} \mathbb{E}\left[Y_{t}\right] &=\mu \\ \operatorname{var}\left[Y_{t}\right] &=\left(\sum_{j=0}^{\infty} \theta_{j}^{2}\right) \sigma^{2} \\ \gamma(k) &=\left(\sum_{j=0}^{\infty} \theta_{j+k} \theta_{j}\right) \sigma^{2} \\ \rho(k) &=\frac{\sum_{j=0}^{\infty} \theta_{j+k} \theta_{j}}{\sum_{j=0}^{\infty} \theta_{j}^{2}} . \end{aligned} \]

14.22 First-Order Autoregressive Process

The first-order autoregressive process, denoted AR(1), is

\[ Y_{t}=\alpha_{0}+\alpha_{1} Y_{t-1}+e_{t} \]

where \(e_{t}\) is a strictly stationary and ergodic white noise process with var \(\left[e_{t}\right]=\sigma^{2}\). The AR(1) model is probably the single most important model in econometric time series analysis.

As a simple motivating example let \(Y_{t}\) be is the employment level (number of jobs) in an economy. Suppose that a fixed fraction \(1-\alpha_{1}\) of employees lose their job and a random number \(u_{t}\) of new employees are hired each period. Setting \(\alpha_{0}=\mathbb{E}\left[u_{t}\right]\) and \(e_{t}=u_{t}-\alpha_{0}\), this implies the law of motion (14.25).

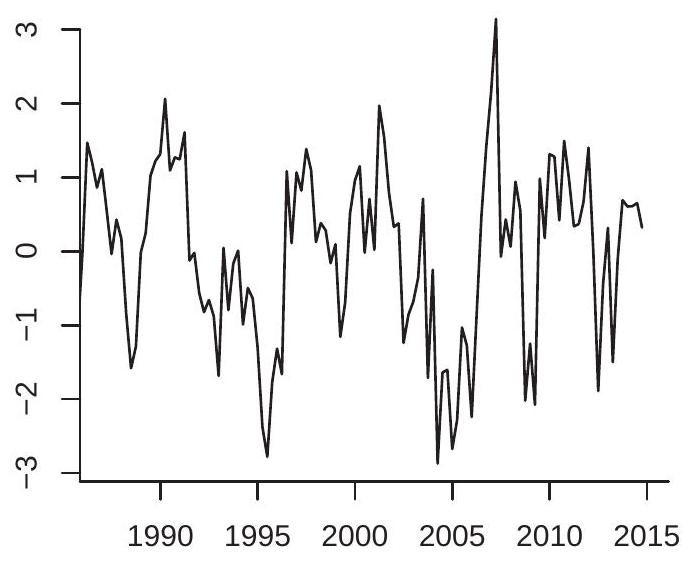

To illustrate the behavior of the AR(1) process, Figure \(14.4\) plots two simulated AR(1) processes. Each is generated using the white noise process \(e_{t}\) displayed in Figure 14.3(a). The plot in Figure 14.4(a) sets

- \(\operatorname{AR}(1)\) with \(\alpha_{1}=0.5\)

.jpg)

- \(\operatorname{AR}(1)\) with \(\alpha_{1}=0.95\)

Figure 14.4: AR(1) Processes

\(\alpha_{1}=0.5\) and the plot in Figure 14.4(b) sets \(\alpha_{1}=0.95\). You can see how both are more smooth than the white noise process and that the smoothing increases with \(\alpha\).

Our first goal is to obtain conditions under which (14.25) is stationary. We can do so by showing that \(Y_{t}\) can be written as a convergent linear process and then appealing to Theorem 14.5. To find a linear process representation for \(Y_{t}\) we can use backward recursion. Notice that \(Y_{t}\) in (14.25) depends on its previous value \(Y_{t-1}\). If we take (14.25) and lag it one period we find \(Y_{t-1}=\alpha_{0}+\alpha_{1} Y_{t-2}+e_{t-1}\). Substituting this into (14.25) we find

\[ \begin{aligned} Y_{t} &=\alpha_{0}+\alpha_{1}\left(\alpha_{0}+\alpha_{1} Y_{t-2}+e_{t-1}\right)+e_{t} \\ &=\alpha_{0}+\alpha_{1} \alpha_{0}+\alpha_{1}^{2} Y_{t-2}+\alpha_{1} e_{t-1}+e_{t} . \end{aligned} \]

Similarly we can lag (14.31) twice to find \(Y_{t-2}=\alpha_{0}+\alpha_{1} Y_{t-3}+e_{t-2}\) and can be used to substitute out \(Y_{t-2}\). Continuing recursively \(t\) times, we find

\[ \begin{aligned} Y_{t} &=\alpha_{0}\left(1+\alpha_{1}+\alpha_{1}^{2}+\cdots+\alpha_{1}^{t-1}\right)+\alpha_{1}^{t} Y_{0}+\alpha_{1}^{t-1} e_{1}+\alpha_{1}^{t-2} e_{2}+\cdots+e_{t} \\ &=\alpha_{0} \sum_{j=0}^{t-1} \alpha_{1}^{j}+\alpha_{1}^{t} Y_{0}+\sum_{j=0}^{t-1} \alpha_{1}^{j} e_{t-j} . \end{aligned} \]

Thus \(Y_{t}\) equals an intercept plus the scaled initial condition \(\alpha_{1}^{t} Y_{0}\) and the moving average \(\sum_{j=0}^{t-1} \alpha_{1}^{j} e_{t-j}\).

Now suppose we continue this recursion into the infinite past. By Theorem \(14.3\) this converges if \(\sum_{j=0}^{\infty}\left|\alpha_{1}\right|^{j}<\infty\). The limit is provided by the following well-known result.

Theorem \(14.20 \sum_{k=0}^{\infty} \beta^{k}=\frac{1}{1-\beta}\) is absolutely convergent if \(|\beta|<1\) The series converges by the ratio test (see Theorem A.3 of Probability and Statistics for Economists). To find the limit,

\[ A=\sum_{k=0}^{\infty} \beta^{k}=1+\sum_{k=1}^{\infty} \beta^{k}=1+\beta \sum_{k=0}^{\infty} \beta^{k}=1+\beta A . \]

Solving, we find \(A=1 /(1-\beta)\).

Thus the intercept in (14.26) converges to \(\alpha_{0} /\left(1-\alpha_{1}\right)\). We deduce the following:

Theorem 14.21 If \(\mathbb{E}\left|e_{t}\right|<\infty\) and \(\left|\alpha_{1}\right|<1\) then the AR(1) process (14.25) has the convergent representation

\[ Y_{t}=\mu+\sum_{j=0}^{\infty} \alpha_{1}^{j} e_{t-j} \]

where \(\mu=\alpha_{0} /\left(1-\alpha_{1}\right)\). The AR(1) process \(Y_{t}\) is strictly stationary and ergodic.

We can compute the moments of \(Y_{t}\) from (14.27)

\[ \begin{gathered} \mathbb{E}\left[Y_{t}\right]=\mu+\sum_{k=0}^{\infty} \alpha_{1}^{k} \mathbb{E}\left[e_{t-k}\right]=\mu \\ \operatorname{var}\left[Y_{t}\right]=\sum_{k=0}^{\infty} \alpha_{1}^{2 k} \operatorname{var}\left[e_{t-k}\right]=\frac{\sigma^{2}}{1-\alpha_{1}^{2}} . \end{gathered} \]

One way to calculate the moments is as follows. Apply expectations to both sides of (14.25)

\[ \mathbb{E}\left[Y_{t}\right]=\alpha_{0}+\alpha_{1} \mathbb{E}\left[Y_{t-1}\right]+\mathbb{E}\left[e_{t}\right]=\alpha_{0}+\alpha_{1} \mathbb{E}\left[Y_{t-1}\right] . \]

Stationarity implies \(\mathbb{E}\left[Y_{t-1}\right]=\mathbb{E}\left[Y_{t}\right]\). Solving we find \(\mathbb{E}\left[Y_{t}\right]=\alpha_{0} /\left(1-\alpha_{1}\right)\). Similarly,

\[ \operatorname{var}\left[Y_{t}\right]=\operatorname{var}\left[\alpha Y_{t-1}+e_{t}\right]=\alpha_{1}^{2} \operatorname{var}\left[Y_{t-1}\right]+\operatorname{var}\left[e_{t}\right]=\alpha_{1}^{2} \operatorname{var}\left[Y_{t-1}\right]+\sigma^{2} . \]

Stationarity implies \(\operatorname{var}\left[Y_{t-1}\right]=\operatorname{var}\left[Y_{t}\right]\). Solving we find \(\operatorname{var}\left[Y_{t}\right]=\sigma^{2} /\left(1-\alpha_{1}^{2}\right)\). This method is useful for calculation of autocovariances and autocorrelations. For simplicity set \(\alpha_{0}=0\) so that \(\mathbb{E}\left[Y_{t}\right]=0\) and \(\mathbb{E}\left[Y_{t}^{2}\right]=\operatorname{var}\left[Y_{t}\right]\). We find

\[ \gamma(1)=\mathbb{E}\left[Y_{t-1} Y_{t}\right]=\mathbb{E}\left[Y_{t-1}\left(\alpha_{1} Y_{t-1}+e_{t}\right)\right]=\alpha_{1} \operatorname{var}\left[Y_{t}\right] \]

so

\[ \rho(1)=\gamma(1) / \operatorname{var}\left[Y_{t}\right]=\alpha_{1} . \]

Furthermore,

\[ \gamma(k)=\mathbb{E}\left[Y_{t-k} Y_{t}\right]=\mathbb{E}\left[Y_{t-k}\left(\alpha_{1} Y_{t-1}+e_{t}\right)\right]=\alpha_{1} \gamma(k-1) \]

By recursion we obtain

\[ \begin{aligned} &\gamma(k)=\alpha_{1}^{k} \operatorname{var}\left[Y_{t}\right] \\ &\rho(k)=\alpha_{1}^{k} . \end{aligned} \]

Thus the AR(1) process with \(\alpha_{1} \neq 0\) has non-zero autocorrelations of all orders which decay to zero geometrically as \(k\) increases. For \(\alpha_{1}>0\) the autocorrelations are all positive. For \(\alpha_{1}<0\) the autocorrelations alternate in sign.

We can also express the AR(1) process using the lag operator notation:

\[ \left(1-\alpha_{1} \mathrm{~L}\right) Y_{t}=\alpha_{0}+e_{t} \]

We can write this as \(\alpha(\mathrm{L}) Y_{t}=\alpha_{0}+e_{t}\) where \(\alpha(\mathrm{L})=1-\alpha_{1} \mathrm{~L}\). We call \(\alpha(z)=1-\alpha_{1} z\) the autoregressive polynomial of \(Y_{t}\).

This suggests an alternative way of obtaining the representation (14.27). We can invert the operator (1- \(\left.\alpha_{1} \mathrm{~L}\right)\) to write \(Y_{t}\) as a function of lagged \(e_{t}\). That is, suppose that the inverse operator \(\left(1-\alpha_{1} \mathrm{~L}\right)^{-1}\) exists. Then we can use this operator on (14.28) to find

\[ Y_{t}=\left(1-\alpha_{1} \mathrm{~L}\right)^{-1}\left(1-\alpha_{1} \mathrm{~L}\right) Y_{t}=\left(1-\alpha_{1} \mathrm{~L}\right)^{-1}\left(\alpha_{0}+e_{t}\right) . \]

What is the operator \(\left(1-\alpha_{1} \mathrm{~L}\right)^{-1}\) ? Recall from Theorem \(14.20\) that for \(|x|<1\),

\[ \sum_{j=0}^{\infty} x^{j}=\frac{1}{1-x}=(1-x)^{-1} . \]

Evaluate this expression at \(x=\alpha_{1} z\). We find

\[ \left(1-\alpha_{1} z\right)^{-1}=\sum_{j=0}^{\infty} \alpha_{1}^{j} z^{j} . \]

Setting \(z=\mathrm{L}\) this is

\[ \left(1-\alpha_{1} \mathrm{~L}\right)^{-1}=\sum_{j=0}^{\infty} \alpha_{1}^{j} \mathrm{~L}^{j} . \]

Substituted into (14.29) we obtain

\[ \begin{aligned} Y_{t} &=\left(1-\alpha_{1} \mathrm{~L}\right)^{-1}\left(\alpha_{0}+e_{t}\right) \\ &=\left(\sum_{j=0}^{\infty} \alpha^{j} \mathrm{~L}^{j}\right)\left(\alpha_{0}+e_{t}\right) \\ &=\sum_{j=0}^{\infty} \alpha_{1}^{j} \mathrm{~L}^{j}\left(\alpha_{0}+e_{t}\right) \\ &=\sum_{j=0}^{\infty} \alpha_{1}^{j}\left(\alpha_{0}+e_{t-j}\right) \\ &=\frac{\alpha_{0}}{1-\alpha_{1}}+\sum_{j=0}^{\infty} \alpha_{1}^{j} e_{t-j} \end{aligned} \]

which is (14.27). This is valid for \(\left|\alpha_{1}\right|<1\).

This illustrates another important concept. We say that a polynomial \(\alpha(z)\) is invertible if

\[ \alpha(z)^{-1}=\sum_{j=0}^{\infty} a_{j} z^{j} \]

is absolutely convergent. In particular, the \(\operatorname{AR}(1)\) autoregressive polynomial \(\alpha(z)=1-\alpha_{1} z\) is invertible if \(\left|\alpha_{1}\right|<1\). This is the same condition as for stationarity of the AR(1) process. Invertibility turns out to be a useful property.

14.23 Unit Root and Explosive AR(1) Processes

The AR(1) process (14.25) is stationary if \(\left|\alpha_{1}\right|<1\). What happens otherwise?

If \(\alpha_{0}=0\) and \(\alpha_{1}=1\) the model is known as a random walk.

\[ Y_{t}=Y_{t-1}+e_{t} . \]

This is also called a unit root process, a martingale, or an integrated process. By back-substitution

\[ Y_{t}=Y_{0}+\sum_{j=1}^{t} e_{j} . \]

Thus the initial condition does not disappear for large \(t\). Consequently the series is non-stationary. The autoregressive polynomial \(\alpha(z)=1-z\) is not invertible, meaning that \(Y_{t}\) cannot be written as a convergent function of the infinite past history of \(e_{t}\).

The stochastic behavior of a random walk is noticably different from a stationary AR(1) process. It wanders up and down with equal likelihood and is not mean-reverting. While it has no tendency to return to its previous values the wandering nature of a random walk can give the illusion of mean reversion. The difference is that a random walk will take a very large number of time periods to “revert”.

- Example 1

.jpg)

- Example 2

Figure 14.5: Random Walk Processes

To illustrate, Figure \(14.5\) plots two independent random walk processes. The plot in panel (a) uses the innovations from Figure 14.3(a). The plot in panel (b) uses an independent set of i.i.d. \(N(0,1)\) errors. You can see that the plot in panel (a) appears similar to the MA(8) and AR(1) plots in the sense that the series is smooth with long swings, but the difference is that the series does not return to a longterm mean. It appears to have drifted down over time. The plot in panel (b) appears to have quite different behavior, falling dramatically over a 5-year period, and then appearing to stabilize. These are both common behaviors of random walk processes. If \(\alpha_{1}>1\) the process is explosive. The model (14.25) with \(\alpha_{1}>1\) exhibits exponential growth and high sensitivity to initial conditions. Explosive autoregressive processes do not seem to be good descriptions for most economic time series. While aggregate time series such as the GDP process displayed in Figure 14.1 (a) exhibit a similar exponential growth pattern, the exponential growth can typically be removed by taking logarithms.

The case \(\alpha_{1}<-1\) induces explosive oscillating growth and does not appear to be empirically relevant for economic applications.

14.24 Second-Order Autoregressive Process

The second-order autoregressive process, denoted \(\mathbf{A R}(2)\), is

\[ Y_{t}=\alpha_{0}+\alpha_{1} Y_{t-1}+\alpha_{2} Y_{t-2}+e_{t} \]

where \(e_{t}\) is a strictly stationary and ergodic white noise process. The dynamic patterns of an AR(2) process are more complicated than an AR(1) process.

As a motivating example consider the multiplier-accelerator model of Samuelson (1939). It might be a bit dated as a model but it is simple so hopefully makes the point. Aggregate output (in an economy with no trade) is defined as \(Y_{t}=\) Consumption \(_{t}+\) Investment \(_{t}+\) Gov \(_{t}\). Suppose that individuals make their consumption decisions on the previous period’s income Consumption \(t=b Y_{t-1}\), firms make their investment decisions on the change in consumption Investment \(t_{t}=d \Delta C_{t}\), and government spending is random \(G o v_{t}=a+e_{t}\). Then aggregate output follows

\[ Y_{t}=a+b(1+d) Y_{t-1}-b d Y_{t-2}+e_{t} \]

which is an \(\operatorname{AR}(2)\) process.

Using the lag operator we can write (14.31) as

\[ Y_{t}-\alpha_{1} \mathrm{~L} Y_{t}-\alpha_{2} \mathrm{~L}^{2} Y_{t}=\alpha_{0}+e_{t} \]

or \(\alpha(\mathrm{L}) Y_{t}=\alpha_{0}+e_{t}\) where \(\alpha(\mathrm{L})=1-\alpha_{1} \mathrm{~L}-\alpha_{2} \mathrm{~L}^{2}\). We call \(\alpha(z)\) the autoregressive polynomial of \(Y_{t}\).

We would like to find the conditions for the stationarity of \(Y_{t}\). It turns out that it is convenient to transform the process (14.31) into a VAR(1) process (to be studied in the next chapter). Set \(\widetilde{Y}_{t}=\left(Y_{t}, Y_{t-1}\right)^{\prime}\), which is stationary if and only if \(Y_{t}\) is stationary. Equation (14.31) implies that \(\widetilde{Y}_{t}\) satisfies

\[ \left(\begin{array}{c} Y_{t} \\ Y_{t-1} \end{array}\right)=\left(\begin{array}{cc} \alpha_{1} & \alpha_{2} \\ 1 & 0 \end{array}\right)\left(\begin{array}{c} Y_{t-1} \\ Y_{t-2} \end{array}\right)+\left(\begin{array}{c} a_{0}+e_{t} \\ 0 \end{array}\right) \]

or

\[ \widetilde{Y}_{t}=\boldsymbol{A} \widetilde{Y}_{t-1}+\widetilde{e}_{t} \]

where \(\boldsymbol{A}=\left(\begin{array}{cc}\alpha_{1} & \alpha_{2} \\ 1 & 0\end{array}\right)\) and \(\widetilde{e}_{t}=\left(a_{0}+e_{t}, 0\right)^{\prime}\). Equation (14.33) falls in the class of VAR(1) models studied in Section 15.6. Theorem \(15.6\) shows that the \(\operatorname{VAR}(1)\) process is strictly stationary and ergodic if the innovations satisfy \(\mathbb{E}\left\|\widetilde{e}_{t}\right\|<\infty\) and all eigenvalues \(\lambda\) of \(\boldsymbol{A}\) are less than one in absolute value. The eigenvalues satisfy \(\operatorname{det}\left(\boldsymbol{A}-\boldsymbol{I}_{2} \lambda\right)=0\), where

\[ \operatorname{det}\left(\boldsymbol{A}-\boldsymbol{I}_{2} \lambda\right)=\operatorname{det}\left(\begin{array}{cc} \alpha_{1}-\lambda & \alpha_{2} \\ 1 & -\lambda \end{array}\right)=\lambda^{2}-\lambda \alpha_{1}-\alpha_{2}=\lambda^{2} \alpha(1 / \lambda) \]

and \(\alpha(z)=1-\alpha_{1} z-\alpha_{2} z^{2}\) is the autoregressive polynomial. Thus the eigenvalues satisfy \(\alpha(1 / \lambda)=0\). Factoring the autoregressive polynomial as \(\alpha(z)=\left(1-\lambda_{1} z\right)\left(1-\lambda_{2} z\right)\) the solutions \(\alpha(1 / \lambda)=0\) must equal \(\lambda_{1}\) and \(\lambda_{2}\). The quadratic formula shows that these equal

\[ \lambda_{j}=\frac{\alpha_{1} \pm \sqrt{\alpha_{1}^{2}+4 \alpha_{2}}}{2} . \]

These eigenvalues are real if \(\alpha_{1}^{2}+4 \alpha_{2} \geq 0\) and are complex conjugates otherwise. The AR(2) process is stationary if the solutions (14.34) satisfy \(\left|\lambda_{j}\right|<1\).

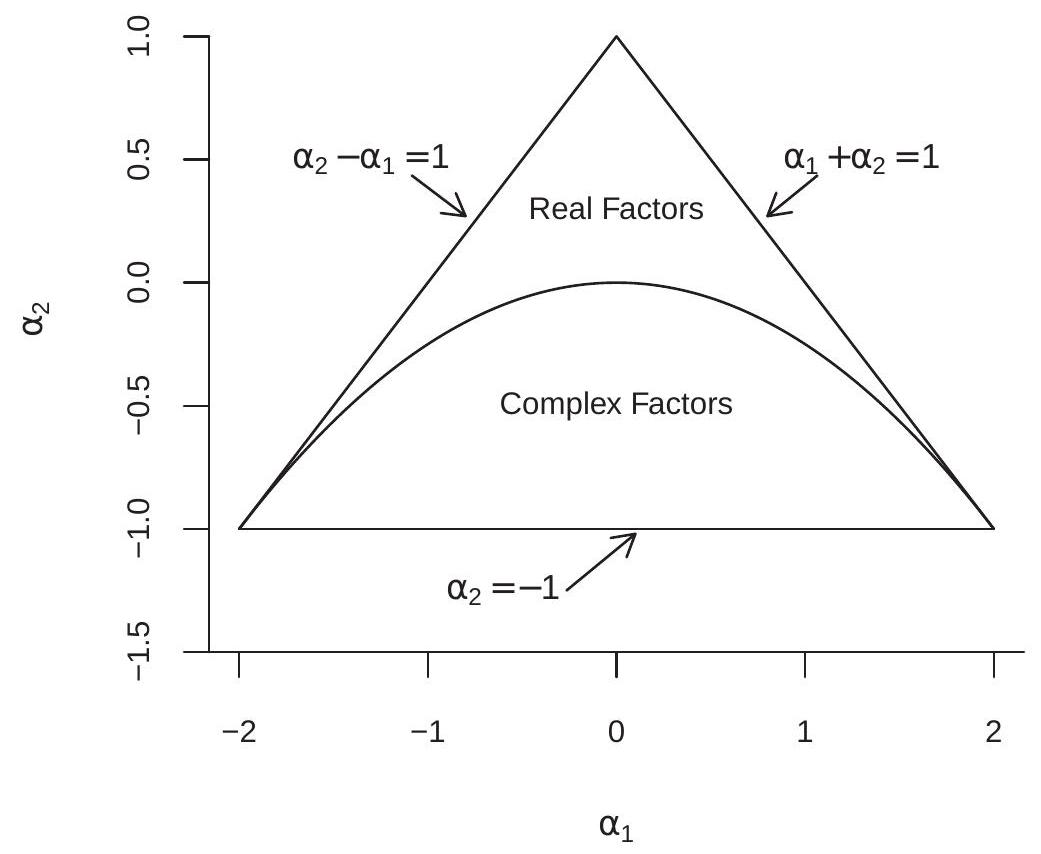

Figure 14.6: Stationarity Region for \(\operatorname{AR}(2)\)

Using (14.34) to solve for the AR coefficients in terms of the eigenvalues we find \(\alpha_{1}=\lambda_{1}+\lambda_{2}\) and \(\alpha_{2}=-\lambda_{1} \lambda_{2}\). With some algebra (the details are deferred to Section 14.47) we can show that \(\left|\lambda_{1}\right|<1\) and \(\left|\lambda_{2}\right|<1\) iff the following restrictions hold on the autoregressive coefficients:

\[ \begin{aligned} \alpha_{1}+\alpha_{2} &<1 \\ \alpha_{2}-\alpha_{1}<1 \\ \alpha_{2} &>-1 . \end{aligned} \]

These restrictions describe a triangle in \(\left(\alpha_{1}, \alpha_{2}\right)\) space which is shown in Figure 14.6. Coefficients within this triangle correspond to a stationary \(\operatorname{AR}(2)\) process.

Take the Samuelson multiplier-accelerator model (14.32). You can calculate that (14.35)-(14.37) are satisfied (and thus the process is strictly stationary) if \(0 \leq b<1\) and \(0 \leq d \leq 1\), which are reasonable restrictions on the model parameters. The most important restriction is \(b<1\), which in the language of old-school macroeconomics is that the marginal propensity to consume out of income is less than one.

Furthermore, the triangle is divided into two regions as marked in Figure 14.6: the region above the parabola \(\alpha_{1}^{2}+4 \alpha_{2}=0\) producing real eigenvalues \(\lambda_{j}\), and the region below the parabola producing complex eigenvalues \(\lambda_{j}\). This is interesting because when the eigenvalues are complex the autocorrelations of \(Y_{t}\) display damped oscillations. For this reason the dynamic patterns of an AR(2) can be much more complicated than those of an AR(1).

Again, take the Samuelson multiplier-accelerator model (14.32). You can calculate that if \(b \geq 0\), the model has real eigenvalues iff \(b \geq 4 d /(1+d)^{2}\), which holds for \(b\) large and \(d\) small, which are “stable” parameterizations. On the other hand, the model has complex eigenvalues (and thus oscillations) for sufficiently small \(b\) and large \(d\).

Theorem 14.22 If \(\mathbb{E}\left|e_{t}\right|<\infty\) and \(\left|\lambda_{j}\right|<1\) for \(\lambda_{j}\) defined in (14.34), or equivalently if the inequalities (14.35)-(14.37) hold, then the \(\mathrm{AR}(2)\) process (14.31) is absolutely convergent, strictly stationary, and ergodic.

The proof is presented in Section \(14.47\).

- \(\operatorname{AR}(2)\)

.jpg)

- \(\operatorname{AR}(2)\) with Complex Roots

Figure 14.7: AR(2) Processes

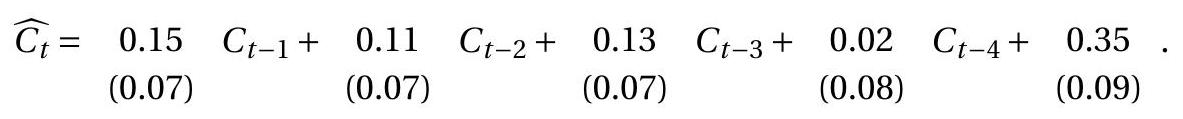

To illustrate, Figure \(14.7\) displays two simulated AR(2) processes. The plot in panel (a) sets \(\alpha_{1}=\alpha_{2}=\) 0.4. These coefficients produce real factors so the process displays behavior similar to that of the AR(1) processes. The plot in panel (b) sets \(\alpha_{1}=1.3\) and \(\alpha_{2}=-0.8\). These coefficients produce complex factors so the process displays oscillations.

14.25 AR(p) Processes

The \(\mathbf{p}^{\text {th }}\)-order autoregressive process, denoted \(\mathbf{A R}(\mathbf{p})\), is

\[ Y_{t}=\alpha_{0}+\alpha_{1} Y_{t-1}+\alpha_{2} Y_{t-2}+\cdots+\alpha_{p} Y_{t-p}+e_{t} \]

where \(e_{t}\) is a strictly stationary and ergodic white noise process.

Using the lag operator,

\[ Y_{t}-\alpha_{1} \mathrm{~L} Y_{t}-\alpha_{2} \mathrm{~L}^{2} Y_{t}-\cdots-\alpha_{p} \mathrm{~L}^{p} Y_{t}=\alpha_{0}+e_{t} \]

or \(\alpha(\mathrm{L}) Y_{t}=\alpha_{0}+e_{t}\) where

\[ \alpha(\mathrm{L})=1-\alpha_{1} \mathrm{~L}-\alpha_{2} \mathrm{~L}^{2}-\cdots-\alpha_{p} \mathrm{~L}^{p} . \]

We call \(\alpha(z)\) the autoregressive polynomial of \(Y_{t}\).

We find conditions for the stationarity of \(Y_{t}\) by a technique similar to that used for the AR(2) process. Set \(\widetilde{Y}_{t}=\left(Y_{t}, Y_{t-1}, \ldots, Y_{t-p+1}\right)^{\prime}\) and \(\widetilde{e}_{t}=\left(a_{0}+e_{t}, 0, \ldots, 0\right)^{\prime}\). Equation (14.38) implies that \(\widetilde{Y}_{t}\) satisfies the VAR(1) equation (14.33) with

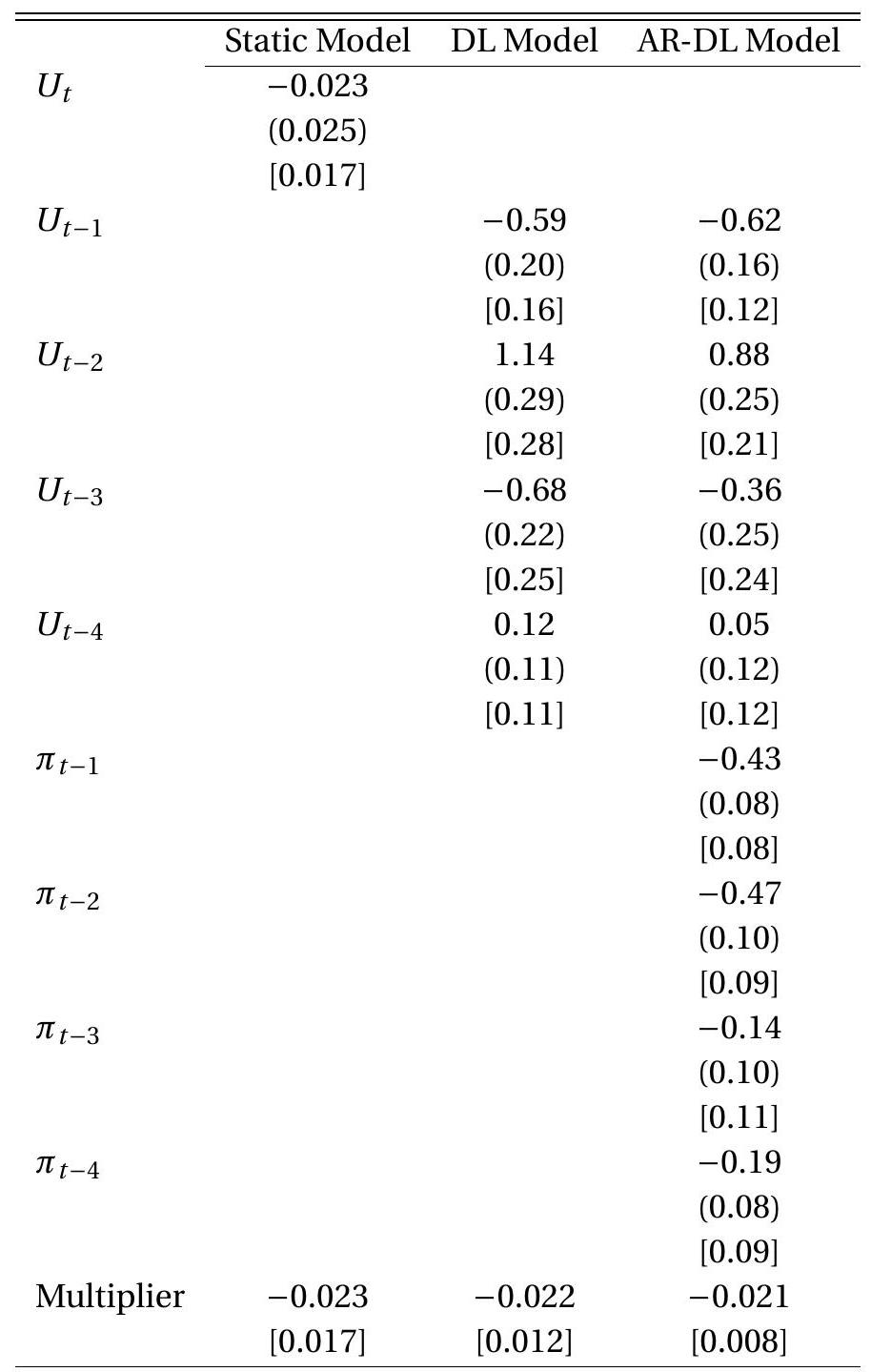

\[ \boldsymbol{A}=\left(\begin{array}{ccccc} \alpha_{1} & \alpha_{2} & \cdots & \alpha_{p-1} & \alpha_{p} \\ 1 & 0 & \cdots & 0 & 0 \\ 0 & 1 & \cdots & 0 & 0 \\ \vdots & \vdots & \ddots & \vdots & \vdots \\ 0 & 0 & \cdots & 1 & 0 \end{array}\right) \]