Chapter 9: Hypothesis Testing

9 Hypothesis Testing

In Chapter 5 we briefly introduced hypothesis testing in the context of the normal regression model. In this chapter we explore hypothesis testing in greater detail with a particular emphasis on asymptotic inference. For more detail on the foundations see Chapter 13 of Probability and Statistics for Economists.

9.1 Hypotheses

In Chapter 8 we discussed estimation subject to restrictions, including linear restrictions (8.1), nonlinear restrictions (8.44), and inequality restrictions (8.49). In this chapter we discuss tests of such restrictions.

Hypothesis tests attempt to assess whether there is evidence contrary to a proposed restriction. Let \(\theta=r(\beta)\) be a \(q \times 1\) parameter of interest where \(r: \mathbb{R}^{k} \rightarrow \Theta \subset \mathbb{R}^{q}\) is some transformation. For example, \(\theta\) may be a single coefficient, e.g. \(\theta=\beta_{j}\), the difference between two coefficients, e.g. \(\theta=\beta_{j}-\beta_{\ell}\), or the ratio of two coefficients, e.g. \(\theta=\beta_{j} / \beta_{\ell}\).

A point hypothesis concerning \(\theta\) is a proposed restriction such as

\[ \theta=\theta_{0} \]

where \(\theta_{0}\) is a hypothesized (known) value.

More generally, letting \(\beta \in B \subset \mathbb{R}^{k}\) be the parameter space, a hypothesis is a restriction \(\beta \in B_{0}\) where \(B_{0}\) is a proper subset of \(B\). This specializes to (9.1) by setting \(B_{0}=\left\{\beta \in B: r(\beta)=\theta_{0}\right\}\).

In this chapter we will focus exclusively on point hypotheses of the form (9.1) as they are the most common and relatively simple to handle.

The hypothesis to be tested is called the null hypothesis.

Definition 9.1 The null hypothesis \(\mathbb{M}_{0}\) is the restriction \(\theta=\theta_{0}\) or \(\beta \in B_{0}\).

We often write the null hypothesis as \(\mathbb{M}_{0}: \theta=\theta_{0}\) or \(\mathbb{M}_{0}: r(\beta)=\theta_{0}\).

The complement of the null hypothesis (the collection of parameter values which do not satisfy the null hypothesis) is called the alternative hypothesis.

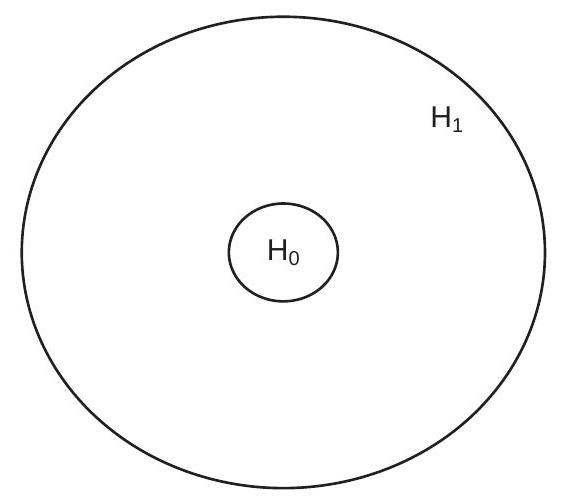

Definition 9.2 The alternative hypothesis \(\mathbb{M}_{1}\) is the set \(\left\{\theta \in \Theta: \theta \neq \theta_{0}\right\}\) or \(\left\{\beta \in B: \beta \notin B_{0}\right\}\) We often write the alternative hypothesis as \(\mathbb{M}_{1}: \theta \neq \theta_{0}\) or \(\mathbb{M}_{1}: r(\beta) \neq \theta_{0}\). For simplicity, we often refer to the hypotheses as “the null” and “the alternative”. Figure 9.1(a) illustrates the division of the parameter space into null and alternative hypotheses.

- Null and Alternative Hypotheses

.jpg)

- Acceptance and Rejection Regions

Figure 9.1: Hypothesis Testing

In hypothesis testing, we assume that there is a true (but unknown) value of \(\theta\) and this value either satisfies \(\mathbb{M}_{0}\) or does not satisfy \(\mathbb{M}_{0}\). The goal of hypothesis testing is to assess whether or not \(\mathbb{H}_{0}\) is true by asking if \(\mathbb{M}_{0}\) is consistent with the observed data.

To be specific, take our example of wage determination and consider the question: Does union membership affect wages? We can turn this into a hypothesis test by specifying the null as the restriction that a coefficient on union membership is zero in a wage regression. Consider, for example, the estimates reported in Table 4.1. The coefficient for “Male Union Member” is \(0.095\) (a wage premium of \(9.5 %\) ) and the coefficient for “Female Union Member” is \(0.022\) (a wage premium of \(2.2 %\) ). These are estimates, not the true values. The question is: Are the true coefficients zero? To answer this question the testing method asks the question: Are the observed estimates compatible with the hypothesis, in the sense that the deviation from the hypothesis can be reasonably explained by stochastic variation? Or are the observed estimates incompatible with the hypothesis, in the sense that that the observed estimates would be highly unlikely if the hypothesis were true?

9.2 Acceptance and Rejection

A hypothesis test either accepts the null hypothesis or rejects the null hypothesis in favor of the alternative hypothesis. We can describe these two decisions as “Accept \(\mathbb{H}_{0}\)” and “Reject \(\mathbb{H}_{0}\)”. In the example given in the previous section the decision is either to accept the hypothesis that union membership does not affect wages, or to reject the hypothesis in favor of the alternative that union membership does affect wages.

The decision is based on the data and so is a mapping from the sample space to the decision set. This splits the sample space into two regions \(S_{0}\) and \(S_{1}\) such that if the observed sample falls into \(S_{0}\) we accept \(\mathbb{M}_{0}\), while if the sample falls into \(S_{1}\) we reject \(\mathbb{M}_{0}\). The set \(S_{0}\) is called the acceptance region and the set \(S_{1}\) the rejection or critical region.

It is convenient to express this mapping as a real-valued function called a test statistic

\[ T=T\left(\left(Y_{1}, X_{1}\right), \ldots,\left(Y_{n}, X_{n}\right)\right) \]

relative to a critical value \(c\). The hypothesis test then consists of the decision rule:

Accept \(\mathbb{H}_{0}\) if \(T \leq c\).

Reject \(\mathbb{M}_{0}\) if \(T>c\).

Figure 9.1(b) illustrates the division of the sample space into acceptance and rejection regions.

A test statistic \(T\) should be designed so that small values are likely when \(\mathbb{H}_{0}\) is true and large values are likely when \(\mathbb{M}_{1}\) is true. There is a well developed statistical theory concerning the design of optimal tests. We will not review that theory here, but instead refer the reader to Lehmann and Romano (2005). In this chapter we will summarize the main approaches to the design of test statistics.

The most commonly used test statistic is the absolute value of the t-statistic

\[ T=\left|T\left(\theta_{0}\right)\right| \]

where

\[ T(\theta)=\frac{\widehat{\theta}-\theta}{s(\widehat{\theta})} \]

is the t-statistic from (7.33), \(\widehat{\theta}\) is a point estimator, and \(s(\widehat{\theta})\) its standard error. \(T\) is an appropriate statistic when testing hypotheses on individual coefficients or real-valued parameters \(\theta=h(\beta)\) and \(\theta_{0}\) is the hypothesized value. Quite typically \(\theta_{0}=0\), as interest focuses on whether or not a coefficient equals zero, but this is not the only possibility. For example, interest may focus on whether an elasticity \(\theta\) equals 1 , in which case we may wish to test \(\mathbb{H}_{0}: \theta=1\).

9.3 Type I Error

A false rejection of the null hypothesis \(\mathbb{H}_{0}\) (rejecting \(\mathbb{M}_{0}\) when \(\mathbb{H}_{0}\) is true) is called a Type I error. The probability of a Type I error is called the size of the test.

\[ \mathbb{P}\left[\text { Reject } \mathbb{H}_{0} \mid \mathbb{H}_{0} \text { true }\right]=\mathbb{P}\left[T>c \mid \mathbb{H}_{0} \text { true }\right] . \]

The uniform size of the test is the supremum of (9.4) across all data distributions which satisfy \(\mathbb{H}_{0}\). A primary goal of test construction is to limit the incidence of Type I error by bounding the size of the test.

For the reasons discussed in Chapter 7 , in typical econometric models the exact sampling distributions of estimators and test statistics are unknown and hence we cannot explicitly calculate (9.4). Instead, we typically rely on asymptotic approximations. Suppose that the test statistic has an asymptotic distribution under \(\mathbb{H}_{0}\). That is, when \(\mathbb{H}_{0}\) is true

\[ T \longrightarrow \underset{d}{\xi} \]

as \(n \rightarrow \infty\) for some continuously-distributed random variable \(\xi\). This is not a substantive restriction as most conventional econometric tests satisfy (9.5). Let \(G(u)=\mathbb{P}[\xi \leq u]\) denote the distribution of \(\xi\). We call \(\xi\) (or \(G\) ) the asymptotic null distribution. It is desirable to design test statistics \(T\) whose asymptotic null distribution \(G\) is known and does not depend on unknown parameters. In this case we say that \(T\) is asymptotically pivotal.

For example, if the test statistic equals the absolute \(t\)-statistic from (9.2), then we know from Theorem \(7.11\) that if \(\theta=\theta_{0}\) (that is, the null hypothesis holds), then \(T \underset{d}{\rightarrow}|Z|\) as \(n \rightarrow \infty\) where \(Z \sim \mathrm{N}(0,1)\). This means that \(G(u)=\mathbb{P}[|Z| \leq u]=2 \Phi(u)-1\), the distribution of the absolute value of the standard normal as shown in (7.34). This distribution does not depend on unknowns and is pivotal.

We define the asymptotic size of the test as the asymptotic probability of a Type I error:

\[ \lim _{n \rightarrow \infty} \mathbb{P}\left[T>c \mid \mathbb{M}_{0} \text { true }\right]=\mathbb{P}[\xi>c]=1-G(c) . \]

We see that the asymptotic size of the test is a simple function of the asymptotic null distribution \(G\) and the critical value \(c\). For example, the asymptotic size of a test based on the absolute t-statistic with critical value \(c\) is \(2(1-\Phi(c))\).

In the dominant approach to hypothesis testing the researcher pre-selects a significance level \(\alpha \epsilon\) \((0,1)\) and then selects \(c\) so the asymptotic size is no larger than \(\alpha\). When the asymptotic null distribution \(G\) is pivotal we accomplish this by setting \(c\) equal to the \(1-\alpha\) quantile of the distribution \(G\). (If the distribution \(G\) is not pivotal more complicated methods must be used.) We call \(c\) the asymptotic critical value because it has been selected from the asymptotic null distribution. For example, since \(2(1-\Phi(1.96))=0.05\) it follows that the \(5 %\) asymptotic critical value for the absolute t-statistic is \(c=1.96\). Calculation of normal critical values is done numerically in statistical software. For example, in MATLAB the command is norminv \((1-\alpha / 2)\).

9.4 t tests

As we mentioned earlier, the most common test of the one-dimensional hypothesis \(\mathbb{H}_{0}: \theta=\theta_{0} \in \mathbb{R}\) against the alternative \(\mathbb{M}_{1}: \theta \neq \theta_{0}\) is the absolute value of the \(\mathrm{t}\)-statistic (9.3). We now formally state its asymptotic null distribution, which is a simple application of Theorem 7.11.

Theorem 9.1 Under Assumptions 7.2, 7.3, and \(\mathbb{H}_{0}: \theta=\theta_{0} \in \mathbb{R}, T\left(\theta_{0}\right) \underset{d}{\longrightarrow} Z \sim\) \(\mathrm{N}(0,1)\). For \(c\) satisfying \(\alpha=2(1-\Phi(c)), \mathbb{P}\left[\left|T\left(\theta_{0}\right)\right|>c \mid \mathbb{H}_{0}\right] \rightarrow \alpha\), and the test “Reject \(\mathbb{H}_{0}\) if \(\left|T\left(\theta_{0}\right)\right|>c\)” has asymptotic size \(\alpha\).

Theorem 9.1 shows that asymptotic critical values can be taken from the normal distribution. As in our discussion of asymptotic confidence intervals (Section 7.13) the critical value could alternatively be taken from the student \(t\) distribution, which would be the exact test in the normal regression model (Section 5.12). Indeed, \(t\) critical values are the default in packages such as Stata. Since the critical values from the student \(t\) distribution are (slightly) larger than those from the normal distribution, student \(t\) critical values slightly decrease the rejection probability of the test. In practical applications the difference is typically unimportant unless the sample size is quite small (in which case the asymptotic approximation should be questioned as well).

The alternative hypothesis \(\theta \neq \theta_{0}\) is sometimes called a “two-sided” alternative. In contrast, sometimes we are interested in testing for one-sided alternatives such as \(\mathbb{M}_{1}: \theta>\theta_{0}\) or \(\mathbb{H}_{1}: \theta<\theta_{0}\). Tests of \(\theta=\theta_{0}\) against \(\theta>\theta_{0}\) or \(\theta<\theta_{0}\) are based on the signed t-statistic \(T=T\left(\theta_{0}\right)\). The hypothesis \(\theta=\theta_{0}\) is rejected in favor of \(\theta>\theta_{0}\) if \(T>c\) where \(c\) satisfies \(\alpha=1-\Phi(c)\). Negative values of \(T\) are not taken as evidence against \(\mathbb{M}_{0}\), as point estimates \(\widehat{\theta}\) less than \(\theta_{0}\) do not point to \(\theta>\theta_{0}\). Since the critical values are taken from the single tail of the normal distribution they are smaller than for two-sided tests. Specifically, the asymptotic \(5 %\) critical value is \(c=1.645\). Thus, we reject \(\theta=\theta_{0}\) in favor of \(\theta>\theta_{0}\) if \(T>1.645\).

Conversely, tests of \(\theta=\theta_{0}\) against \(\theta<\theta_{0}\) reject \(\mathbb{M}_{0}\) for negative t-statistics, e.g. if \(T<-c\). Large positive values of \(T\) are not evidence for \(\mathbb{H}_{1}: \theta<\theta_{0}\). An asymptotic \(5 %\) test rejects if \(T<-1.645\).

There seems to be an ambiguity. Should we use the two-sided critical value \(1.96\) or the one-sided critical value 1.645? The answer is that in most cases the two-sided critical value is appropriate. We should use the one-sided critical values only when the parameter space is known to satisfy a one-sided restriction such as \(\theta \geq \theta_{0}\). This is when the test of \(\theta=\theta_{0}\) against \(\theta>\theta_{0}\) makes sense. If the restriction \(\theta \geq \theta_{0}\) is not known a priori then imposing this restriction to test \(\theta=\theta_{0}\) against \(\theta>\theta_{0}\) does not makes sense. Since linear regression coefficients typically do not have a priori sign restrictions, the standard convention is to use two-sided critical values.

This may seem contrary to the way testing is presented in statistical textbooks which often focus on one-sided alternative hypotheses. The latter focus is primarily for pedagogy as the one-sided theoretical problem is cleaner and easier to understand.

9.5 Type II Error and Power

A false acceptance of the null hypothesis \(\mathbb{H}_{0}\) (accepting \(\mathbb{M}_{0}\) when \(\mathbb{H}_{1}\) is true) is called a Type II error. The rejection probability under the alternative hypothesis is called the power of the test, and equals 1 minus the probability of a Type II error:

\[ \pi(\theta)=\mathbb{P}\left[\text { Reject } \mathbb{H}_{0} \mid \mathbb{H}_{1} \text { true }\right]=\mathbb{P}\left[T>c \mid \mathbb{M}_{1} \text { true }\right] . \]

We call \(\pi(\theta)\) the power function and is written as a function of \(\theta\) to indicate its dependence on the true value of the parameter \(\theta\).

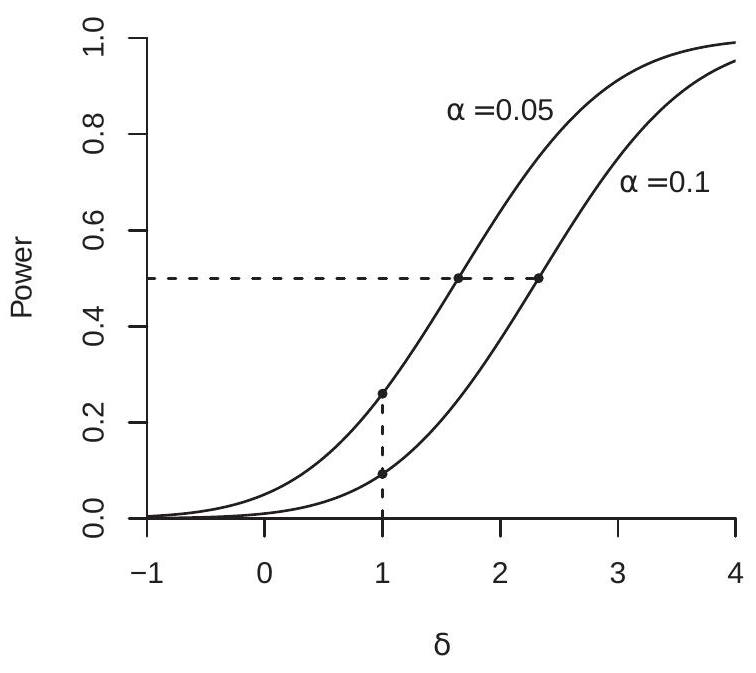

In the dominant approach to hypothesis testing the goal of test construction is to have high power subject to the constraint that the size of the test is lower than the pre-specified significance level. Generally, the power of a test depends on the true value of the parameter \(\theta\), and for a well-behaved test the power is increasing both as \(\theta\) moves away from the null hypothesis \(\theta_{0}\) and as the sample size \(n\) increases.

Given the two possible states of the world \(\left(\mathbb{M}_{0}\right.\) or \(\left.\mathbb{H}_{1}\right)\) and the two possible decisions (Accept \(\mathbb{M}_{0}\) or Reject \(\mathbb{M}_{0}\) ) there are four possible pairings of states and decisions as is depicted in Table 9.1.

Table 9.1: Hypothesis Testing Decisions

| {Accept \(\mathbb{H}_{0}\) | {Reject \(\mathbb{M}_{0}\) | |

|---|---|---|

| \(\mathbb{M}_{0}\) true | Correct Decision | Type I Error |

| \(\mathbb{H}_{1}\) true | Type II Error | Correct Decision |

Given a test statistic \(T\), increasing the critical value \(c\) increases the acceptance region \(S_{0}\) while decreasing the rejection region \(S_{1}\). This decreases the likelihood of a Type I error (decreases the size) but increases the likelihood of a Type II error (decreases the power). Thus the choice of \(c\) involves a trade-off between size and the power. This is why the significance level \(\alpha\) of the test cannot be set arbitrarily small. Otherwise the test will not have meaningful power.

It is important to consider the power of a test when interpreting hypothesis tests as an overly narrow focus on size can lead to poor decisions. For example, it is easy to design a test which has perfect size yet has trivial power. Specifically, for any hypothesis we can use the following test: Generate a random variable \(U \sim U[0,1]\) and reject \(\mathbb{M}_{0}\) if \(U<\alpha\). This test has exact size of \(\alpha\). Yet the test also has power precisely equal to \(\alpha\). When the power of a test equals the size we say that the test has trivial power. Nothing is learned from such a test.

9.6 Statistical Significance

Testing requires a pre-selected choice of significance level \(\alpha\) yet there is no objective scientific basis for choice of \(\alpha\). Nevertheless, the common practice is to set \(\alpha=0.05\) (5%). Alternative common values are \(\alpha=0.10(10 %)\) and \(\alpha=0.01(1 %)\). These choices are somewhat the by-product of traditional tables of critical values and statistical software.

The informal reasoning behind the \(5 %\) critical value is to ensure that Type I errors should be relatively unlikely - that the decision “Reject \(\mathbb{H}_{0}\)” has scientific strength - yet the test retains power against reasonable alternatives. The decision “Reject \(\mathbb{M}_{0}\)” means that the evidence is inconsistent with the null hypothesis in the sense that it is relatively unlikely ( 1 in 20) that data generated by the null hypothesis would yield the observed test result.

In contrast, the decision “Accept \(\mathbb{H}_{0}\)” is not a strong statement. It does not mean that the evidence supports \(\mathbb{M}_{0}\), only that there is insufficient evidence to reject \(\mathbb{M}_{0}\). Because of this it is more accurate to use the label “Do not Reject \(\mathbb{M}_{0}\)” instead of “Accept \(\mathbb{H}_{0}\)”.

When a test rejects \(\mathbb{M}_{0}\) at the \(5 %\) significance level it is common to say that the statistic is statistically significant and if the test accepts \(\mathbb{M}_{0}\) it is common to say that the statistic is not statistically significant or that it is statistically insignificant. It is helpful to remember that this is simply a compact way of saying “Using the statistic \(T\) the hypothesis \(\mathbb{H}_{0}\) can [cannot] be rejected at the asymptotic \(5 %\) level.” Furthermore, when the null hypothesis \(\mathbb{M}_{0}: \theta=0\) is rejected it is common to say that the coefficient \(\theta\) is statistically significant, because the test has rejected the hypothesis that the coefficient is equal to zero.

Let us return to the example about the union wage premium as measured in Table 4.1. The absolute \(\mathrm{t}\)-statistic for the coefficient on “Male Union Member” is \(0.095 / 0.020=4.7\), which is greater than the \(5 %\) asymptotic critical value of \(1.96\). Therefore we reject the hypothesis that union membership does not affect wages for men. In this case we can say that union membership is statistically significant for men. However, the absolute t-statistic for the coefficient on “Female Union Member” is \(0.023 / 0.020=1.2\), which is less than \(1.96\) and therefore we do not reject the hypothesis that union membership does not affect wages for women. In this case we find that membership for women is not statistically significant.

When a test accepts a null hypothesis (when a test is not statistically significant) a common misinterpretation is that this is evidence that the null hypothesis is true. This is incorrect. Failure to reject is by itself not evidence. Without an analysis of power we do not know the likelihood of making a Type II error and thus are uncertain. In our wage example it would be a mistake to write that “the regression finds that female union membership has no effect on wages”. This is an incorrect and most unfortunate interpretation. The test has failed to reject the hypothesis that the coefficient is zero but that does not mean that the coefficient is actually zero.

When a test rejects a null hypothesis (when a test is statistically significant) it is strong evidence against the hypothesis (because if the hypothesis were true then rejection is an unlikely event). Rejection should be taken as evidence against the null hypothesis. However, we can never conclude that the null hypothesis is indeed false as we cannot exclude the possibility that we are making a Type I error.

Perhaps more importantly, there is an important distinction between statistical and economic significance. If we correctly reject the hypothesis \(\mathbb{M}_{0}: \theta=0\) it means that the true value of \(\theta\) is non-zero. This includes the possibility that \(\theta\) may be non-zero but close to zero in magnitude. This only makes sense if we interpret the parameters in the context of their relevant models. In our wage regression example we might consider wage effects of \(1 %\) magnitude or less as being “close to zero”. In a log wage regression this corresponds to a dummy variable with a coefficient less than \(0.01\). If the standard error is sufficiently small (less than \(0.005\) ) then a coefficient estimate of \(0.01\) will be statistically significant but not economically significant. This occurs frequently in applications with very large sample sizes where standard errors can be quite small.

The solution is to focus whenever possible on confidence intervals and the economic meaning of the coefficients. For example, if the coefficient estimate is \(0.005\) with a standard error of \(0.002\) then a \(95 %\) confidence interval would be \([0.001,0.009]\) indicating that the true effect is likely between \(0 %\) and \(1 %\), and hence is slightly positive but small. This is much more informative than the misleading statement “the effect is statistically positive”.

9.7 P-Values

Continuing with the wage regression estimates reported in Table 4.1, consider another question: Does marriage status affect wages? To test the hypothesis that marriage status has no effect on wages, we examine the t-statistics for the coefficients on “Married Male” and “Married Female” in Table 4.1, which are \(0.211 / 0.010=22\) and \(0.016 / 0.010=1.7\), respectively. The first exceeds the asymptotic \(5 %\) critical value of \(1.96\) so we reject the hypothesis for men. The second is smaller than \(1.96\) so we fail to reject the hypothesis for women. Taking a second look at the statistics we see that the statistic for men (22) is exceptionally high and that for women (1.7) is only slightly below the critical value. Suppose that the \(\mathrm{t}\)-statistic for women were slightly increased to 2.0. This is larger than the critical value so would lead to the decision “Reject \(\mathbb{M}_{0}\)” rather than “Accept \(\mathbb{M}_{0}\)”. Should we really be making a different decision if the \(\mathrm{t}\)-statistic is \(2.0\) rather than 1.7? The difference in values is small, shouldn’t the difference in the decision be also small? Thinking through these examples it seems unsatisfactory to simply report “Accept \(\mathbb{M}_{0}\)” or “Reject \(\mathbb{H}_{0}\)”. These two decisions do not summarize the evidence. Instead, the magnitude of the statistic \(T\) suggests a “degree of evidence” against \(\mathbb{H}_{0}\). How can we take this into account?

The answer is to report what is known as the asymptotic p-value

\[ p=1-G(T) . \]

Since the distribution function \(G\) is monotonically increasing, the p-value is a monotonically decreasing function of \(T\) and is an equivalent test statistic. Instead of rejecting \(\mathbb{R}_{0}\) at the significance level \(\alpha\) if \(T>c\), we can reject \(\mathbb{M}_{0}\) if \(p<\alpha\). Thus it is sufficient to report \(p\), and let the reader decide. In practice, the p-value is calculated numerically. For example, in MATLAB the command is \(2 *(1-\operatorname{normal} c d f(\mathrm{abs}(\mathrm{t})))\).

It is instructive to interpret \(p\) as the marginal significance level: the smallest value of \(\alpha\) for which the test \(T\) “rejects” the null hypothesis. That is, \(p=0.11\) means that \(T\) rejects \(\mathbb{H}_{0}\) for all significance levels greater than \(0.11\), but fails to reject \(\mathbb{M}_{0}\) for significance levels less than \(0.11\).

Furthermore, the asymptotic p-value has a very convenient asymptotic null distribution. Since \(T-\vec{d}\) \(\xi\) under \(\mathbb{M}_{0}\), then \(p=1-G(T) \underset{d}{\longrightarrow} 1-G(\xi)\), which has the distribution

\[ \begin{aligned} \mathbb{P}[1-G(\xi) \leq u] &=\mathbb{P}[1-u \leq G(\xi)] \\ &=1-\mathbb{P}\left[\xi \leq G^{-1}(1-u)\right] \\ &=1-G\left(G^{-1}(1-u)\right) \\ &=1-(1-u) \\ &=u, \end{aligned} \]

which is the uniform distribution on \([0,1]\). (This calculation assumes that \(G(u)\) is strictly increasing which is true for conventional asymptotic distributions such as the normal.) Thus \(p \underset{d}{\longrightarrow} U[0,1]\). This means that the “unusualness” of \(p\) is easier to interpret than the “unusualness” of \(T\).

An important caveat is that the \(\mathrm{p}\)-value \(p\) should not be interpreted as the probability that either hypothesis is true. A common mis-interpretation is that \(p\) is the probability “that the null hypothesis is true.” This is incorrect. Rather, \(p\) is the marginal significance level-a measure of the strength of information against the null hypothesis. For a t-statistic the p-value can be calculated either using the normal distribution or the student \(t\) distribution, the latter presented in Section 5.12. p-values calculated using the student \(t\) will be slightly larger, though the difference is small when the sample size is large.

Returning to our empirical example, for the test that the coefficient on “Married Male” is zero the pvalue is \(0.000\). This means that it would be nearly impossible to observe a t-statistic as large as 22 when the true value of the coefficient is zero. When presented with such evidence we can say that we “strongly reject” the null hypothesis, that the test is “highly significant”, or that “the test rejects at any conventional critical value”. In contrast, the p-value for the coefficient on “Married Female” is \(0.094\). In this context it is typical to say that the test is “close to significant”, meaning that the p-value is larger than \(0.05\), but not too much larger.

A related but inferior empirical practice is to append asterisks \((*)\) to coefficient estimates or test statistics to indicate the level of significance. A common practice to to append a single asterisk (\textit{) for an estimate or test statistic which exceeds the \(10 %\) critical value (i.e., is significant at the \(10 %\) level), append a double asterisk () for a test which exceeds the \(5 %\) critical value, and append a triple asterisk (}) for a test which exceeds the \(1 %\) critical value. Such a practice can be better than a table of raw test statistics as the asterisks permit a quick interpretation of significance. On the other hand, asterisks are inferior to p-values, which are also easy and quick to interpret. The goal is essentially the same; it is wiser to report p-values whenever possible and avoid the use of asterisks.

Our recommendation is that the best empirical practice is to compute and report the asymptotic pvalue \(p\) rather than simply the test statistic \(T\), the binary decision Accept/Reject, or appending asterisks. The p-value is a simple statistic, easy to interpret, and contains more information than the other choices.

We now summarize the main features of hypothesis testing.

Select a significance level \(\alpha\).

Select a test statistic \(T\) with asymptotic distribution \(T \underset{d}{\rightarrow} \xi\) under \(\mathbb{H}_{0}\).

Set the asymptotic critical value \(c\) so that \(1-G(c)=\alpha\), where \(G\) is the distribution function of \(\xi\).

Calculate the asymptotic p-value \(p=1-G(T)\).

Reject \(\mathbb{R}_{0}\) if \(T>c\), or equivalently \(p<\alpha\).

Accept \(\mathbb{H}_{0}\) if \(T \leq c\), or equivalently \(p \geq \alpha\).

Report \(p\) to summarize the evidence concerning \(\mathbb{M}_{0}\) versus \(\mathbb{M}_{1}\).

9.8 t-ratios and the Abuse of Testing

In Section \(4.19\) we argued that a good applied practice is to report coefficient estimates \(\widehat{\theta}\) and standard errors \(s(\widehat{\theta})\) for all coefficients of interest in estimated models. With \(\widehat{\theta}\) and \(s(\widehat{\theta})\) the reader can easily construct confidence intervals \([\widehat{\theta} \pm 2 s(\widehat{\theta})]\) and t-statistics \(\left(\widehat{\theta}-\theta_{0}\right) / s(\widehat{\theta})\) for hypotheses of interest.

Some applied papers (especially older ones) report t-ratios \(T=\widehat{\theta} / s(\widehat{\theta})\) instead of standard errors. This is poor econometric practice. While the same information is being reported (you can back out standard errors by division, e.g. \(s(\widehat{\theta})=\widehat{\theta} / T)\), standard errors are generally more helpful to readers than t-ratios. Standard errors help the reader focus on the estimation precision and confidence intervals, while t-ratios focus attention on statistical significance. While statistical significance is important, it is less important that the parameter estimates themselves and their confidence intervals. The focus should be on the meaning of the parameter estimates, their magnitudes, and their interpretation, not on listing which variables have significant (e.g. non-zero) coefficients. In many modern applications sample sizes are very large so standard errors can be very small. Consequently t-ratios can be large even if the coefficient estimates are economically small. In such contexts it may not be interesting to announce “The coefficient is non-zero!” Instead, what is interesting to announce is that “The coefficient estimate is economically interesting!”

In particular, some applied papers report coefficient estimates and t-ratios and limit their discussion of the results to describing which variables are “significant” (meaning that their t-ratios exceed 2) and the signs of the coefficient estimates. This is very poor empirical work and should be studiously avoided. It is also a recipe for banishment of your work to lower tier economics journals.

Fundamentally, the common t-ratio is a test for the hypothesis that a coefficient equals zero. This should be reported and discussed when this is an interesting economic hypothesis of interest. But if this is not the case it is distracting.

One problem is that standard packages, such as Stata, by default report t-statistics and p-values for every estimated coefficient. While this can be useful (as a user doesn’t need to explicitly ask to test a desired coefficient) it can be misleading as it may unintentionally suggest that the entire list of t-statistics and p-values are important. Instead, a user should focus on tests of scientifically motivated hypotheses.

In general, when a coefficient \(\theta\) is of interest it is constructive to focus on the point estimate, its standard error, and its confidence interval. The point estimate gives our “best guess” for the value. The standard error is a measure of precision. The confidence interval gives us the range of values consistent with the data. If the standard error is large then the point estimate is not a good summary about \(\theta\). The endpoints of the confidence interval describe the bounds on the likely possibilities. If the confidence interval embraces too broad a set of values for \(\theta\) then the dataset is not sufficiently informative to render useful inferences about \(\theta\). On the other hand if the confidence interval is tight then the data have produced an accurate estimate and the focus should be on the value and interpretation of this estimate. In contrast, the statement “the t-ratio is highly significant” has little interpretive value.

The above discussion requires that the researcher knows what the coefficient \(\theta\) means (in terms of the economic problem) and can interpret values and magnitudes, not just signs. This is critical for good applied econometric practice.

For example, consider the question about the effect of marriage status on mean log wages. We had found that the effect is “highly significant” for men and “close to significant” for women. Now, let’s construct asymptotic \(95 %\) confidence intervals for the coefficients. The one for men is \([0.19,0.23]\) and that for women is \([-0.00,0.03]\). This shows that average wages for married men are about \(19-23 %\) higher than for unmarried men, which is substantial, while the difference for women is about 0-3%, which is small. These magnitudes are more informative than the results of the hypothesis tests.

9.9 Wald Tests

The t-test is appropriate when the null hypothesis is a real-valued restriction. More generally there may be multiple restrictions on the coefficient vector \(\beta\). Suppose that we have \(q>1\) restrictions which can be written in the form (9.1). It is natural to estimate \(\theta=r(\beta)\) by the plug-in estimator \(\widehat{\theta}=r(\widehat{\beta})\). To test \(\mathbb{H}_{0}: \theta=\theta_{0}\) against \(\mathbb{H}_{1}: \theta \neq \theta_{0}\) one approach is to measure the magnitude of the discrepancy \(\widehat{\theta}-\theta_{0}\). As this is a vector there is more than one measure of its length. One simple measure is the weighted quadratic form known as the Wald statistic. This is (7.37) evaluated at the null hypothesis

\[ W=W\left(\theta_{0}\right)=\left(\widehat{\theta}-\theta_{0}\right)^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\theta}}^{-1}\left(\widehat{\theta}-\theta_{0}\right) \]

where \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}=\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\beta}} \widehat{\boldsymbol{R}}\) is an estimator of \(\boldsymbol{V}_{\widehat{\theta}}\) and \(\widehat{\boldsymbol{R}}=\frac{\partial}{\partial \beta} r(\widehat{\beta})^{\prime}\). Notice that we can write \(W\) alternatively as

\[ W=n\left(\widehat{\theta}-\theta_{0}\right)^{\prime} \widehat{\boldsymbol{V}}_{\theta}^{-1}\left(\widehat{\theta}-\theta_{0}\right) \]

using the asymptotic variance estimator \(\widehat{\boldsymbol{V}}_{\theta}\), or we can write it directly as a function of \(\widehat{\beta}\) as

\[ W=\left(r(\widehat{\beta})-\theta_{0}\right)^{\prime}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\beta}} \widehat{\boldsymbol{R}}\right)^{-1}\left(r(\widehat{\beta})-\theta_{0}\right) . \]

Also, when \(r(\beta)=\boldsymbol{R}^{\prime} \beta\) is a linear function of \(\beta\), then the Wald statistic simplifies to

\[ W=\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right)^{\prime}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\beta}} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) . \]

The Wald statistic \(W\) is a weighted Euclidean measure of the length of the vector \(\widehat{\theta}-\theta_{0}\). When \(q=1\) then \(W=T^{2}\), the square of the t-statistic, so hypothesis tests based on \(W\) and \(|T|\) are equivalent. The Wald statistic (9.6) is a generalization of the t-statistic to the case of multiple restrictions. As the Wald statistic is symmetric in the argument \(\widehat{\theta}-\theta_{0}\) it treats positive and negative alternatives symmetrically. Thus the inherent alternative is always two-sided.

As shown in Theorem 7.13, when \(\beta\) satisfies \(r(\beta)=\theta_{0}\) then \(W \underset{d}{\rightarrow} \chi_{q}^{2}\), a chi-square random variable with \(q\) degrees of freedom. Let \(G_{q}(u)\) denote the \(\chi_{q}^{2}\) distribution function. For a given significance level \(\alpha\) the asymptotic critical value \(c\) satisfies \(\alpha=1-G_{q}(c)\). For example, the \(5 %\) critical values for \(q=1, q=2\), and \(q=3\) are \(3.84,5.99\), and \(7.82\), respectively, and in general the level \(\alpha\) critical value can be calculated in MATLAB as chi2inv \((1-\alpha, q)\). An asymptotic test rejects \(\mathbb{M}_{0}\) in favor of \(\mathbb{M}_{1}\) if \(W>c\). As with t-tests, it is conventional to describe a Wald test as “significant” if \(W\) exceeds the \(5 %\) asymptotic critical value.

Theorem 9.2 Under Assumptions 7.2, 7.3, 7.4, and \(\mathbb{M}_{0}: \theta=\theta_{0} \in \mathbb{R}^{q}\), then \(W \vec{d}\) \(\chi_{q}^{2}\). For \(c\) satisfying \(\alpha=1-G_{q}(c), \mathbb{P}\left(W>c \mid \mathbb{H}_{0}\right) \longrightarrow \alpha\) so the test “Reject \(\mathbb{H}_{0}\) if \(W>c\)” has asymptotic size \(\alpha\).

Notice that the asymptotic distribution in Theorem \(9.2\) depends solely on \(q\), the number of restrictions being tested. It does not depend on \(k\), the number of parameters estimated.

The asymptotic p-value for \(W\) is \(p=1-G_{q}(W)\), and this is particularly useful when testing multiple restrictions. For example, if you write that a Wald test on eight restrictions ( \(q=8\) ) has the value \(W=\) \(11.2\) it is difficult for a reader to assess the magnitude of this statistic unless they have quick access to a statistical table or software. Instead, if you write that the p-value is \(p=0.19\) (as is the case for \(W=11.2\) and \(q=8\) ) then it is simple for a reader to interpret its magnitude as “insignificant”. To calculate the asymptotic p-value for a Wald statistic in MATLAB use the command \(1-\operatorname{ch} i 2 c d f(w, q)\).

Some packages (including Stata) and papers report \(F\) versions of Wald statistics. For any Wald statistic \(W\) which tests a \(q\)-dimensional restriction, the \(F\) version of the test is

\[ F=W / q . \]

When \(F\) is reported, it is conventional to use \(F_{q, n-k}\) critical values and \(\mathrm{p}\)-values rather than \(\chi_{q}^{2}\) values. The connection between Wald and F statistics is demonstrated in Section \(9.14\) where we show that when Wald statistics are calculated using a homoskedastic covariance matrix then \(F=W / q\) is identicial to the F statistic of (5.19). While there is no formal justification to using the \(F_{q, n-k}\) distribution for nonhomoskedastic covariance matrices, the \(F_{q, n-k}\) distribution provides continuity with the exact distribution theory under normality and is a bit more conservative than the \(\chi_{q}^{2}\) distribution. (Furthermore, the difference is small when \(n-k\) is moderately large.)

To implement a test of zero restrictions in Stata an easy method is to use the command test X1 X2 where X1 and X2 are the names of the variables whose coefficients are hypothesized to equal zero. The \(F\) version of the Wald statistic is reported using the covariance matrix calculated by the method specified in the regression command. A p-value is reported, calculated using the \(F_{q, n-k}\) distribution.

To illustrate, consider the empirical results presented in Table 4.1. The hypothesis “Union membership does not affect wages” is the joint restriction that both coefficients on “Male Union Member” and “Female Union Member” are zero. We calculate the Wald statistic for this joint hypothesis and find \(W=23\) (or \(F=12.5\) ) with a p-value of \(p=0.000\). Thus we reject the null hypothesis in favor of the alternative that at least one of the coefficients is non-zero. This does not mean that both coefficients are non-zero, just that one of the two is non-zero. Therefore examining both the joint Wald statistic and the individual t-statistics is useful for interpretation.

As a second example from the same regression, take the hypothesis that married status has no effect on mean wages for women. This is the joint restriction that the coefficients on “Married Female” and “Formerly Married Female” are zero. The Wald statistic for this hypothesis is \(W=6.4(F=3.2)\) with a p-value of \(0.04\). Such a p-value is typically called “marginally significant” in the sense that it is slightly smaller than \(0.05\).

The Wald statistic was proposed by Wald (1943).

9.10 Homoskedastic Wald Tests

If the error is known to be homoskedastic then it is appropriate to use the homoskedastic Wald statistic (7.38) which replaces \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}\) with the homoskedastic estimator \(\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{0}\). This statistic equals

\[ \begin{aligned} W^{0} &=\left(\widehat{\theta}-\theta_{0}\right)^{\prime}\left(\widehat{\boldsymbol{V}}_{\widehat{\theta}}^{0}\right)^{-1}\left(\widehat{\theta}-\theta_{0}\right) \\ &=\left(r(\widehat{\beta})-\theta_{0}\right)^{\prime}\left(\boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \widehat{\boldsymbol{R}}\right)^{-1}\left(r(\widehat{\beta})-\theta_{0}\right) / s^{2} . \end{aligned} \]

In the case of linear hypotheses \(\mathbb{M}_{0}: \boldsymbol{R}^{\prime} \beta=\theta_{0}\) we can write this as

\[ W^{0}=\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right)^{\prime}\left(\boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) / s^{2} . \]

We call \(W^{0}\) a homoskedastic Wald statistic as it is appropriate when the errors are conditionally homoskedastic.

When \(q=1\) then \(W^{0}=T^{2}\), the square of the t-statistic where the latter is computed with a homoskedastic standard error. Theorem 9.3 Under Assumptions \(7.2\) and 7.3, \(\mathbb{E}\left[e^{2} \mid X\right]=\sigma^{2}>0\), and \(\mathbb{M}_{0}: \theta=\) \(\theta_{0} \in \mathbb{R}^{q}\), then \(W^{0} \underset{d}{\longrightarrow} \chi_{q}^{2}\). For \(c\) satisfying \(\alpha=1-G_{q}(c), \mathbb{P}\left[W^{0}>c \mid \mathbb{H}_{0}\right] \longrightarrow \alpha\) so the test “Reject \(\mathbb{M}_{0}\) if \(W^{0}>c\)” has asymptotic size \(\alpha\).

9.11 Criterion-Based Tests

The Wald statistic is based on the length of the vector \(\widehat{\theta}-\theta_{0}\) : the discrepancy between the estimator \(\widehat{\theta}=r(\widehat{\beta})\) and the hypothesized value \(\theta_{0}\). An alternative class of tests is based on the discrepancy between the criterion function minimized with and without the restriction.

Criterion-based testing applies when we have a criterion function, say \(J(\beta)\) with \(\beta \in B\), which is minimized for estimation, and the goal is to test \(\mathbb{M}_{0}: \beta \in B_{0}\) versus \(\mathbb{M}_{1}: \beta \notin B_{0}\) where \(B_{0} \subset \beta\). Minimizing the criterion function over \(B\) and \(B_{0}\) we obtain the unrestricted and restricted estimators

\[ \begin{aligned} &\widehat{\beta}=\underset{\beta \in B}{\operatorname{argmin}} J(\beta) \\ &\widetilde{\beta}=\underset{\beta \in B_{0}}{\operatorname{argmin}} J(\beta) . \end{aligned} \]

The criterion-based statistic for \(\mathbb{H}_{0}\) versus \(\mathbb{H}_{1}\) is proportional to

\[ J=\min _{\beta \in B_{0}} J(\beta)-\min _{\beta \in B} J(\beta)=J(\widetilde{\beta})-J(\widehat{\beta}) . \]

The criterion-based statistic \(J\) is sometimes called a distance statistic, a minimum-distance statistic, or a likelihood-ratio-like statistic.

Since \(B_{0}\) is a subset of \(B, J(\widetilde{\beta}) \geq J(\widehat{\beta})\) and thus \(J \geq 0\). The statistic \(J\) measures the cost on the criterion of imposing the null restriction \(\beta \in B_{0}\).

9.12 Minimum Distance Tests

The minimum distance test is based on the minimum distance criterion (8.19)

\[ J(\beta)=n(\widehat{\beta}-\beta)^{\prime} \widehat{\boldsymbol{W}}(\widehat{\beta}-\beta) \]

with \(\widehat{\beta}\) the unrestricted least squares estimator. The restricted estimator \(\widetilde{\beta}_{\text {md }}\) minimizes (9.8) subject to \(\beta \in B_{0}\). Observing that \(J(\widehat{\beta})=0\), the minimum distance statistic simplifies to

\[ J=J\left(\widetilde{\beta}_{\mathrm{md}}\right)=n\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{md}}\right)^{\prime} \widehat{\boldsymbol{W}}\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{md}}\right) . \]

The efficient minimum distance estimator \(\widetilde{\beta}_{\mathrm{emd}}\) is obtained by setting \(\widehat{\boldsymbol{W}}=\widehat{\boldsymbol{V}}_{\beta}^{-1}\) in (9.8) and (9.9). The efficient minimum distance statistic for \(\mathbb{H}_{0}: \beta \in B_{0}\) is therefore

\[ J^{*}=n\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{emd}}\right)^{\prime} \widehat{\boldsymbol{V}}_{\beta}^{-1}\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{emd}}\right) . \]

Consider the class of linear hypotheses \(\mathbb{M}_{0}: \boldsymbol{R}^{\prime} \beta=\theta_{0}\). In this case we know from (8.25) that the efficient minimum distance estimator \(\widetilde{\beta}_{\mathrm{emd}}\) subject to the constraint \(\boldsymbol{R}^{\prime} \beta=\theta_{0}\) is

\[ \widetilde{\beta}_{\mathrm{emd}}=\widehat{\beta}-\widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) \]

and thus

\[ \widehat{\beta}-\widetilde{\beta}_{\mathrm{emd}}=\widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) . \]

Substituting into (9.10) we find

\[ \begin{aligned} J^{*} &=n\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right)^{\prime}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\right)^{-1} \boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{V}}_{\boldsymbol{\beta}}^{-1} \widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) \\ &=n\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right)^{\prime}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) \\ &=W, \end{aligned} \]

which is the Wald statistic (9.6).

Thus for linear hypotheses \(\mathbb{H}_{0}: \boldsymbol{R}^{\prime} \beta=\theta_{0}\), the efficient minimum distance statistic \(J^{*}\) is identical to the Wald statistic (9.6). For nonlinear hypotheses, however, the Wald and minimum distance statistics are different.

Newey and West (1987a) established the asymptotic null distribution of \(J^{*}\).

Theorem 9.4 Under Assumptions \(7.2,7.3,7.4\), and \(\mathbb{H}_{0}: \theta=\theta_{0} \in \mathbb{R}^{q}, J^{*} \underset{d}{\longrightarrow} \chi_{q}^{2}\).

Testing using the minimum distance statistic \(J^{*}\) is similar to testing using the Wald statistic \(W\). Critical values and p-values are computed using the \(\chi_{q}^{2}\) distribution. \(\mathbb{H}_{0}\) is rejected in favor of \(\mathbb{H}_{1}\) if \(J^{*}\) exceeds the level \(\alpha\) critical value, which can be calculated in MATLAB as chi2inv \((1-\alpha, q)\). The asymptotic pvalue is \(p=1-G_{q}\left(J^{*}\right)\). In MATLAB, use the command \(1-\operatorname{chi} 2 \mathrm{cdf}(\mathrm{J}, \mathrm{q})\).

We now demonstrate Theorem 9.4. The conditions of Theorem \(8.10\) hold, because \(\mathbb{H}_{0}\) implies Assumption 8.1. From (8.54) with \(\widehat{\boldsymbol{W}}=\widehat{\boldsymbol{V}}_{\beta}\), we see that

\[ \begin{aligned} \sqrt{n}\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{emd}}\right) &=\widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\left(\boldsymbol{R}_{n}^{* \prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\right)^{-1} \boldsymbol{R}_{n}^{* \prime} \sqrt{n}(\widehat{\beta}-\beta) \\ & \underset{d}{\longrightarrow} \boldsymbol{V}_{\beta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\beta} \boldsymbol{R}\right)^{-1} \boldsymbol{R}^{\prime} \mathrm{N}\left(0, \boldsymbol{V}_{\beta}\right)=\boldsymbol{V}_{\beta} \boldsymbol{R} Z \end{aligned} \]

where \(Z \sim \mathrm{N}\left(0,\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\beta} \boldsymbol{R}\right)^{-1}\right)\). Thus

\[ J^{*}=n\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{emd}}\right)^{\prime} \widehat{\boldsymbol{V}}_{\beta}^{-1}\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{emd}}\right) \underset{d}{\longrightarrow} Z^{\prime} \boldsymbol{R}^{\prime} \boldsymbol{V}_{\beta} \boldsymbol{V}_{\beta}^{-1} \boldsymbol{V}_{\beta} \boldsymbol{R} Z=Z^{\prime}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\beta} \boldsymbol{R}\right) Z=\chi_{q}^{2} \]

as claimed.

9.13 Minimum Distance Tests Under Homoskedasticity

If we set \(\widehat{\boldsymbol{W}}=\widehat{\boldsymbol{Q}}_{X X} / s^{2}\) in (9.8) we obtain the criterion (8.20)

\[ J^{0}(\beta)=n(\widehat{\beta}-\beta)^{\prime} \widehat{\boldsymbol{Q}}_{X X}(\widehat{\beta}-\beta) / s^{2} . \]

A minimum distance statistic for \(\mathbb{\Perp}_{0}: \beta \in B_{0}\) is

\[ J^{0}=\min _{\beta \in B_{0}} J^{0}(\beta) . \]

Equation (8.21) showed that \(\operatorname{SSE}(\beta)=n \widehat{\sigma}^{2}+s^{2} J^{0}(\beta)\). So the minimizers of \(\operatorname{SSE}(\beta)\) and \(J^{0}(\beta)\) are identical. Thus the constrained minimizer of \(J^{0}(\beta)\) is constrained least squares

\[ \widetilde{\beta}_{\text {cls }}=\underset{\beta \in B_{0}}{\operatorname{argmin}} J^{0}(\beta)=\underset{\beta \in B_{0}}{\operatorname{argmin}} \operatorname{SSE}(\beta) \]

and therefore

\[ J_{n}^{0}=J_{n}^{0}\left(\widetilde{\beta}_{\mathrm{cls}}\right)=n\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{cls}}\right)^{\prime} \widehat{\boldsymbol{Q}}_{X X}\left(\widehat{\beta}-\widetilde{\beta}_{\mathrm{cls}}\right) / s^{2} . \]

In the special case of linear hypotheses \(\mathbb{M}_{0}: \boldsymbol{R}^{\prime} \beta=\theta_{0}\), the constrained least squares estimator subject to \(\boldsymbol{R}^{\prime} \beta=\theta_{0}\) has the solution (8.9)

\[ \widetilde{\beta}_{\mathrm{cls}}=\widehat{\beta}-\widehat{\boldsymbol{Q}}_{X X}^{-1} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{Q}}_{X X}^{-1} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) \]

and solving we find

\[ J^{0}=n\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right)^{\prime}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{Q}}_{X X}^{-1} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\theta_{0}\right) / s^{2}=W^{0} . \]

This is the homoskedastic Wald statistic (9.7). Thus for testing linear hypotheses, homoskedastic minimum distance and Wald statistics agree.

For nonlinear hypotheses they disagree, but have the same null asymptotic distribution.

Theorem 9.5 Under Assumptions \(7.2\) and \(7.3, \mathbb{E}\left[e^{2} \mid X\right]=\sigma^{2}>0\), and \(\mathbb{M}_{0}: \theta=\) \(\theta_{0} \in \mathbb{R}^{q}\), then \(J^{0} \underset{d}{\longrightarrow} \chi_{q}^{2}\)

9.14 F Tests

In Section \(5.13\) we introduced the \(F\) test for exclusion restrictions in the normal regression model. In this section we generalize this test to a broader set of restrictions. Let \(B_{0} \subset \mathbb{R}^{k}\) be a constrained parameter space which imposes \(q\) restrictions on \(\beta\).

Let \(\widehat{\beta}_{\text {ols }}\) be the unrestricted least squares estimator and let \(\widehat{\sigma}^{2}=n^{-1} \sum_{i=1}^{n}\left(Y_{i}-X_{i}^{\prime} \widehat{\beta}_{\text {ols }}\right)^{2}\) be the associated estimator of \(\sigma^{2}\). Let \(\widetilde{\beta}_{\text {cls }}\) be the CLS estimator (9.11) satisfying \(\widetilde{\beta}_{\text {cls }} \in B_{0}\) and let \(\widetilde{\sigma}^{2}=n^{-1} \sum_{i=1}^{n}\left(Y_{i}-X_{i}^{\prime} \widetilde{\beta}_{\text {cls }}\right)^{2}\) be the associated estimator of \(\sigma^{2}\). The \(F\) statistic for testing \(\mathbb{M}_{0}: \beta \in B_{0}\) is

\[ F=\frac{\left(\tilde{\sigma}^{2}-\widehat{\sigma}^{2}\right) / q}{\widehat{\sigma}^{2} /(n-k)} . \]

We can alternatively write

\[ F=\frac{\operatorname{SSE}\left(\widetilde{\beta}_{\mathrm{cls}}\right)-\operatorname{SSE}\left(\widehat{\beta}_{\mathrm{ols}}\right)}{q s^{2}} \]

where \(\operatorname{SSE}(\beta)=\sum_{i=1}^{n}\left(Y_{i}-X_{i}^{\prime} \beta\right)^{2}\) is the sum-of-squared errors.

This shows that \(F\) is a criterion-based statistic. Using (8.21) we can also write \(F=J^{0} / q\), so the \(F\) statistic is identical to the homoskedastic minimum distance statistic divided by the number of restrictions \(q\).

As we discussed in the previous section, in the special case of linear hypotheses \(\mathbb{M}_{0}: \boldsymbol{R}^{\prime} \beta=\theta_{0}, J^{0}=\) \(W^{0}\). It follows that in this case \(F=W^{0} / q\). Thus for linear restrictions the \(F\) statistic equals the homoskedastic Wald statistic divided by \(q\). It follows that they are equivalent tests for \(\mathbb{H}_{0}\) against \(\mathbb{H}_{1}\). Theorem 9.6 For tests of linear hypotheses \(\mathbb{H}_{0}: \boldsymbol{R}^{\prime} \beta=\theta_{0} \in \mathbb{R}^{q}\), the \(\mathrm{F}\) statistic equals \(F=W^{0} / q\) where \(W^{0}\) is the homoskedastic Wald statistic. Thus under 7.2, \(\mathbb{E}\left[e^{2} \mid X\right]=\sigma^{2}>0\), and \(\mathbb{M}_{0}: \theta=\theta_{0}\), then \(F \underset{d}{\longrightarrow} \chi_{q}^{2} / q\).

When using an \(F\) statistic it is conventional to use the \(F_{q, n-k}\) distribution for critical values and pvalues. Critical values are given in MATLAB by \(f\) inv \((1-\alpha, q, n-k)\) and \(p\)-values by \(1-f c d f(F, q, n-k)\). Alternatively, the \(\chi_{q}^{2} / q\) distribution can be used, using chi2inv \((1-\alpha, q) / q\) and \(1-\operatorname{chi} 2 c d f(F * q, q)\), respectively. Using the \(F_{q, n-k}\) distribution is a prudent small sample adjustment which yields exact answers if the errors are normal and otherwise slightly increasing the critical values and p-values relative to the asymptotic approximation. Once again, if the sample size is small enough that the choice makes a difference then probably we shouldn’t be trusting the asymptotic approximation anyway!

An elegant feature about (9.12) or (9.13) is that they are directly computable from the standard output from two simple OLS regressions, as the sum of squared errors (or regression variance) is a typical printed output from statistical packages and is often reported in applied tables. Thus \(F\) can be calculated by hand from standard reported statistics even if you don’t have the original data (or if you are sitting in a seminar and listening to a presentation!).

If you are presented with an \(F\) statistic (or a Wald statistic, as you can just divide by \(q\) ) but don’t have access to critical values, a useful rule of thumb is to know that for large \(n\) the \(5 %\) asymptotic critical value is decreasing as \(q\) increases and is less than 2 for \(q \geq 7\).

A word of warning: In many statistical packages when an OLS regression is estimated an “F-statistic” is automatically reported even though no hypothesis test was requested. What the package is reporting is an \(F\) statistic of the hypothesis that all slope coefficients \({ }^{1}\) are zero. This was a popular statistic in the early days of econometric reporting when sample sizes were very small and researchers wanted to know if there was “any explanatory power” to their regression. This is rarely an issue today as sample sizes are typically sufficiently large that this \(F\) statistic is nearly always highly significant. While there are special cases where this \(F\) statistic is useful these cases are not typical. As a general rule there is no reason to report this \(F\) statistic.

9.15 Hausman Tests

Hausman (1978) introduced a general idea about how to test a hypothesis \(\mathbb{M}_{0}\). If you have two estimators, one which is efficient under \(\mathbb{M}_{0}\) but inconsistent under \(\mathbb{H}_{1}\), and another which is consistent under \(\mathbb{H}_{1}\), then construct a test as a quadratic form in the differences of the estimators. In the case of testing a hypothesis \(\mathbb{M}_{0}: r(\beta)=\theta_{0}\) let \(\widehat{\beta}_{\text {ols }}\) denote the unconstrained least squares estimator and let \(\widetilde{\beta}_{\text {emd }}\) denote the efficient minimum distance estimator which imposes \(r(\beta)=\theta_{0}\). Both estimators are consistent under \(\mathbb{M}_{0}\) but \(\widetilde{\beta}_{\mathrm{emd}}\) is asymptotically efficient. Under \(\mathbb{H}_{1}, \widehat{\beta}_{\mathrm{ols}}\) is consistent for \(\beta\) but \(\widetilde{\beta}_{\mathrm{emd}}\) is inconsistent. The difference has the asymptotic distribution

\[ \sqrt{n}\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right) \underset{d}{\longrightarrow} \mathrm{N}\left(0, \boldsymbol{V}_{\beta} \boldsymbol{R}\left(\boldsymbol{R}^{\prime} \boldsymbol{V}_{\beta} \boldsymbol{R}\right)^{-1} \boldsymbol{R}^{\prime} \boldsymbol{V}_{\beta}\right) . \]

Let \(\boldsymbol{A}^{-}\)denote the Moore-Penrose generalized inverse. The Hausman statistic for \(\mathbb{H}_{0}\) is

\[ \begin{aligned} & H=\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right)^{\prime} \widehat{\operatorname{avar}}\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right)^{-}\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right) \\ & =n\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right)^{\prime}\left(\widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\right)^{-1} \widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta}\right)^{-}\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right) . \end{aligned} \]

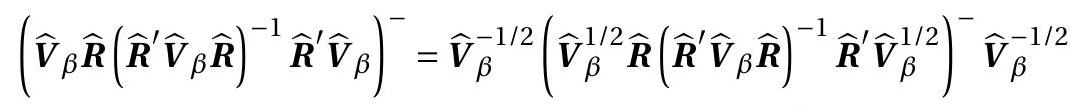

\({ }^{1}\) All coefficients except the intercept. The matrix \(\widehat{\boldsymbol{V}}_{\beta}^{1 / 2} \widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\right)^{-1} \widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta}^{1 / 2}\) idempotent so its generalized inverse is itself. (See Section A.11.) It follows that

\[ \begin{aligned} & =\widehat{\boldsymbol{V}}_{\beta}^{-1 / 2} \widehat{\boldsymbol{V}}_{\beta}^{1 / 2} \widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\right)^{-1} \widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta}^{1 / 2} \widehat{\boldsymbol{V}}_{\beta}^{-1 / 2} \\ & =\widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\right)^{-1} \widehat{\boldsymbol{R}}^{\prime} . \end{aligned} \]

Thus the Hausman statistic is

\[ H=n\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right)^{\prime} \widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\right)^{-1} \widehat{\boldsymbol{R}}^{\prime}\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right) . \]

In the context of linear restrictions, \(\widehat{\boldsymbol{R}}=\boldsymbol{R}\) and \(\boldsymbol{R}^{\prime} \widetilde{\beta}=\theta_{0}\) so the statistic takes the form

\[ H=n\left(\boldsymbol{R}^{\prime} \widehat{\beta}_{\mathrm{ols}}-\theta_{0}\right)^{\prime} \widehat{\boldsymbol{R}}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}_{\mathrm{ols}}-\theta_{0}\right), \]

which is precisely the Wald statistic. With nonlinear restrictions \(W\) and \(H\) can differ.

In either case we see that that the asymptotic null distribution of the Hausman statistic \(H\) is \(\chi_{q}^{2}\), so the appropriate test is to reject \(\mathbb{M}_{0}\) in favor of \(\mathbb{H}_{1}\) if \(H>c\) where \(c\) is a critical value taken from the \(\chi_{q}^{2}\) distribution.

Theorem 9.7 For general hypotheses the Hausman test statistic is

\[ H=n\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right)^{\prime} \widehat{\boldsymbol{R}}\left(\widehat{\boldsymbol{R}}^{\prime} \widehat{\boldsymbol{V}}_{\beta} \widehat{\boldsymbol{R}}\right)^{-1} \widehat{\boldsymbol{R}}^{\prime}\left(\widehat{\beta}_{\mathrm{ols}}-\widetilde{\beta}_{\mathrm{emd}}\right) . \]

Under Assumptions \(7.2,7.3,7.4\), and \(\mathbb{M}_{0}: r(\beta)=\theta_{0} \in \mathbb{R}^{q}, H \underset{d}{\longrightarrow} \chi_{q}^{2}\)

9.16 Score Tests

Score tests are traditionally derived in likelihood analysis but can more generally be constructed from first-order conditions evaluated at restricted estimates. We focus on the likelihood derivation.

Given the log likelihood function \(\ell_{n}\left(\beta, \sigma^{2}\right)\), a restriction \(\mathbb{H}_{0}: r(\beta)=\theta_{0}\), and restricted estimators \(\widetilde{\beta}\) and \(\widetilde{\sigma}^{2}\), the score statistic for \(\mathbb{H}_{0}\) is defined as

\[ S=\left(\frac{\partial}{\partial \beta} \ell_{n}\left(\widetilde{\beta}, \widetilde{\sigma}^{2}\right)\right)^{\prime}\left(-\frac{\partial^{2}}{\partial \beta \partial \beta^{\prime}} \ell_{n}\left(\widetilde{\beta}, \widetilde{\sigma}^{2}\right)\right)^{-1}\left(\frac{\partial}{\partial \beta} \ell_{n}\left(\widetilde{\beta}, \widetilde{\sigma}^{2}\right)\right) . \]

The idea is that if the restriction is true then the restricted estimators should be close to the maximum of the log-likelihood where the derivative is zero. However if the restriction is false then the restricted estimators should be distant from the maximum and the derivative should be large. Hence small values of \(S\) are expected under \(\mathbb{H}_{0}\) and large values under \(\mathbb{H}_{1}\). Tests of \(\mathbb{M}_{0}\) reject for large values of \(S\).

We explore the score statistic in the context of the normal regression model and linear hypotheses \(r(\beta)=\boldsymbol{R}^{\prime} \beta\). Recall that in the normal regression log-likelihood function is

\[ \ell_{n}\left(\beta, \sigma^{2}\right)=-\frac{n}{2} \log \left(2 \pi \sigma^{2}\right)-\frac{1}{2 \sigma^{2}} \sum_{i=1}^{n}\left(Y_{i}-X_{i}^{\prime} \beta\right)^{2} . \]

The constrained MLE under linear hypotheses is constrained least squares

\[ \begin{aligned} \widetilde{\beta} &=\widehat{\beta}-\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\left[\boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\right]^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\boldsymbol{c}\right) \\ \widetilde{e}_{i} &=Y_{i}-X_{i}^{\prime} \widetilde{\beta} \\ \widetilde{\sigma}^{2} &=\frac{1}{n} \sum_{i=1}^{n} \widetilde{e}_{i}^{2} \end{aligned} \]

We can calculate that the derivative and Hessian are

\[ \begin{aligned} \frac{\partial}{\partial \beta} \ell_{n}\left(\widetilde{\beta}, \widetilde{\sigma}^{2}\right) &=\frac{1}{\widetilde{\sigma}^{2}} \sum_{i=1}^{n} X_{i}\left(Y_{i}-X_{i}^{\prime} \widetilde{\beta}\right)=\frac{1}{\widetilde{\sigma}^{2}} \boldsymbol{X}^{\prime} \widetilde{\boldsymbol{e}} \\ -\frac{\partial^{2}}{\partial \beta \partial \beta^{\prime}} \ell_{n}\left(\widetilde{\beta}, \widetilde{\sigma}^{2}\right) &=\frac{1}{\widetilde{\sigma}^{2}} \sum_{i=1}^{n} X_{i} X_{i}^{\prime}=\frac{1}{\widetilde{\sigma}^{2}} \boldsymbol{X}^{\prime} \boldsymbol{X} \end{aligned} \]

Since \(\widetilde{\boldsymbol{e}}=\boldsymbol{Y}-\boldsymbol{X} \widetilde{\beta}\) we can further calculate that

\[ \begin{aligned} \frac{\partial}{\partial \beta} \ell_{n}\left(\widetilde{\beta}, \widetilde{\sigma}^{2}\right) &=\frac{1}{\widetilde{\sigma}^{2}}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)\left(\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{X}^{\prime} \boldsymbol{Y}-\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{X}^{\prime} \boldsymbol{X} \widetilde{\beta}\right) \\ &=\frac{1}{\widetilde{\sigma}^{2}}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)(\widehat{\beta}-\widetilde{\beta}) \\ &=\frac{1}{\widetilde{\sigma}^{2}} \boldsymbol{R}\left[\boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\right]^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\beta}-\boldsymbol{c}\right) . \end{aligned} \]

Together we find that

\[ S=\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{\beta}}-\boldsymbol{c}\right)^{\prime}\left(\boldsymbol{R}^{\prime}\left(\boldsymbol{X}^{\prime} \boldsymbol{X}\right)^{-1} \boldsymbol{R}\right)^{-1}\left(\boldsymbol{R}^{\prime} \widehat{\boldsymbol{\beta}}-\boldsymbol{c}\right) / \widetilde{\sigma}^{2} . \]

This is identical to the homoskedastic Wald statistic with \(s^{2}\) replaced by \(\widetilde{\sigma}^{2}\). We can also write \(S\) as a monotonic transformation of the \(F\) statistic, as

\[ S=n \frac{\left(\widetilde{\sigma}^{2}-\widehat{\sigma}^{2}\right)}{\widetilde{\sigma}^{2}}=n\left(1-\frac{\widehat{\sigma}^{2}}{\widetilde{\sigma}^{2}}\right)=n\left(1-\frac{1}{1+\frac{q}{n-k} F}\right) . \]

The test “Reject \(\mathbb{M}_{0}\) for large values of \(S\)” is identical to the test “Reject \(\mathbb{M}_{0}\) for large values of \(F\)” so they are identical tests. Since for the normal regression model the exact distribution of \(F\) is known, it is better to use the \(F\) statistic with \(F\) p-values.

In more complicated settings a potential advantage of score tests is that they are calculated using the restricted parameter estimates \(\widetilde{\beta}\) rather than the unrestricted estimates \(\widehat{\beta}\). Thus when \(\widetilde{\beta}\) is relatively easy to calculate there can be a preference for score statistics. This is not a concern for linear restrictions.

More generally, score and score-like statistics can be constructed from first-order conditions evaluated at restricted parameter estimates. Also, when test statistics are constructed using covariance matrix estimators which are calculated using restricted parameter estimates (e.g. restricted residuals) then these are often described as score tests.

An example of the latter is the Wald-type statistic

\[ W=\left(r(\widehat{\beta})-\theta_{0}\right)^{\prime}\left(\widehat{\boldsymbol{R}}^{\prime} \widetilde{\boldsymbol{V}}_{\widehat{\beta}} \widehat{\boldsymbol{R}}\right)^{-1}\left(r(\widehat{\beta})-\theta_{0}\right) \]

where the covariance matrix estimate \(\widetilde{\boldsymbol{V}}_{\widehat{\beta}}\) is calculated using the restricted residuals \(\widetilde{e}_{i}=Y_{i}-X_{i}^{\prime} \widetilde{\beta}\). This may be a good choice when \(\beta\) and \(\theta\) are high-dimensional as in this context there may be worry that the estimator \(\widehat{\boldsymbol{V}}_{\widehat{\beta}}\) is imprecise.

9.17 Problems with Tests of Nonlinear Hypotheses

While the \(t\) and Wald tests work well when the hypothesis is a linear restriction on \(\beta\), they can work quite poorly when the restrictions are nonlinear. This can be seen by a simple example introduced by Lafontaine and White (1986). Take the model \(Y \sim \mathrm{N}\left(\beta, \sigma^{2}\right.\) ) and consider the hypothesis \(\mathbb{H}_{0}: \beta=1\). Let \(\widehat{\beta}\) and \(\widehat{\sigma}^{2}\) be the sample mean and variance of \(Y\). The standard Wald statistic to test \(\mathbb{H}_{0}\) is

\[ W=n \frac{(\widehat{\beta}-1)^{2}}{\widehat{\sigma}^{2}} . \]

Notice that \(\mathbb{M}_{0}\) is equivalent to the hypothesis \(\mathbb{M}_{0}(s): \beta^{s}=1\) for any positive integer \(s\). Letting \(r(\beta)=\) \(\beta^{s}\), and noting \(\boldsymbol{R}=s \beta^{s-1}\), we find that the Wald statistic to test \(\mathbb{M}_{0}(s)\) is

\[ W_{s}=n \frac{\left(\widehat{\beta}^{s}-1\right)^{2}}{\widehat{\sigma}^{2} s^{2} \widehat{\beta}^{2 s-2}} . \]

While the hypothesis \(\beta^{s}=1\) is unaffected by the choice of \(s\), the statistic \(W_{s}\) varies with \(s\). This is an unfortunate feature of the Wald statistic.

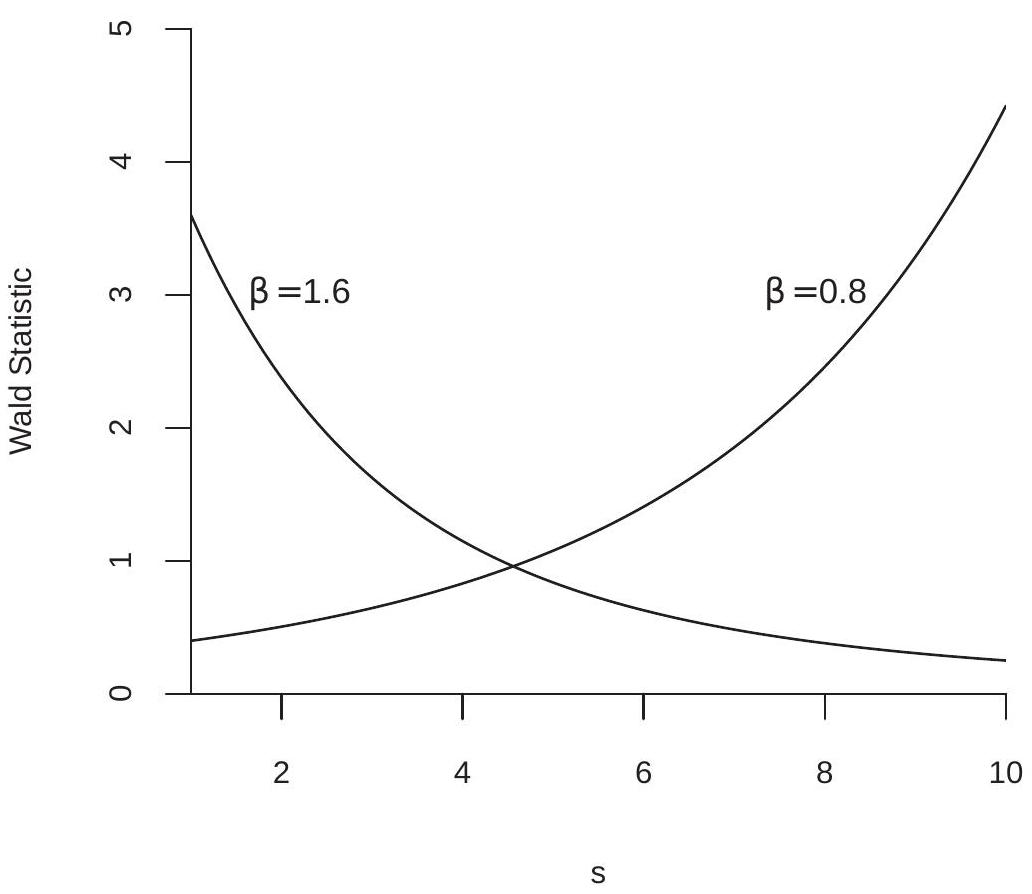

To demonstrate this effect, we have plotted in Figure \(9.2\) the Wald statistic \(W_{s}\) as a function of \(s\), setting \(n / \widehat{\sigma}^{2}=10\). The increasing line is for the case \(\widehat{\beta}=0.8\). The decreasing line is for the case \(\widehat{\beta}=1.6\). It is easy to see that in each case there are values of \(s\) for which the test statistic is significant relative to asymptotic critical values, while there are other values of \(s\) for which the test statistic is insignificant. This is distressing because the choice of \(s\) is arbitrary and irrelevant to the actual hypothesis.

Our first-order asymptotic theory is not useful to help pick \(s\), as \(W_{s} \underset{d}{\longrightarrow} \chi_{1}^{2}\) under \(\mathbb{H}_{0}\) for any \(s\). This is a context where Monte Carlo simulation can be quite useful as a tool to study and compare the exact distributions of statistical procedures in finite samples. The method uses random simulation to create artificial datasets to which we apply the statistical tools of interest. This produces random draws from the statistic’s sampling distribution. Through repetition, features of this distribution can be calculated.

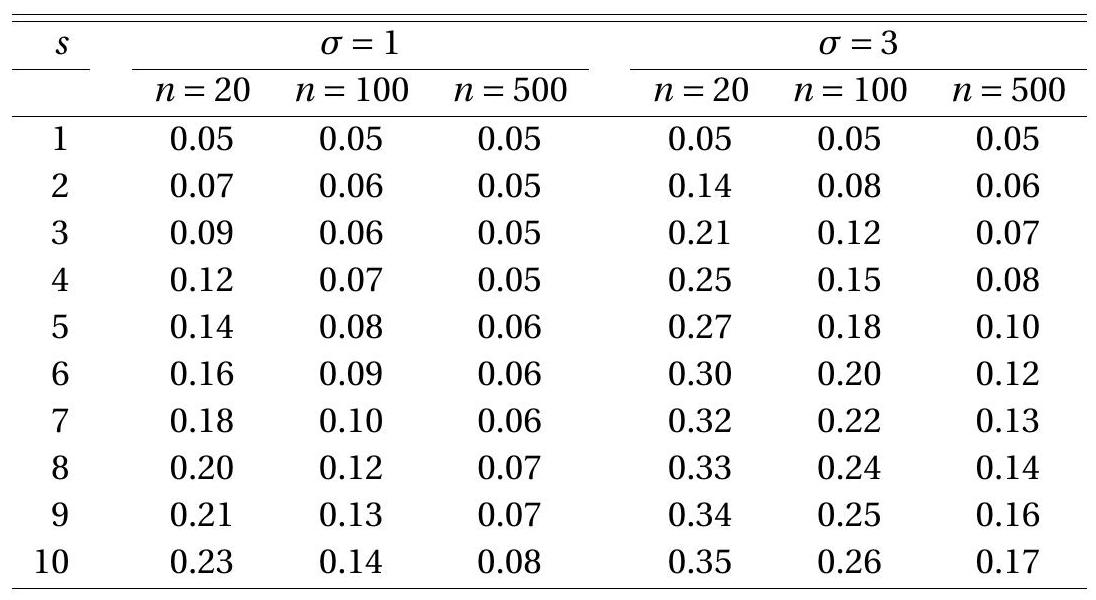

In the present context of the Wald statistic, one feature of importance is the Type I error of the test using the asymptotic \(5 %\) critical value \(3.84\) - the probability of a false rejection, \(\mathbb{P}\left[W_{s}>3.84 \mid \beta=1\right]\). Given the simplicity of the model this probability depends only on \(s, n\), and \(\sigma^{2}\). In Table \(9.2\) we report the results of a Monte Carlo simulation where we vary these three parameters. The value of \(s\) is varied from 1 to \(10, n\) is varied among 20,100 , and 500 , and \(\sigma\) is varied among 1 and 3 . The table reports the simulation estimate of the Type I error probability from 50,000 random samples. Each row of the table corresponds to a different value of \(s\) - and thus corresponds to a particular choice of test statistic. The second through seventh columns contain the Type I error probabilities for different combinations of \(n\) and \(\sigma\). These probabilities are calculated as the percentage of the 50,000 simulated Wald statistics \(W_{s}\) which are larger than 3.84. The null hypothesis \(\beta^{s}=1\) is true so these probabilities are Type I error.

To interpret the table remember that the ideal Type I error probability is \(5 %(.05)\) with deviations indicating distortion. Type I error rates between \(3 %\) and \(8 %\) are considered reasonable. Error rates above \(10 %\) are considered excessive. Rates above \(20 %\) are unacceptable. When comparing statistical procedures we compare the rates row by row, looking for tests for which rejection rates are close to \(5 %\) and rarely fall outside of the \(3 %-8 %\) range. For this particular example the only test which meets this criterion is the conventional \(W=W_{1}\) test. Any other \(s\) leads to a test with unacceptable Type I error probabilities.

In Table \(9.2\) you can also see the impact of variation in sample size. In each case the Type I error probability improves towards \(5 %\) as the sample size \(n\) increases. There is, however, no magic choice of \(n\) for which all tests perform uniformly well. Test performance deteriorates as \(s\) increases which is not surprising given the dependence of \(W_{s}\) on \(s\) as shown in Figure 9.2.

Figure 9.2: Wald Statistic as a Function of \(s\)

In this example it is not surprising that the choice \(s=1\) yields the best test statistic. Other choices are arbitrary and would not be used in practice. While this is clear in this particular example, in other examples natural choices are not obvious and the best choices may be counter-intuitive.

This point can be illustrated through an example based on Gregory and Veall (1985). Take the model

\[ \begin{aligned} Y &=\beta_{0}+X_{1} \beta_{1}+X_{2} \beta_{2}+e \\ \mathbb{E}[X e] &=0 \end{aligned} \]

and the hypothesis \(\mathbb{M}_{0}: \frac{\beta_{1}}{\beta_{2}}=\theta_{0}\) where \(\theta_{0}\) is a known constant. Equivalently, define \(\theta=\beta_{1} / \beta_{2}\) so the hypothesis can be stated as \(\mathbb{M}_{0}: \theta=\theta_{0}\).

Let \(\widehat{\beta}=\left(\widehat{\beta}_{0}, \widehat{\beta}_{1}, \widehat{\beta}_{2}\right)\) be the least squares estimator of \((9.14)\), let \(\widehat{\boldsymbol{V}}_{\widehat{\beta}}\) be an estimator of the covariance matrix for \(\widehat{\beta}\) and set \(\widehat{\theta}=\widehat{\beta}_{1} / \widehat{\beta}_{2}\). Define

\[ \widehat{\boldsymbol{R}}_{1}=\left(\begin{array}{c} 0 \\ \frac{1}{\widehat{\beta}_{2}} \\ -\frac{\widehat{\beta}_{1}}{\widehat{\beta}_{2}^{2}} \end{array}\right) \]

Table 9.2: Type I Error Probability of Asymptotic \(5 % W(s)\) Test

Rejection frequencies from 50,000 simulated random samples.

so that the standard error for \(\widehat{\theta}\) is \(s(\widehat{\theta})=\left(\widehat{\boldsymbol{R}}_{1}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\beta}} \widehat{\boldsymbol{R}}_{1}\right)^{1 / 2}\). In this case a t-statistic for \(\mathbb{M}_{0}\) is

\[ T_{1}=\frac{\left(\frac{\widehat{\beta}_{1}}{\widehat{\beta}_{2}}-\theta_{0}\right)}{s(\widehat{\theta})} . \]

An alternative statistic can be constructed through reformulating the null hypothesis as

\[ \mathbb{M}_{0}: \beta_{1}-\theta_{0} \beta_{2}=0 . \]

A t-statistic based on this formulation of the hypothesis is

\[ T_{2}=\frac{\widehat{\beta}_{1}-\theta_{0} \widehat{\beta}_{2}}{\left(\boldsymbol{R}_{2}^{\prime} \widehat{\boldsymbol{V}}_{\widehat{\beta}} \boldsymbol{R}_{2}\right)^{1 / 2}} \]

where

\[ \boldsymbol{R}_{2}=\left(\begin{array}{c} 0 \\ 1 \\ -\theta_{0} \end{array}\right) \text {. } \]

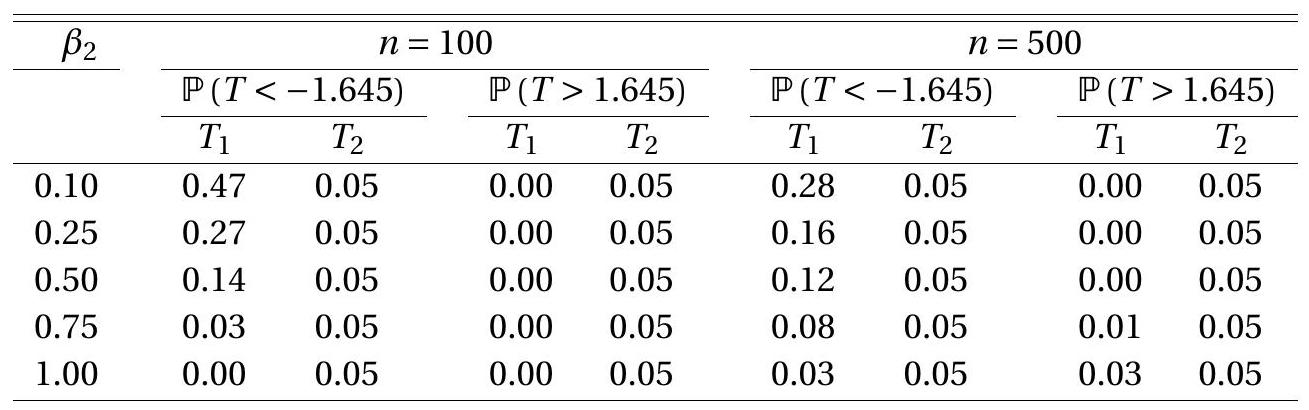

To compare \(T_{1}\) and \(T_{2}\) we perform another simple Monte Carlo simulation. We let \(X_{1}\) and \(X_{2}\) be mutually independent \(\mathrm{N}(0,1)\) variables, \(e\) be an independent \(\mathrm{N}\left(0, \sigma^{2}\right)\) draw with \(\sigma=3\), and normalize \(\beta_{0}=0\) and \(\beta_{1}=1\). This leaves \(\beta_{2}\) as a free parameter along with sample size \(n\). We vary \(\beta_{2}\) among \(0.1\), \(0.25,0.50,0.75\), and \(1.0\) and \(n\) among 100 and 500 .

The one-sided Type I error probabilities \(\mathbb{P}[T<-1.645]\) and \(\mathbb{P}[T>1.645]\) are calculated from 50,000 simulated samples. The results are presented in Table 9.3. Ideally, the entries in the table should be \(0.05\). However, the rejection rates for the \(T_{1}\) statistic diverge greatly from this value, especially for small values of \(\beta_{2}\). The left tail probabilities \(\mathbb{P}\left[T_{1}<-1.645\right]\) greatly exceed \(5 %\), while the right tail probabilities \(\mathbb{P}\left[T_{1}>1.645\right]\) are close to zero in most cases. In contrast, the rejection rates for the \(T_{2}\) statistic are invariant to the value of \(\beta_{2}\) and equal \(5 %\) for both sample sizes. The implication of Table \(9.3\) is that the two t-ratios have dramatically different sampling behavior.

The common message from both examples is that Wald statistics are sensitive to the algebraic formulation of the null hypothesis. Table 9.3: Type I Error Probability of Asymptotic 5% t-tests

Rejection frequencies from 50,000 simulated random samples.

A simple solution is to use the minimum distance statistic \(J\) which equals \(W\) with \(r=1\) in the first example, and \(\left|T_{2}\right|\) in the second example. The minimum distance statistic is invariant to the algebraic formulation of the null hypothesis so is immune to this problem. Whenever possible, the Wald statistic should not be used to test nonlinear hypotheses.

Theoretical investigations of these issues include Park and Phillips (1988) and Dufour (1997).

9.18 Monte Carlo Simulation

In Section \(9.17\) we introduced the method of Monte Carlo simulation to illustrate the small sample problems with tests of nonlinear hypotheses. In this section we describe the method in more detail.

Recall, our data consist of observations \(\left(Y_{i}, X_{i}\right)\) which are random draws from a population distribution \(F\). Let \(\theta\) be a parameter and let \(T=T\left(\left(Y_{1}, X_{1}\right), \ldots,\left(Y_{n}, X_{n}\right), \theta\right)\) be a statistic of interest, for example an estimator \(\widehat{\theta}\) or a t-statistic \((\widehat{\theta}-\theta) / s(\widehat{\theta})\). The exact distribution of \(T\) is

\[ G(u, F)=\mathbb{P}[T \leq u \mid F] . \]

While the asymptotic distribution of \(T\) might be known, the exact (finite sample) distribution \(G\) is generally unknown.

Monte Carlo simulation uses numerical simulation to compute \(G(u, F)\) for selected choices of \(F\). This is useful to investigate the performance of the statistic \(T\) in reasonable situations and sample sizes. The basic idea is that for any given \(F\) the distribution function \(G(u, F)\) can be calculated numerically through simulation. The name Monte Carlo derives from the Mediterranean gambling resort where games of chance are played.

The method of Monte Carlo is simple to describe. The researcher chooses \(F\) (the distribution of the pseudo data) and the sample size \(n\). A “true” value of \(\theta\) is implied by this choice, or equivalently the value \(\theta\) is selected directly by the researcher which implies restrictions on \(F\).

Then the following experiment is conducted by computer simulation:

\(n\) independent random pairs \(\left(Y_{i}^{*}, X_{i}^{*}\right), i=1, \ldots, n\), are drawn from the distribution \(F\) using the computer’s random number generator.

The statistic \(T=T\left(\left(Y_{1}^{*}, X_{1}^{*}\right), \ldots,\left(Y_{n}^{*}, X_{n}^{*}\right), \theta\right)\) is calculated on this pseudo data.

For step 1, computer packages have built-in random number procedures including \(U[0,1]\) and \(N(0,1)\). From these most random variables can be constructed. (For example, a chi-square can be generated by sums of squares of normals.) For step 2, it is important that the statistic be evaluated at the “true” value of \(\theta\) corresponding to the choice of \(F\).