Chapter 16: Non-Stationary Time Series

16 Non-Stationary Time Series

16.1 Introduction

At the beginning of Chapter 14 we displayed a set of economic time series. Several (real GDP, exchange rate, interest rate, crude oil price) did not appear to be stationary. In Section \(14.23\) we introduced the non-stationary unit root process which is an autoregressive process with an autoregressive root at unity. Plots of two simulated examples (Figure 14.5) displayed time-paths with wandering behavior similar to the economic time series. This suggests that perhaps a unit root autoregression is a reasonable model for these series. In this chapter we explore econometric estimation and inference for non-stationary unit root time series.

16.2 Partial Sum Process and Functional Convergence

Take the multivariate random walk

\[ Y_{t}=Y_{t-1}+e_{t} \]

where \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a vector MDS with finite covariance matrix \(\Sigma\). By back-substitution we find \(Y_{t}=Y_{0}+S_{t}\) where

\[ S_{t}=\sum_{i=1}^{t} e_{i} \]

is the cumulative sum of the errors up to time \(t\). We call \(S_{t}\) a partial sum process.

The time index \(t\) ranges from 0 to \(n\). Write \({ }^{1} t=\lfloor n r\rfloor\) as a fraction \(r\) of the sample size \(n\). This allows us to write \(S_{\lfloor n r\rfloor}\) as a function of the fraction \(r\). Divide by \(\sqrt{n}\) so that the variance is stabilized. With these modifications we define the standardized partial sum process.

\[ S_{n}(r)=\frac{1}{\sqrt{n}} S_{\lfloor n r\rfloor}=\frac{1}{\sqrt{n}} \sum_{t=1}^{\lfloor n r\rfloor} e_{t} . \]

The random process \(S_{n}(r)\) is a scaled version of the time-series \(Y_{t}\) and is a function of the sample fraction \(r \in[0,1]\). It is a stochastic process meaning that it is a random function. For any finite \(n, S_{n}(r)\) is a step function with \(n\) jumps.

Let’s consider the behavior of \(S_{n}(r)\) as \(n\) increases. It’s largest discrete jump equals \(n^{-1 / 2} \max _{1 \leq t \leq n}\left\|e_{t}\right\|\). Theorem \(6.15\) shows that this is \(o_{p}(1)\). This suggests that the jumps in \(S_{n}(r)\) asymptotically vanish. We would like to find its asymptotic distribution. We expect the limit distribution to be a stochastic process as well.

\({ }^{1}\) The notation \(\lfloor x\rfloor\) means “round down to the nearest integer”. To do so we need to define the asymptotic distribution of a random function. The primary tool is the functional central limit theorem (FCLT) which is a component of empirical process theory (Chapter 18 of Probability and Statistics for Economists). It turns out that the FCLT depends on how we measure the difference between two functions. The most commonly used measure is the uniform metric. On the space of functions from \([0,1]\) to \(\mathbb{R}^{m}\) it is

\[ \rho\left(v_{1}, v_{2}\right)=\sup _{0 \leq r \leq 1}\left\|v_{1}(r)-v_{2}(r)\right\| . \]

Convergence in distribution for random processes (e.g. Definition \(18.6\) of Probability and Statistics for Economists) is defined with respect to a specific metric. While we don’t repeat the details here the important consequence is that continuity is defined with respect to this metric and this impacts applications such as the continuous mapping theorem.

The Functional Central Limit Theorem (Theorem \(18.9\) of Probability and Statistics for Economists) states that \(S_{n}(r) \underset{d}{\longrightarrow} S(r)\) as a function over \(r \in[0,1]\) if two conditions hold:

- The limit distributions of \(S_{n}(r)\) coincide with those of \(S(r)\).

- \(S_{n}(r)\) is asymptotically equicontinuous.

The first condition means that for any fixed \(r_{1}, \ldots, r_{m},\left(S_{n}\left(r_{1}\right), \ldots, S_{n}\left(r_{m}\right)\right) \underset{d}{\longrightarrow}\left(S\left(r_{1}\right), \ldots, S\left(r_{m}\right)\right)\). The second condition is technical but essentially requires that \(S_{n}(r)\) is approximately continuous with respect to the uniform metric in large samples.

We now characterize the limit distributions of \(S_{n}(r)\). There are three important properties.

- \(S_{n}(0)=0\).

- For any \(r, S_{n}(r) \underset{d}{\longrightarrow} \mathrm{N}(0, r \Sigma)\).

- For \(r_{1}<r_{2}, S_{n}\left(r_{1}\right)\) and \(S_{n}\left(r_{2}\right)-S_{n}\left(r_{1}\right)\) are asymptotically independent.

The first property follows from the definition of \(S_{n}(r)\). For the second, set \(N=\lfloor n r\rfloor\). For \(r>0, N \rightarrow \infty\) as \(n \rightarrow \infty\). The MDS CLT (Theorem 14.11) implies that

\[ S_{n}(r)=\sqrt{\frac{\lfloor n r\rfloor}{n}} \frac{1}{\sqrt{N}} \sum_{t=1}^{N} e_{t} \underset{d}{\longrightarrow} \sqrt{r} \mathrm{~N}(0, \Sigma)=\mathrm{N}(0, r \Sigma) \]

as claimed. For the third property the assumption that \(e_{t}\) is a MDS implies that \(S_{n}\left(r_{1}\right)\) and \(S_{n}\left(r_{2}\right)-S_{n}\left(r_{1}\right)\) are uncorrelated. An extension of the above previous asymptotic argument shows that they are jointly asymptotically normal with a zero covariance and hence are asymptotically independent.

The above three limit properties of \(S_{n}(r)\) are asymptotic versions of the definition of Brownian motion. Definition 16.1 A vector Brownian motion \(B(r)\) for \(r \geq 0\) is defined by the properties:

- \(B(0)=0\).

- For any \(r, B(r) \sim \mathrm{N}(0, r \Sigma)\).

- For any \(r_{1} \leq r_{2}, B\left(r_{1}\right)\) and \(B\left(r_{2}\right)-B\left(r_{1}\right)\) are independent.

We call \(\Sigma\) the covariance matrix of \(B(r)\). If \(\Sigma=\boldsymbol{I}_{m}\) we say that \(B(r)\) is a standard

Brownian motion and denote it as \(W(r)\). It satisfies \(B(r)=\Sigma^{1 / 2} W(r)\).

A Brownian motion \(B(r)\) is continuous with probability one but is nowhere differentiable. In physics, Brownian motion is used to describe the movement of particles. The wandering properties of particles suspended in liquid was described as far back as the Roman poet Lucretius (On the Nature of the Universe, 55 BCE). The name Brownian motion credits the pioneering observational studies of botanist Robert Brown. The mathematical process is often called a Wiener process crediting the work of Norbert Wiener.

The above discussion has shown that the limit distributions of the partial sum process \(S_{n}(r)\) coincide with those of Brownian motion \(B(r)\). In Section \(16.22\) we demonstrate that \(S_{n}(r)\) is asymptotically equicontinuous. Together with the FCLT this establishes that \(S_{n}(r)\) converges in distribution to \(B(r)\).

Theorem 16.1 Weak Convergence of Partial Sum Process If \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a strictly stationary and ergodic MDS and \(\Sigma=\mathbb{E}\left[e_{t} e_{t}^{\prime}\right]<\infty\) then as a function over \(r \in[0,1], S_{n}(r) \underset{d}{\longrightarrow} B(r)\), a Brownian motion with covariance matrix \(\Sigma\).

We extend Theorem \(16.1\) to serially correlated processes in Section 16.4.

Let’s connect our analysis of \(S_{n}(r)\) with the random walk series \(Y_{t}\). Since \(Y_{t}=Y_{0}+S_{t}\), we find

\[ \frac{1}{\sqrt{n}} Y_{\lfloor n r\rfloor}=S_{n}(r)+\frac{1}{\sqrt{n}} Y_{0} . \]

The second term is \(o_{p}(1)\) when \(Y_{0}\) is finite with probability one. Thus under this latter assumption \(n^{-1 / 2} Y_{\lfloor n r\rfloor}=S_{n}(r)+o_{p}(1) \underset{d}{\longrightarrow}\) B. For simplicity we will frequently implicitly assume \(Y_{0}=0\) to simplify the notation, as the case with \(Y_{0} \neq 0\) does not fundamentally change the analysis.

16.3 Beveridge-Nelson Decomposition

The previous section focused on random walk processes. A unit root process more broadly is an autoregression with a single root at unity, which means that the differenced process \(\Delta Y_{t}\) is serially correlated but stationary.

Beveridge and Nelson (1981) introduced a clever way to decompose a unit root process into a permanent (random walk) component and a transitory (stationary) component. This allows a straightforward generalization of Theorem \(16.1\) to incorporate serial correlation.

Recall that a stationary process has a Wold representation \(\Delta Y_{t}=\Theta(\mathrm{L}) e_{t}\) where \(\Theta(z)=\sum_{j=0}^{\infty} \Theta_{j} z^{j}\). Assumption 16.1 \(\Delta Y_{t}\) is strictly stationary with no deterministic component, mean zero, and finite covariance matrix \(\Sigma\). The coefficients of its Wold representation \(\Delta Y_{t}=\Theta(\mathrm{L}) e_{t}\) satisfy

\[ \sum_{j=0}^{\infty}\left\|\sum_{\ell=j+1}^{\infty} \Theta_{\ell}\right\|<\infty . \]

The condition (16.1) on the coefficients is stronger than absolute summability but holds (for example) if \(\Delta Y_{t}\) is generated by a stationary AR process. It is similar to the condition used for the autoregressive Wold representation (Theorem 14.19).

Consider the following factorization of the lag polynomial

\[ \Theta(z)=\Theta(1)+(1-z) \Theta^{*}(z) \]

where \(\Theta(1)=\sum_{\ell=0}^{\infty} \Theta_{\ell}\) and \(\Theta^{*}(z)\) is the lag polynomial

\[ \begin{aligned} \Theta^{*}(z) &=\sum_{j=0}^{\infty} \Theta_{j}^{*} z^{j} \\ \Theta_{j}^{*} &=-\sum_{\ell=j+1}^{\infty} \Theta_{\ell} . \end{aligned} \]

At the end of this section we demonstrate (16.2)-(16.4). Assumption (16.1) is the same as \(\sum_{j=0}^{\infty}\left\|\Theta_{j}^{*}\right\|<\infty\), which implies that \(U_{t}=\Theta^{*}(\mathrm{~L}) e_{t}\) is convergent, strictly stationary, and ergodic (by Theorem 15.4).

The factorization (16.2) means that we can write

\[ \Delta Y_{t}=\xi_{t}+U_{t}-U_{t-1} . \]

where \(\xi_{t}=\Theta(1) e_{t}\). This decomposes \(\Delta Y_{t}\) into the innovation \(e_{t}\) plus the first-difference of the stochastic process \(U_{t}\). Summing the differences we find

\[ Y_{t}=S_{t}+U_{t}+V_{0} \]

where \(S_{t}=\sum_{i=1}^{t} \xi_{t}\) and \(V_{0}=Y_{0}-U_{0}\). This decomposes the unit root process \(Y_{t}\) into the random walk \(S_{t}\), the stationary process \(U_{t}\), and an initial condition \(V_{0}\).

We have established the following.

\[ \begin{aligned} &\text { Theorem 16.2 Under Assumption } 16.1 \text { then (16.2)-(16.4) holds with } \\ &\begin{array}{l} \sum_{j=0}^{\infty}\left\|\Theta_{j}^{*}\right\|<\infty \text {. The process } \Delta Y_{t} \text { satisfies } \\ \qquad \Delta Y_{t}=\xi_{t}+U_{t}-U_{t-1} \\ \text { and } \\ \qquad Y_{t}=S_{t}+U_{t}+V_{0} \end{array} \\ &\text { where } S_{t}=\sum_{i=1}^{t} \xi_{t} \text { is a random walk, } \xi_{t} \text { is white noise with variance } \Theta(1) \Sigma \Theta(1)^{\prime}, \\ &U_{t} \text { is strictly stationary, and } V_{0} \text { is an initial condition. } \end{aligned} \]

Beveridge and Nelson (1981) called \(S_{t}\) the permanent (trend) component of \(Y_{t}\) and \(U_{t}\) the transitory component. They called \(S_{t}\) the permanent component as it determines the long-run behavior of \(Y_{t}\).

As an example, take the MA(1) case \(\Delta Y_{t}=e_{t}+\Theta_{1} e_{t-1}\). This has decomposition \(\Delta Y_{t}=\left(\boldsymbol{I}_{m}+\Theta_{1}\right) e_{t}-\) \(\Theta_{1}\left(e_{t}-e_{t-1}\right)\). In this case \(U_{t}=-\Theta_{1} e_{t}\).

The Beveridge-Nelson decomposition of a series is unique but it is not the only way to construct a permanent/transitory decomposition. The Beveridge-Nelson decomposition has the characteristic that the innovations driving the permanent and transitory components \(S_{t}\) and \(U_{t}\) are perfectly correlated. Other decompositions do not use this restriction.

We close this section by verifying (16.2)-(16.4). Observe that the right-side of (16.2) is

\[ \begin{aligned} \sum_{j=0}^{\infty} \Theta_{j}-\sum_{j=0}^{\infty} \sum_{\ell=j+1}^{\infty} \Theta_{\ell} z^{j}(1-z) &=\sum_{j=0}^{\infty} \Theta_{j}-\sum_{j=0}^{\infty} \sum_{\ell=j+1}^{\infty} \Theta_{\ell} z^{j}+\sum_{j=0}^{\infty} \sum_{\ell=j+1}^{\infty} \Theta_{\ell} z^{j+1} \\ &=\Theta_{0}-\sum_{j=1}^{\infty} \sum_{\ell=j+1}^{\infty} \Theta_{\ell} z^{j}+\sum_{j=1}^{\infty} \sum_{\ell=j}^{\infty} \Theta_{\ell} z^{j} \\ &=\Theta_{0}+\sum_{j=1}^{\infty} \Theta_{j} z^{j} \end{aligned} \]

which is \(\Theta(z)\) as claimed.

16.4 Functional CLT

Theorem 16.1 showed that a random walk process converges in distribution to a Brownian motion. We now extend this result to the case of a unit root process with correlated differences.

Under Assumption \(16.1\) a unit root process can be written as \(Y_{t}=S_{t}+U_{t}+V_{0}\) where \(S_{t}=\sum_{i=1}^{t} \xi_{t}\). Define the scaled processes \(Z_{n}(r)=n^{-1 / 2} Y_{\lfloor n r\rfloor}\) and \(S_{n}(r)=n^{-1 / 2} S_{\lfloor n r\rfloor}\). We find

\[ Z_{n}(r)=S_{n}(r)+\frac{1}{\sqrt{n}} V_{0}+\frac{1}{\sqrt{n}} U_{\lfloor n r\rfloor} . \]

If the errors \(e_{t}\) are a MDS with covariance matrix \(\Sigma\) then by Theorem 16.1, \(S_{n}(r) \underset{d}{\longrightarrow} B(r)\), a vector Brownian motion with covariance matrix \(\Omega=\Theta(1) \Sigma \Theta(1)^{\prime}\). The initial condition \(n^{-1 / 2} V_{0}\) is \(o_{p}(1)\). The third term \(n^{-1 / 2} U_{\lfloor n r\rfloor}\) is \(o_{p}(1)\) if \(\sup _{1 \leq t \leq n}\left|\frac{1}{\sqrt{n}} U_{t}\right|=o_{p}(1)\), which holds under Theorem \(6.15\) if \(U_{t}\) has a finite variance. We now show that this holds under Assumption 16.1. The latter implies that \(\sum_{j=0}^{\infty}\left\|\Theta_{j}^{*}\right\|<\infty\), as discussed before Theorem 16.2. This implies

\[ \left\|\operatorname{var}\left[U_{t}\right]\right\|=\left\|\sum_{j=0}^{\infty} \Theta_{j}^{*} \Sigma \Theta_{j}^{* \prime}\right\| \leq\|\Sigma\| \sum_{j=0}^{\infty}\left\|\Theta_{j}^{*}\right\|^{2} \leq\|\Sigma\| \max _{j}\left\|\Theta_{j}^{*}\right\| \sum_{j=0}^{\infty}\left\|\Theta_{j}^{*}\right\|<\infty \]

as needed.

Together we find that

\[ Z_{n}(r)=S_{n}(r)+o_{p}(1) \underset{d}{\longrightarrow} B(r) . \]

The variance of the limiting process is \(\Omega=\Theta(1) \Sigma \Theta(1)^{\prime}\). This is the “long-run variance” of \(\Delta Y_{t}\). Theorem 16.3 Under Assumption \(16.1\) and in addition \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a MDS with covariance matrix \(\Sigma\), then as a function over \(r \in[0,1], Z_{n}(r) \underset{d}{\rightarrow} B(r)\) a vector Brownian motion with covariance matrix \(\Omega\).

Our derivation used the assumption that the linear projection errors are a MDS. This is not essential for the basic result; the FCLT holds under a variety of dependence conditions. A flexible version can be stated using mixing conditions.

Theorem 16.4 If \(\Delta Y_{t}\) is strictly stationary, \(\mathbb{E}\left[\Delta Y_{t}\right]=0\), with mixing coefficients \(\alpha(\ell)\), and for some \(r>2\), E \(\left\|\Delta Y_{t}\right\|^{r}<\infty\) and \(\sum_{\ell=1}^{\infty} \alpha(\ell)^{1-2 / r}<\infty\), then as a function over \(r \in[0,1], Z_{n}(r) \underset{d}{\longrightarrow} B(r)\), a vector Brownian motion with covariance matrix

\[ \Omega=\sum_{\ell=-\infty}^{\infty} \mathbb{E}\left[\Delta Y_{t} \Delta Y_{t-\ell}\right] \]

For a proof see Davidson (1994, Theorems \(31.5\) and 31.15). Interestingly, Theorem \(16.4\) employs exactly the same assumptions as for Theorem \(14.15\) (the CLT for mixing processes). This means that we obtain the stronger result (the FCLT) without stronger assumptions.

The covariance matrix \(\Omega\) appearing in (16.5) is the long-run covariance matrix of \(\Delta Y_{t}\) as defined in Section 14.13. It is useful to observe that we can decompose the long-run variance as \(\Omega=\Sigma+\Lambda+\Lambda^{\prime}\) where \(\Sigma=\operatorname{var}\left[\Delta Y_{t}\right]\) and

\[ \Lambda=\sum_{\ell=1}^{\infty} \mathbb{E}\left[\Delta Y_{t} \Delta Y_{t-\ell}^{\prime}\right] . \]

This decomposes the long-run variance of \(\Delta Y_{t}\) into its static (one-period) variance \(\Sigma\) and a sum of covariances \(\Lambda\). The matrix \(\Lambda\) is not symmetric.

16.5 Orders of Integration

Take a univariate series \(Y_{t}\). Theorems \(16.3\) and \(16.4\) showed that if \(\Delta Y_{t}\) is stationary and mean zero then the level process \(Y_{t}\), suitably scaled, is asymptotically a Brownian motion with variance \(\omega^{2}\). For this theory to be meaningful this variance should be strictly positive definite. To see why this is a potential restriction suppose that \(Y_{t}=a(\mathrm{~L}) e_{t}\) where the coefficients of \(a(z)\) are absolutely convergent and \(e_{t}\) is i.i.d. \(\left(0, \sigma^{2}\right)\). Then \(\Delta Y_{t}=b(\mathrm{~L}) e_{t}\) where \(b(z)=(1-z) a(z)\) so \(\omega^{2}=b(1)^{2} \sigma^{2}=0\). That is, \(\Delta Y_{t}\) has a longrun variance of 0 . We call the process \(\Delta Y_{t}\) over-differenced, since \(Y_{t}\) is strictly stationary and does not require differencing to achieve stationarity.

To meaningfully differentiate between processes which require differencing to achieve stationarity we use the following definition.

16.5.1 Definition 16.2 Order of Integration.

- \(Y_{t} \in \mathbb{R}\) is Integrated of Order 0 , written \(I(0)\), if \(Y_{t}\) is weakly stationary with positive long-run variance.

- \(Y_{t} \in \mathbb{R}\) is Integrated of Order \(d\), written \(I(d)\), if \(u_{t}=\Delta^{d} Y_{t}\) is \(I(0)\). \(I(0)\) processes are stationary processes which are not over-differenced. \(I(1)\) processes include random walks and unit root processes. \(I(2)\) processes require double differencing to achieve stationarity. \(I(-1)\) processes are stationary but their cumulative sums are also stationary, and are therefore overdifferenced stationary processes. Many macroeconomic time series in log-levels are potentially \(I(1)\) processes. Economic time series which are potentially \(I(2)\) are log price indices, for their first difference (inflation rates) are potentially non-stationary proceses. In this textbook we focus on integer-valued orders of integration but fractional \(d\) are also well-defined. In most applications economists presume that economic series are either \(I(0)\) or \(I(1)\) and often use the shorthand “integrated” to refer to \(I(1)\) series.

The long-run variance of ARMA processes is straightforward to calculate. As we have seen, if \(\Delta Y_{t}=\) \(b(\mathrm{~L}) e_{t}\) where \(e_{t}\) is white noise with variance \(\sigma^{2}\), then \(\omega^{2}=b(1)^{2} \sigma^{2}\). Now suppose \(a(\mathrm{~L}) \Delta Y_{t}=e_{t}\) where \(a(z)\) is invertible. Then \(b(z)=a(z)^{-1}\) and \(\omega^{2}=\sigma^{2} / a(1)^{2}\). For an ARMA process \(a(\mathrm{~L}) \Delta Y_{t}=b(\mathrm{~L}) e_{t}\) with invertible \(a(z)\), then \(\omega^{2}=\sigma^{2} b(1)^{2} / a(1)^{2}\). Hence, if \(\Delta Y_{t}\) satisfies the ARMA process \(a(\mathrm{~L}) \Delta Y_{t}=b(\mathrm{~L}) e_{t}\) then \(Y_{t}\) is \(I(1)\) if \(a(z)\) is invertible and \(b(1) \neq 0\).

Consider vector processes. The long-run covariance matrix of \(\Delta Y_{t}=\Theta(\mathrm{L}) e_{t}\) is \(\Omega=\Theta(1) \Sigma \Theta(1)^{\prime}\). The long-run covariance matrix of \(\boldsymbol{A}(\mathrm{L}) \Delta Y_{t}=e_{t}\) is \(\Omega=\boldsymbol{A}(1)^{-1} \Sigma \boldsymbol{A}(1)^{-1 \prime}\). It is conventional to describe the vector \(\Delta Y_{t}\) as \(I(0)\) if each element of \(\Delta Y_{t}\) is \(I(0)\) but this allows its covariance matrix to be singular. To exclude the latter we introduce the following.

Definition 16.3 The vector process \(Y_{t}\) is full rank \(I(0)\) if its long-run covariance matrix \(\Omega\) is positive definite.

16.6 Means, Local Means, and Trends

Theorem \(16.4\) shows that \(Z_{n}(r) \underset{d}{\longrightarrow} B(r)\). The continuous mapping theorem shows that if a function \(f(x)\) is continuous \({ }^{2}\) then \(f\left(Z_{n}\right) \underset{d}{\longrightarrow} f(B)\). This can be used to obtain the asymptotic distribution of many statistics of interest. Simple examples are \(Z_{n}(r)^{2} \underset{d}{\longrightarrow} B(r)^{2}\) and \(\int_{0}^{1} Z_{n}(r) d r \underset{d}{\longrightarrow} \int_{0}^{1} B(r) d r\). The latter produces the asymptotic distribution for the sample mean as we now show.

Let \(\bar{Y}_{n}=n^{-1} \sum_{t=1}^{n} Y_{t}\) be the sample mean. For simplicity assume \(Y_{0}=0\). Note that for \(r \in\left[\frac{t}{n}, \frac{t+1}{n}\right)\),

\[ \frac{1}{n^{1 / 2}} Y_{t}=Z_{n}(r)=n \int_{t / n}^{(t+1) / n} Z_{n}(r) d r . \]

Taking the average for \(t=0\) to \(n-1\) we find

\[ \frac{1}{n^{1 / 2}} \bar{Y}_{n}=\frac{1}{n^{3 / 2}} \sum_{t=0}^{n-1} Y_{t}=\sum_{t=0}^{n-1} \int_{t / n}^{(t+1) / n} Z_{n}(r) d r=\int_{0}^{1} Z_{n}(r) d r . \]

This is the integral (or average) of \(Z_{n}(r)\) over \([0,1]\).

The continuous mapping theorem can be applied \({ }^{3}\). The above expression converges in distribution to the random variable \(\int_{0}^{1} B(r) d r\). This is the average of the Brownian motion over \([0,1]\).

\({ }^{2}\) With respect to the uniform metric \(\rho\).

\({ }^{3}\) The integral \(f(g)=\int_{0}^{1} g(r) d r\) is a continuous function of \(g\) with respect to the uniform metric. (Small changes in \(g\) result in small changes in \(f\).) Now consider sub-sample means. Let \(\bar{Y}_{1 n}=(n / 2)^{-1} \sum_{t=0}^{n / 2-1} Y_{t}\) and \(\bar{Y}_{2 n}=(n / 2)^{-1} \sum_{t=n / 2}^{n-1} Y_{t}\) be the sample means on the first-half and second-half of the sample, respectively. By a similar analysis as for the full-sample mean

\[ \begin{aligned} &\frac{1}{n^{1 / 2}} \bar{Y}_{1 n}=\frac{2}{n^{3 / 2}} \sum_{t=0}^{n / 2-1} Y_{t}=2 \int_{0}^{1 / 2} Z_{n}(r) d r \underset{\mathrm{c}}{2} \int_{0}^{1 / 2} B(r) d r \\ &\frac{1}{n^{1 / 2}} \bar{Y}_{2 n}=\frac{2}{n^{3 / 2}} \sum_{t=n / 2}^{n-1} Y_{t}=2 \int_{1 / 2}^{1} Z_{n}(r) d r \underset{\mathrm{d}}{\longrightarrow} \int_{1 / 2}^{1} B(r) d r \end{aligned} \]

which are the averages of \(B(r)\) over the regions \([0,1 / 2]\) and \([1 / 2,1]\). These are distinct random variables. This gives rise to the prediction that if \(Y_{t}\) is a unit root process, sample averages will not be constant (even in large samples) and will vary across subsamples.

Furthermore, observe that the limit distributions were obtained after dividing by \(n^{1 / 2}\). This means that without this standardization the sample mean would not be bounded in probability. This implies that the sample mean can be (randomly) large. This leads to the rather peculiar property that sample means will be large, random, and non-informative about population parameters. This means that interpreting simple statistics such as means is treacherous when the series may be a unit root process.

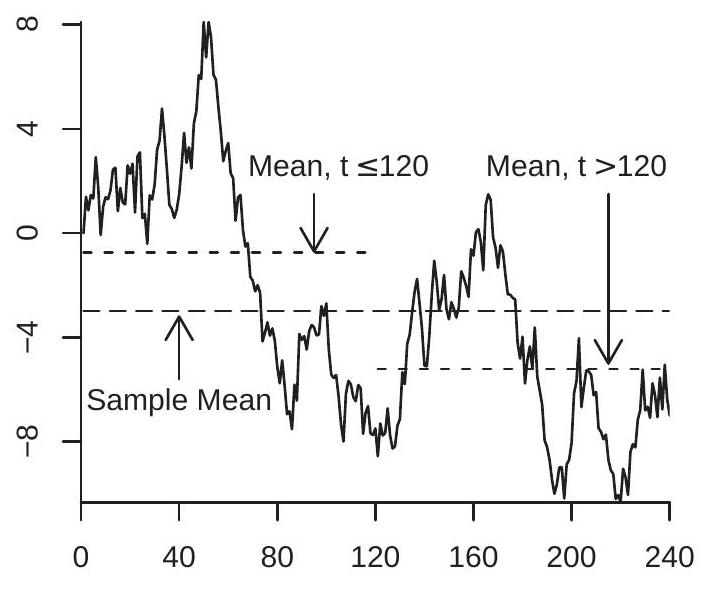

Fitted Means

Fitted Trend

Figure 16.1: Random Walk with Fitted Mean, Sub-Sample Means, and Trend

To illustrate, Figure 16.1(a) displays a simulated random walk with \(n=240\) observations. Also plotted is the sample mean \(\bar{Y}_{n}=-2.98\), along with the sub-sample means \(\bar{Y}_{1 n}=-0.75\) and \(\bar{Y}_{2 n}=-5.21\). As predicted, the mean and sub-sample means are large, variable, and uninformative regarding the population mean.

Now consider a linear regression of \(Y_{t}\) on a linear time trend. The model for estimation is

\[ Y_{t}=\beta_{0}+\beta_{1} t+e_{t}=X_{t}^{\prime} \beta+e_{t} \]

where \(X_{t}=(1, t)^{\prime}\). Again for simplicity assume that \(Y_{0}=0\). Take the least squares estimator \(\widehat{\beta}\). Theorem \(14.36\) shows that

\[ \begin{gathered} \frac{1}{n^{2}} \sum_{t=1}^{n} t \rightarrow \int_{0}^{1} r d r=\frac{1}{2} \\ \frac{1}{n^{3}} \sum_{t=1}^{n} t^{2} \rightarrow \int_{0}^{1} r^{2} d r=\frac{1}{3} . \end{gathered} \]

Define \(D_{n}=\left[\begin{array}{ll}1 & 0 \\ 0 & n\end{array}\right]\). We calculate that

\[ D_{n}^{-1} \frac{1}{n} \sum_{t=1}^{n} X_{t} X_{t}^{\prime} D_{n}^{-1}=\left[\begin{array}{cc} \frac{1}{n} \sum_{t=1}^{n} 1 & \frac{1}{n^{2}} \sum_{t=1}^{n} t \\ \frac{1}{n^{2}} \sum_{t=1}^{n} t & \frac{1}{n^{3}} \sum_{t=1}^{n} t^{2} \end{array}\right] \rightarrow\left[\begin{array}{cc} 1 & \int_{0}^{1} r d r \\ \int_{0}^{1} r d r & \int_{0}^{1} r^{2} d r \end{array}\right]=\int_{0}^{1} X(r) X(r)^{\prime} d r \]

where \(X(r)=(1, r)\).

An application of the continuous mapping theorem with Theorem \(16.1\) yields

\[ D_{n}^{-1} \frac{1}{n^{3 / 2}} \sum_{t=1}^{n} X_{t} Y_{t}=\int_{0}^{1} X(r) Z_{n}(r) d r \underset{d}{\longrightarrow} \int_{0}^{1} X(r) B(r) d r . \]

Together we obtain

\[ \begin{aligned} D_{n} n^{-1 / 2} \widehat{\beta} &=D_{n} n^{-1 / 2}\left(\sum_{t=1}^{n} X_{t} X_{t}^{\prime}\right)^{-1}\left(\sum_{t=1}^{n} X_{t} Y_{t}\right) \\ &=\left(D_{n}^{-1} \frac{1}{n} \sum_{t=1}^{n} X_{t} X_{t}^{\prime} D_{n}^{-1}\right)^{-1}\left(D_{n}^{-1} \frac{1}{n^{3 / 2}} \sum_{t=1}^{n} X_{t} Y_{t}\right) \\ & \underset{d}{\longrightarrow}\left(\int_{0}^{1} X(r) X(r)^{\prime} d r\right)^{-1}\left(\int_{0}^{1} X(r) B(r) d r\right) . \end{aligned} \]

This shows that the estimator \(\widehat{\beta}\) has an asymptotic distribution which is a transformation of the Brownian motion \(B(r)\). For compactness we often write the final expression as \(\left(\int_{0}^{1} X X^{\prime}\right)^{-1}\left(\int_{0}^{1} X B\right)\).

To illustrate, Figure 16.1(b) displays the random walk from panel (a) along with a fitted trend line. The fitted trend appears large and substantial. However it is purely random, a feature only of this specific realization, is uninformative about the underlying parameters, and is dangerously misleading for prediction.

16.7 Demeaning and Detrending

A common preliminary step in time series analysis is demeaning (subtracting off a mean) and detrending (subtracting off a linear trend). With stationary processes this does not affect asymptotic inference. In contrast, an important property of unit root processes is that their behavior is altered by these transformations.

Take demeaning. The demeaned version of \(Y_{t}\) is \(Y_{t}^{*}=Y_{t}-\bar{Y}_{n}\). An important observation is that \(Y_{t}^{*}\) is invariant to the initial condition \(Y_{0}\), so without loss of generality we simply assume \(Y_{0}=0\).

The normalized process is

\[ Z_{n}^{*}(r)=\frac{1}{\sqrt{n}} Y_{\lfloor n r\rfloor}-\frac{1}{\sqrt{n}} \bar{Y}_{n}=Z_{n}(r)-Z_{n}(1) \underset{d}{\longrightarrow} B(r)-\int_{0}^{1} B \stackrel{\text { def }}{=} B^{*}(r) . \]

\(B^{*}(r)\) is demeaned Brownian motion. It has the property that \(\int_{0}^{1} B^{*}(r) d r=0\).

Take linear detrending. Based on least squares estimation of a linear trend the detrended series is \(Y_{t}^{* *}=Y_{t}-X_{t}^{\prime} \widehat{\beta}\) where \(X_{t}=(1, t)^{\prime}\). Like the demeaned series the detrended series is invariant to \(Y_{0}\). The associated normalized process is

\[ \begin{aligned} & Z_{n}^{* *}(r)=\frac{1}{\sqrt{n}} Y_{\lfloor n r\rfloor}-\frac{1}{\sqrt{n}} X_{\lfloor n r\rfloor}^{\prime} \widehat{\beta} \\ & =Z_{n}(r)-X(\lfloor n r\rfloor / n)^{\prime} D_{n} \frac{1}{\sqrt{n}} \widehat{\beta} \\ & \underset{d}{\longrightarrow} B(r)-X(r)^{\prime}\left(\int_{0}^{1} X X^{\prime}\right)^{-1}\left(\int_{0}^{1} X B\right) \stackrel{\text { def }}{=} B^{* *}(r) . \end{aligned} \]

\(B^{* *}(r)\) is the continuous-time residual of the Brownian motion \(B(r)\) projected orthogonal to \(X(r)=\) \((1, r)^{\prime}\). We call \(B^{* *}(r)\) detrended Brownian motion.

There is another method of detrending through first differencing. Suppose that \(Y_{t}=\beta_{0}+\beta_{1} t+Z_{t}\). The first difference is \(\Delta Y_{t}=\beta_{1}+\Delta Z_{t}\). An estimator of \(\beta_{1}\) is the sample mean of \(\Delta Y_{t}\) :

\[ \overline{\Delta Y}_{n}=\frac{1}{n} \sum_{t=1}^{n} \Delta Y_{t}=\frac{Y_{n}-Y_{0}}{n} . \]

The normalization \(Z_{0}=0\) implies \(Y_{0}=\beta_{0}\) so an estimator of \(\beta_{0}\) is \(Y_{0}\). The detrended version of \(Y_{t}\) is \(\widetilde{Y}_{t}=Y_{t}-Y_{0}-(t / n)\left(Y_{n}-Y_{0}\right)\). The associated normalized process is

\[ \widetilde{Z}_{n}(r)=Z_{n}(r)-\frac{\lfloor n r\rfloor}{n} Z_{n}(1) \underset{d}{\longrightarrow} B(r)-r B(1) \stackrel{\text { def }}{=} V(r) . \]

\(V(r)\) is called a Brownian Bridge or a tied-down Brownian motion. It has the property that \(V(0)=V(1)=\) 0 . It is also a detrended version of \(B(r)\) but is distinct from the linearly detrended version \(B^{*}(r)\).

We summarize the findings in the following theorem.

Theorem 16.5 Under the conditions of either Theorem \(16.3\) or Theorem 16.4, then as \(n \rightarrow \infty\)

- \(Z_{n}^{*}(r) \underset{d}{\longrightarrow} B^{*}(r)\)

- \(Z_{n}^{* *}(r) \underset{d}{\longrightarrow} B^{* *}(r)\)

- \(\widetilde{Z}_{n}(r) \underset{d}{\longrightarrow} V(r)\)

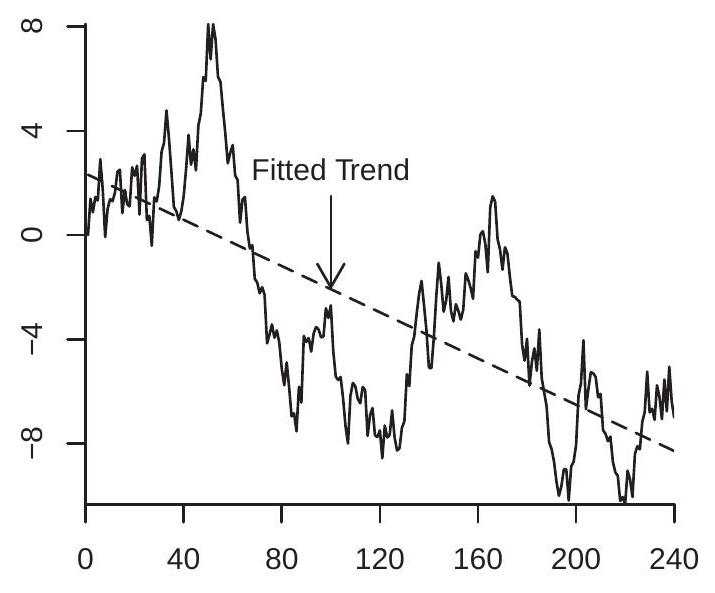

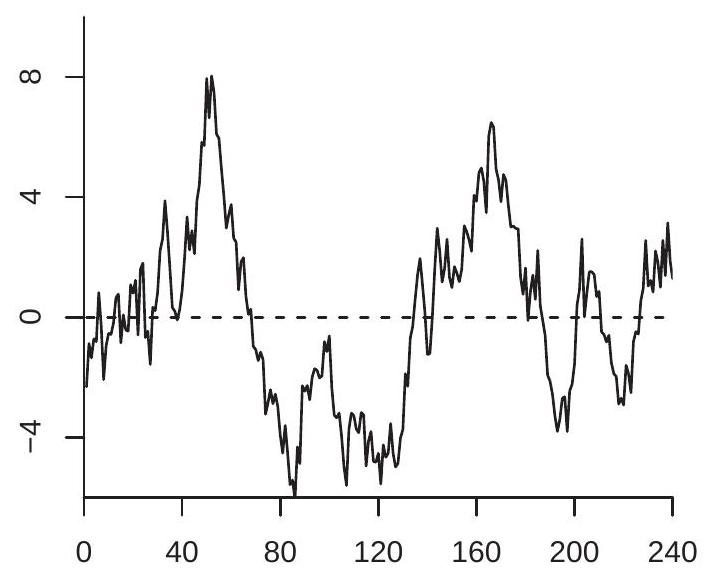

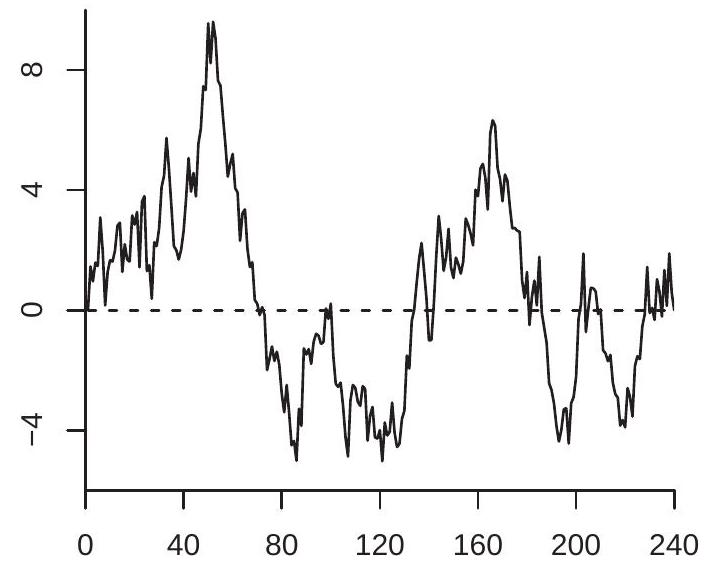

To illustrate, Figure \(16.2\) displays two detrended versions of the series from Figure 16.1. Panel (a) shows the linear detrended series \(Y_{t}^{*}\). Panel (b) shows the first-difference detrended series \(\widetilde{Y}_{t}\). They are visually similar to one another and to Figure \(16.1\) except that the strong linear trend has been removed.

16.8 Stochastic Integrals

The distribution of the least squares estimator in the regression model \(Y_{t}=X_{t}^{\prime} \beta+e_{t}\) requires the distribution of the sample moments \(n^{-1} \sum_{t=1}^{n-1} X_{t} e_{t+1}\). When \(X_{t}\) is non-stationary the limit distribution is non-standard and equals a stochastic integral.

- Linearly Detrended Series

- First Difference Detrended Series

Figure 16.2: Detrended Random Walk

It may help to recall the definition of the Riemann-Stieltijes integral. Over the region \([0,1]\) the integral of \(g(x)\) with respect to \(f(x)\) is

\[ \int_{0}^{1} g(x) d f(x)=\lim _{N \rightarrow \infty} \sum_{i=0}^{N-1} g\left(\frac{i}{N}\right)\left(f\left(\frac{i+1}{N}\right)-f\left(\frac{i}{N}\right)\right) . \]

A stochastic integral is the case where the function \(f\) is random and is defined as a probability limit.

Definition 16.4 The stochastic integral of vector-valued \(X(r)\) with respect to vector-valued \(Z(r)\) over \([0,1]\) is

\[ \int_{0}^{1} X d Z^{\prime}=\int_{0}^{1} X(r) d Z(r)^{\prime}=\underset{N \rightarrow \infty}{\operatorname{plim}} \sum_{i=0}^{N-1} X\left(\frac{i}{N}\right)\left(Z\left(\frac{i+1}{N}\right)-Z\left(\frac{i}{N}\right)\right)^{\prime} . \]

Now consider the following setting. Let \(\left(X_{t}, e_{t}\right)\) be vector-valued sequences where \(e_{t}\) is a MDS with finite covariance and \(X_{t}\) is non-stationary. Assume that for some scaling sequence \(D_{n}\) the scaled process \(X_{n}(r)=D_{n}^{-1} X_{\lfloor n r\rfloor}\) satisfies \(X_{n}(r) \underset{d}{\longrightarrow} X(r)\) for some deterministic or stochastic process \(X(r)\). Examples of \(X_{t}\) sequences include the partial sum process constructed from \(e_{t}\) or another shock, a detrended version of a partial sum process, or a deterministic trend proceses. We desire the asymptotic distribution of \(\sum_{t=1}^{n-1} X_{t} e_{t+1}^{\prime}\). Define the partial sum process for \(e_{t}\) as \(S_{n}(r)=n^{-1 / 2} \sum_{t=1}^{\lfloor n r\rfloor} e_{t}\). From Theorem 16.1, \(S_{n} \underset{d}{\longrightarrow}\) \(B\). We calculate that

\[ \frac{1}{\sqrt{n}} D_{n}^{-1} \sum_{t=0}^{n-1} X_{t} e_{t+1}^{\prime}=\sum_{t=0}^{n-1} X_{n}\left(\frac{t}{n}\right)\left(S_{n}\left(\frac{t+1}{n}\right)-S_{n}\left(\frac{t}{n}\right)\right)^{\prime}=\int_{0}^{1} X_{n} d S_{n}^{\prime} . \]

The equalities hold because \(S_{n}(r)\) and \(X_{n}(r)\) are step functions with jumps at \(r=t / n\). Since \(X_{n}(r)\) and \(S_{n}(r)\) converge to \(X(r)\) and \(B(r)\), by analogy we expect \(\int_{0}^{1} X_{n} d S_{n}\) to converge to \(\int_{0}^{1} X d B\). This is true, but rather tricky to show since the stochastic integral is not a continuous function of \(B(r)\). A general statement of the conditions has been provided by Kurtz and Protter (1991, Theorem 2.2). The following is a simplification of their result.

Theorem 16.6 If \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a martingale difference sequence, \(\mathbb{E}\left[e_{t} e_{t}^{\prime}\right]=\Sigma<\infty\), \(X_{t} \in \mathscr{F}_{t}\), and \(\left(X_{n}(r), S_{n}(r)\right) \underset{d}{\longrightarrow}(X(r), B(r))\) then

\[ \int_{0}^{1} X_{n} d S_{n}^{\prime}=\frac{1}{\sqrt{n}} D_{n}^{-1} \sum_{t=1}^{n-1} X_{t} e_{t+1} \underset{d}{\longrightarrow} \int_{0}^{1} X d B^{\prime} \]

where \(B(r)\) is a Brownian motion with covariance matrix \(\Sigma\).

The basic application of Theorem \(16.6\) is to the case \(X_{n}(r)=S_{n}(r)\). Thus if \(S_{t}=\sum_{i=1}^{t} e_{t}\) and \(e_{t}\) is a MDS with covariance matrix \(\Sigma\) then

\[ \frac{1}{n} \sum_{t=1}^{n-1} S_{t} e_{t+1}^{\prime} \underset{d}{\longrightarrow} \int_{0}^{1} B d B^{\prime} . \]

We can extend this result to the case of serially correlated errors.

Theorem 16.7 If \(Z_{t}\) satisfies the conditions of Theorem \(16.4\) and \(S_{t}=\sum_{i=1}^{t} Z_{t}\) then

\[ \frac{1}{n} \sum_{t=1}^{n-1} S_{t} Z_{t+1}^{\prime} \underset{d}{\longrightarrow} \int_{0}^{1} B d B^{\prime}+\Lambda \]

where \(B(r)\) is a Brownian motion with covariance matrix \(\Omega=\Sigma+\Lambda+\Lambda^{\prime}, \Sigma=\) \(\mathbb{E}\left[Z_{t} Z_{t}^{\prime}\right]\), and \(\Lambda=\sum_{j=1}^{\infty} \mathbb{E}\left[Z_{t-j} Z_{t}^{\prime}\right]\)

The proof is presented in Section \(16.22\).

16.9 Estimation of an \(\mathbf{A R}(1)\)

Consider least squares estimation of the AR(1) parameter \(\alpha\) in the model \(Y_{t}=\alpha Y_{t-1}+e_{t}\). The centered estimator is \(\widehat{\alpha}-\alpha=\left(\sum_{t=1}^{n-1} Y_{t}^{2}\right)^{-1}\left(\sum_{t=1}^{n-1} Y_{t} e_{t+1}\right)\). We use the scaling

\[ n(\widehat{\alpha}-\alpha)=\frac{\frac{1}{n} \sum_{t=1}^{n-1} Y_{t} e_{t+1}}{\frac{1}{n^{2}} \sum_{t=1}^{n-1} Y_{t}^{2}} . \]

We examine the denominator and numerator separately under the assumption \(\alpha=1\). Similarly to our analysis of the sample mean the denominator can be written as an integral. Thus

\[ \frac{1}{n^{2}} \sum_{t=1}^{n-1} Y_{t}^{2}=\frac{1}{n} \sum_{t=1}^{n-1}\left(\frac{1}{n^{1 / 2}} Y_{t}\right)^{2}=\int_{0}^{1} Z_{n}(r)^{2} d r \underset{d}{\longrightarrow} \int_{0}^{1} B(r)^{2} d r=\sigma^{2} \int_{0}^{1} W(r)^{2} d r \]

The convergence is by the continuous mapping theorem \({ }^{4}\). The final equality recognizes that if \(B(r)\) has variance \(\sigma^{2}\) then \(B(r)^{2}=\sigma^{2} W(r)^{2}\) where \(W(r)\) is standard Brownian motion. For conciseness we often write the final integral as \(\int_{0}^{1} W^{2}\).

For the numerator we appeal to Theorem 16.6.

\[ \frac{1}{n} \sum_{t=1}^{n-1} Y_{t} e_{t+1}=\int_{0}^{1} Z_{n} d S_{n} \underset{d}{\longrightarrow} \int_{0}^{1} B d B=\sigma^{2} \int_{0}^{1} W d W . \]

This limiting stochastic integral is quite famous. It is known as Itô’s integral.

Theorem 16.8 Itô’s Integral \(\int_{0}^{1} W d W=\frac{1}{2}\left(W(1)^{2}-1\right)\)

If you are not surprised by Itô’s integral take another look. The derivative of \(\frac{1}{2} W(r)^{2}\) is \(W(r) d W(r)\). Thus by standard calculus and \(W(0)=0\) you might expect \(\int_{0}^{1} W d W=\frac{1}{2} W(1)^{2}\). The presence of the extra term \(-1 / 2\) is surprising. This arises because \(W(r)\) has unbounded variation.

The random variable \(W(1)^{2}\) is \(\chi_{1}^{2}\) which has expectation 1 . Therefore the random variable \(\int_{0}^{1} W d W\) is mean zero but skewed.

The proof of Theorem \(16.8\) is presented in Section \(16.22\).

Returning to the least squares estimation problem we have shown that when \(\alpha=1\)

\[ n(\widehat{\alpha}-1) \underset{d}{\longrightarrow} \frac{\frac{\sigma^{2}}{2}\left(W(1)^{2}-1\right)}{\sigma^{2} \int_{0}^{1} W^{2}}=\frac{\int_{0}^{1} W d W}{\int_{0}^{1} W^{2}} . \]

Theorem 16.9 Dickey-Fuller Coefficient Distribution If \(Y_{t}=\alpha Y_{t-1}+e_{t}\) with \(\alpha=1\), and \(\left(e_{t}, \mathscr{F}_{t}\right)\) is a strictly stationary and ergodic martingale difference sequence with a finite variance, then

\[ n(\widehat{\alpha}-1) \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W d W}{\int_{0}^{1} W^{2}} . \]

The limit distribution in Theorem \(16.9\) is known as the Dickey-Fuller Distribution due to the work of Wayne Fuller and David Dickey. Theorem 16.9 shows that the least squares estimator is consistent for \(\alpha=1\) and converges at the “super-consistent” rate \(O_{p}\left(n^{-1}\right)\). The limit distribution is non-standard and is written as a function of the Brownian motion \(W(r)\). There is not a closed-form expression for the distribution or density of the statistic. Most commonly it is calculated by simulation.

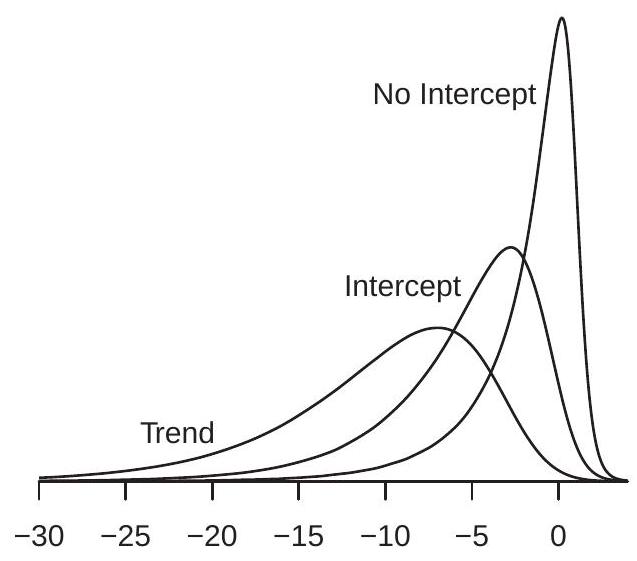

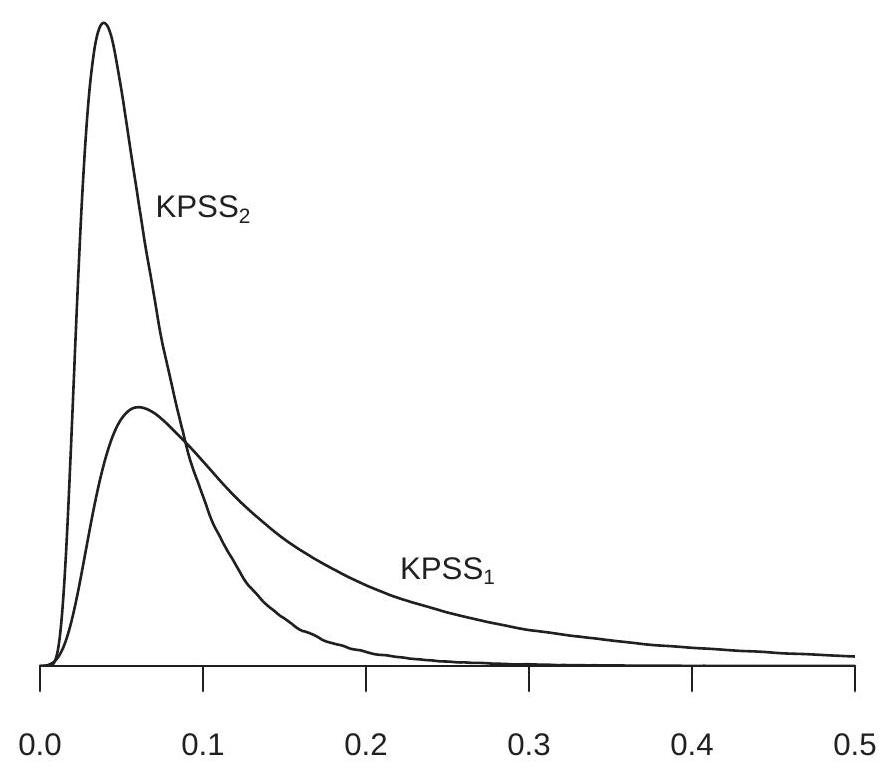

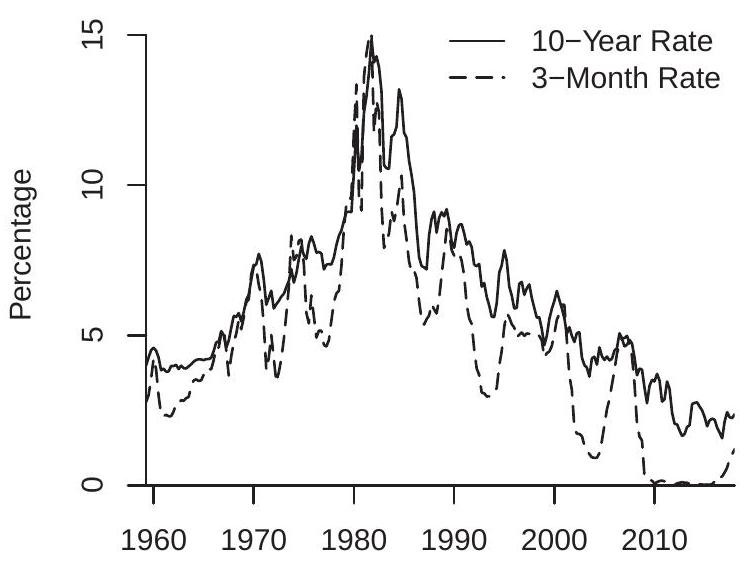

\({ }^{4}\) The function \(g(f)=\int_{0}^{1} f(x)^{2} d x\) is continuous with respect to the uniform metric. The density of the Dickey-Fuller coefficient distribution is displayed \({ }^{5}\) in Figure \(16.3\) (a) with the label “No Intercept”. You can see that the density is high skewed with a long left tail. You can see that most of the probability mass of the distribution is over the negative region. This has the implication that the density has a negative mean and median. Hence the asymptotic distribution of the least squares estimator is biased negatively. This has the practical implication that when \(\alpha=1\) the least squares estimator is biased away from one.

We can also examine the limit distribution of the t-ratio. Let \(\widehat{e}_{t}=Y_{t}-\widehat{\alpha} Y_{t-1}\) be the least squares residual, \(\widehat{\sigma}^{2}=n^{-1} \sum \widehat{e}_{t}^{2}\) the least squares variance estimator, and \(s(\widehat{\alpha})=\widehat{\sigma} / \sqrt{\sum Y_{t}^{2}}\) the classical standard error for \(\widehat{\alpha}\). The t-ratio for \(\alpha\) is \(T=(\widehat{\alpha}-1) / s(\widehat{\alpha})\).

Theorem 16.10 Dickey-Fuller T Distribution Under the assumptions of Theorem \(16.9\)

\[ T=\frac{\widehat{\alpha}-1}{s(\widehat{\alpha})} \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W d W}{\left(\int_{0}^{1} W^{2}\right)^{1 / 2}} . \]

The limit distribution in Theorem \(16.10\) is known as the Dickey-Fuller T distribution. Theorem \(16.10\) shows that the classical t-ratio converges to a non-standard asymptotic distribution. There is no closedform expression for the distribution or density so it is typically calculated using simulation techniques. The proof is presented in Section \(16.22\).

The density of the Dickey-Fuller T distribution is displayed in Figure 16.3(b) with the label “No Intercept”. You can see that the density is skewed but much less so than the coefficient distribution. The distribution appears to be a “fatter” version of the conventional student t distribution. An implication is that conventional inference (confidence intervals and tests) will be inaccurate. We discuss testing in Section \(16.13\).

16.10 AR(1) Estimation with an Intercept

Suppose that \(Y_{t}\) is a random walk and we estimate an AR(1) model with an intercept. The estimated model is \(Y_{t}=\mu+\alpha Y_{t-1}+e_{t}\). By the Frisch-Waugh-Lovell Theorem (Theorem 3.5) the least squares estimator \(\widehat{\alpha}\) of \(\alpha\) can be written as the simple regression using the demeaned series \(Y_{t}^{*}\). That is, the normalized estimator is

\[ n(\widehat{\alpha}-1)=\frac{\frac{1}{n} \sum_{t=1}^{n-1} Y_{t}^{*} e_{t+1}}{\frac{1}{n^{2}} \sum_{t=1}^{n-1} Y_{t}^{* 2}} \]

where \(Y_{t}^{*}=Y_{t}-\bar{Y}\) with \(\bar{Y}=\frac{1}{n} \sum_{t=1}^{n-1} Y_{t}\). By Theorems 16.5.1 and \(16.6\) the calculations from the previous section show that

\[ n(\widehat{\alpha}-1) \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W^{*} d W}{\int_{0}^{1} W^{* 2}} . \]

\({ }^{5}\) The densities in Figure \(16.3\) were estimated from one million simulation draws of the finite sample distribution for a sample size \(n=10,000\). The densities were estimated using nonparametric kernel methods (see Chapter 17 of Introduction to Econometrics).

- Dickey-Fuller Coefficient Density

- Dickey-Fuller T Density

Figure 16.3: Unit Root Distributions

This is similar to the distribution in Theorem 16.9. This is known as the Dickey-Fuller coefficient distribution for the case of an included intercept.

Similarly if we estimate an AR(1) model with an intercept and trend the estimated model is \(Y_{t}=\) \(\mu+\beta t+\alpha Y_{t-1}+e_{t}\). By the Frisch-Waugh-Lovell Theorem this is equivalent to regression on the detrended series \(Y_{t}^{* *}\). Applying Theorems \(16.5 .2\) and 16.6, we find

\[ n(\widehat{\alpha}-1) \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W^{* *} d W}{\int_{0}^{1} W^{* * 2}} . \]

This is known as the Dickey-Fuller coefficient distribution for the case of an included intercept and linear trend.

Similar results arise for the t-ratios. We summarize the results in the following theorem. Theorem 16.11 Under the assumptions of Theorem 16.9, for the case of an estimated AR(1) with an intercept

\[ \begin{gathered} n(\widehat{\alpha}-1) \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W^{*} d W}{\int_{0}^{1} W^{* 2}} \\ T \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W^{*} d W}{\left(\int_{0}^{1} W^{* 2}\right)^{1 / 2}} . \end{gathered} \]

For the case of an estimated AR(1) with an intercept and linear time trend

\[ \begin{gathered} n(\widehat{\alpha}-1) \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W^{* *} d W}{\int_{0}^{1} W^{* * 2}} \\ T \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W^{* *} d W}{\left(\int_{0}^{1} W^{* * 2}\right)^{1 / 2}} . \end{gathered} \]

The densities of the Dickey-Fuller coefficient distributions are displayed in Figure 16.3(a), labeled as “Intercept” for the case with an included intercept, and “Trend” for the case with an intercept and linear time trend. The densities are considerably affected by the inclusion of the intercept or intercept and trend. The effect is twofold: (1) the distributions shift substantially to the left; and (2) the distributions substantially widen. Examining the “trend” version we can see that there is very little probability mass above zero. This means that the asymptotic distribution is not only biased downward, the realization is nearly always negative. This has the practical implication that the least squares estimator is almost certainly less than the true coefficient value. This is a strong form of bias.

The densities of the Dickey-Fuller T distributions are displayed in Figure 16.3(b). The effect of detrending on the \(\mathrm{T}\) distributions is quite different from the effect on the coefficient distirbutions. Here we see that the primary effect is a location shift with only a mild impact on dispersion. The strong location shift is a bias in the asymptotic \(\mathrm{T}\) distribution, implying that conventional inferences will be incorrect.

16.11 Sample Covariances of Integrated and Stationary Processes

Let \(\left(X_{t}, u_{t}\right)\) be a sequence where \(X_{t}\) is non-stationary and \(u_{t}\) is mean zero and strictly stationary. Assume that for some scaling sequence \(D_{n}\) the scaled process \(X_{n}(r)=D_{n}^{-1} X_{\lfloor n r\rfloor}\) satisfies \(X_{n}(r) \underset{d}{\longrightarrow} X(r)\) where \(X(r)\) is continuous with probability one. Consider the scaled sample covariance

\[ C_{n}=\frac{1}{n} D_{n}^{-1} \sum_{t=1}^{n} X_{t} u_{t} . \]

Theorem 16.12 Assume that \(X_{n}(r)=D_{n}^{-1} X_{\lfloor n r\rfloor} \underset{d}{\longrightarrow} X(r)\) where \(X(r)\) is almost surely continuous. Assume \(u_{t}\) is mean zero, strictly stationary, ergodic, and \(\mathbb{E}\left|u_{t}\right|<\infty\). Then \(C_{n} \underset{p}{\longrightarrow} 0\) as \(n \rightarrow \infty\). The proof is presented in Section \(16.22\).

16.12 AR(p) Models with a Unit Root

Assume that \(Y_{t}\) satisfies \(a(\mathrm{~L}) \Delta Y_{t}=e_{t}\) where \(a(z)\) is a \(p-1\) order invertible lag polynomial and \(e_{t}\) is a stationary MDS with finite variance \(\sigma^{2}\). Then \(Y_{t}\) can be written as the AR(p) process

\[ Y_{t}=a_{1} Y_{t-1}+\cdots+a_{p} Y_{t-p}+e_{t} \]

where the coefficients satisfy \(a_{1}+\cdots+a_{p}=1\). Let \(\widehat{a}\) be the least squares estimator of \(a=\left(a_{1}, \ldots, a_{p}\right)\). We now describe its sampling distribution.

Let \(B\) be the \(p \times p\) matrix which transforms \(\left(Y_{t-1}, \ldots, Y_{t-p}\right)\) to \(\left(Y_{t-1}, \Delta Y_{t-1}, \ldots, \Delta Y_{t-p+1}\right)\), for example when \(p=3\) then \(B=\left[\begin{array}{ccc}1 & 0 & 0 \\ 1 & -1 & 0 \\ 0 & 1 & -1\end{array}\right]\). Make the partition \(B^{-1 \prime} a=(\rho, \beta)\) where \(\rho \in \mathbb{R}\) and \(\beta \in \mathbb{R}^{p-1}\). Then the \(\mathrm{AR}(\mathrm{p})\) model can be written as

\[ Y_{t}=\rho Y_{t-1}+\beta^{\prime} X_{t-1}+e_{t} \]

where \(X_{t-1}=\left(\Delta Y_{t-1}, \ldots, \Delta Y_{t-p+1}\right)\). The leading coefficient is \(\rho=a_{1}+\cdots+a_{p}=1\). This transformation separates the regressors into the unit root component \(Y_{t-1}\) and the stationary component \(X_{t-1}\).

Consider the least squares estimators \((\widehat{\rho}, \widehat{\beta})\). They can be written under the assumption of a unit root as

\[ \left(\begin{array}{c} n(\widehat{\rho}-1) \\ \sqrt{n}(\widehat{\beta}-\beta) \end{array}\right)=\left(\begin{array}{cc} \frac{1}{n^{2}} \sum_{t=1+p}^{n} Y_{t-1}^{2} & \frac{1}{n^{3 / 2}} \sum_{t=1+p}^{n} Y_{t-1} X_{t-1}^{\prime} \\ \frac{1}{n^{3 / 2}} \sum_{t=1+p}^{n} X_{t-1} Y_{t-1} & \frac{1}{n} \sum_{t=1+p}^{n} X_{t-1} X_{t-1}^{\prime} \end{array}\right)^{-1}\left(\begin{array}{c} \frac{1}{n} \sum_{t=1+p}^{n} Y_{t-1} e_{t} \\ \frac{1}{\sqrt{n}} \sum_{t=1+p}^{n} X_{t-1} e_{t} \end{array}\right) . \]

Theorems \(16.4\) and the CMT show that

\[ \frac{1}{n^{2}} \sum_{t=1+p}^{n} Y_{t-1}^{2} \underset{d}{\rightarrow} \omega^{2} \int_{0}^{1} W^{2} \]

where \(\omega^{2}\) is the long-run variance of \(\Delta Y_{t}\) which equals \(\omega^{2}=\sigma^{2} / a(1)^{2}>0\).

Theorem \(16.12\) shows that

\[ \frac{1}{n^{3 / 2}} \sum_{t=1+p}^{n} X_{t-1} Y_{t-1} \underset{p}{\longrightarrow} 0 . \]

Theorems \(16.4\) and \(16.6\) show that

\[ \frac{1}{n} \sum_{t=1+p}^{n} Y_{t-1} e_{t} \underset{d}{\longrightarrow} \omega \sigma \int_{0}^{1} W d W . \]

The WLLN and the CLT for stationary processes show that

\[ \begin{aligned} &\frac{1}{n} \sum_{t=1+p}^{n} X_{t-1} X_{t-1}^{\prime} \rightarrow \boldsymbol{Q} \\ &\frac{1}{\sqrt{n}} \sum_{t=1+p}^{n} X_{t-1} e_{t} \underset{d}{\longrightarrow} \mathrm{N}(0, \Omega) \end{aligned} \]

where \(\boldsymbol{Q}=\mathbb{E}\left[X_{t-1} X_{t-1}^{\prime}\right]\) and \(\Omega=\mathbb{E}\left[X_{t-1} X_{t-1}^{\prime} e_{t}^{2}\right]\). Together we have established the following. Theorem 16.13 Assume that \(Y_{t}\) satisfies \(a(\mathrm{~L}) \Delta Y_{t}=e_{t}\) where \(a(z)\) is a \(p-1\) order invertible lag polynomial and \(\left(e_{t}, \Im_{t}\right)\) is a stationary MDS with finite variance \(\sigma^{2}\). Then

\[ \left(\begin{array}{c} n(\widehat{\rho}-1) \\ \sqrt{n}(\widehat{\beta}-\beta) \end{array}\right) \rightarrow\left(\begin{array}{c} a(1) \frac{\int_{0}^{1} W d W}{\int_{0}^{1} W^{2}} \\ \mathrm{~N}(0, V) \end{array}\right) \]

where \(V=\boldsymbol{Q}^{-1} \Omega \boldsymbol{Q}^{-1}\).

This theorem provides an asymptotic distribution theory for the least squares estimators. The estimator \((\widehat{a}, \widehat{\beta})\) is consistent, the coefficient \(\widehat{\beta}\) on the stationary variables is asymptotically normal, and the coefficient \(\widehat{a}\) on the unit root component has a scaled Dickey-Fuller distribution.

The estimator of the representation (16.6) is the linear transformation \(B^{\prime}\left(\widehat{\rho}, \widehat{\beta}^{\prime}\right)^{\prime}\), and therefore its asymptotic distribution is the transformation \(B^{\prime}\) of (16.8). Since the unit root component converges at a faster \(O_{p}\left(n^{-1}\right)\) rate than the stationary component it drops out of the asymptotic distribution. We obtain

\[ \sqrt{n}(\widehat{a}-a) \underset{d}{\longrightarrow} \mathrm{N}\left(0, \boldsymbol{G} V \boldsymbol{G}^{\prime}\right) \]

where, in the \(p=3\) case

\[ \boldsymbol{G}=\left[\begin{array}{cc} 1 & 0 \\ -1 & 1 \\ 0 & -1 \end{array}\right] \text {. } \]

The asymptotic covariance matrix \(\boldsymbol{G} V \boldsymbol{G}^{\prime}\) is deficient with rank \(p-1\). Hence this is only a partial characterization of the asymptotic distribution; equation (16.8) is a complete first-order characterization. The implication of (16.9) is that individual coefficient estimators and standard errors of (16.6) have conventional asymptotic interpretations. This extends to conventional hypothesis tests which do not include the sum of the coefficients. For most purposes (except testing the unit root hypothesis) this means that asymptotic inference on the coefficients of (16.6) can be based on the conventional normal approximation and can ignore the possible presence of unit roots.

16.13 Testing for a Unit Root

The asymptotic properties of the time series process change discontinuously at the unit root \(\rho=\) \(a_{1}+\cdots+a_{p}=1\). It is therefore of standard interest to test the hypothesis of a unit root. We typically express this as the test of \(\mathbb{H}_{0}: \rho=1\) against \(\mathbb{H}_{1}: \rho<1\). We typically view the test as one-sided as we are interested in the alternative hypothesis that the series is stationary (not that it is explosive).

The test for \(\mathbb{H}_{0} \mathrm{vs} . \mathbb{H}_{1}\) is the t-statistic for \(a_{1}+\cdots+a_{p}=1\) in the AR(p) model (16.6). This is identical to the t-statistic for \(\rho=1\) in reparameterized form (16.7). Since the latter is a simple t-ratio this is the most convenient implementation. It is typically called the Augmented Dickey-Fuller statistic. It equals

\[ \mathrm{ADF}=\frac{\widehat{\rho}-1}{s(\widehat{\rho})} \]

where \(s(\widehat{\rho})\) is a standard error for \(\widehat{\rho}\). This t-ratio is typically calculated using a classical (homoskedastic) standard error, perhaps for historical reasons, and perhaps because the asymptotic distribution of ADF is invariant to conditional heteroskedasticity. The statistic is called the ADF statistic when the estimated model is an \(\operatorname{AR}(\mathrm{p})\) model with \(p>1\); it is typically called the Dickey-Fuller statistic if the estimated model is an \(\operatorname{AR}(1)\).

The asymptotic distribution of ADF depends on the fitted deterministic components. The test statistic is most typically calculated in a model with a fitted intercept or a fitted intercept and time trend, though the theory is also presented for the case with no fitted intercept, and extends to any polynomial order trend.

Theorem 16.14 Assume that \(Y_{t}\) satisfies \(a(\mathrm{~L}) \Delta Y_{t}=e_{t}\) where \(a(z)\) is a \(p-1\) order invertible lag polynomial and \(\left(e_{t}, \Im_{t}\right)\) is a stationary MDS with finite variance \(\sigma^{2}\). Then

\[ \mathrm{ADF} \underset{d}{\longrightarrow} \frac{\int_{0}^{1} U d W}{\left(\int_{0}^{1} U^{2}\right)^{1 / 2}} \stackrel{\text { def }}{=} \xi \]

where \(W\) is Brownian motion. The process \(U\) depends on the fitted deterministic components:

- Case 1: No intercept or trend. \(U(r)=W(r)\).

- Case 2: Fitted intercept (demeaned data). \(U(r)=W(r)-r \int_{0}^{1} W\).

- Case 3: Fitted intercept and trend (detrended data). \(U(r)=W(r)-\) \(X(r)^{\prime}\left(\int_{0}^{1} X X^{\prime}\right)^{-1}\left(\int_{0}^{1} X W\right)\) where \(X(r)=(1, r)^{\prime}\).

Let \(Z_{\alpha}\) satisfy \(\mathbb{P}\left[\xi \leq Z_{\alpha}\right]=\alpha\). The test “Reject \(\mathbb{H}_{0}\) if \(\mathrm{ADF}<Z_{\alpha}\)” has asymptotic size \(\alpha\)

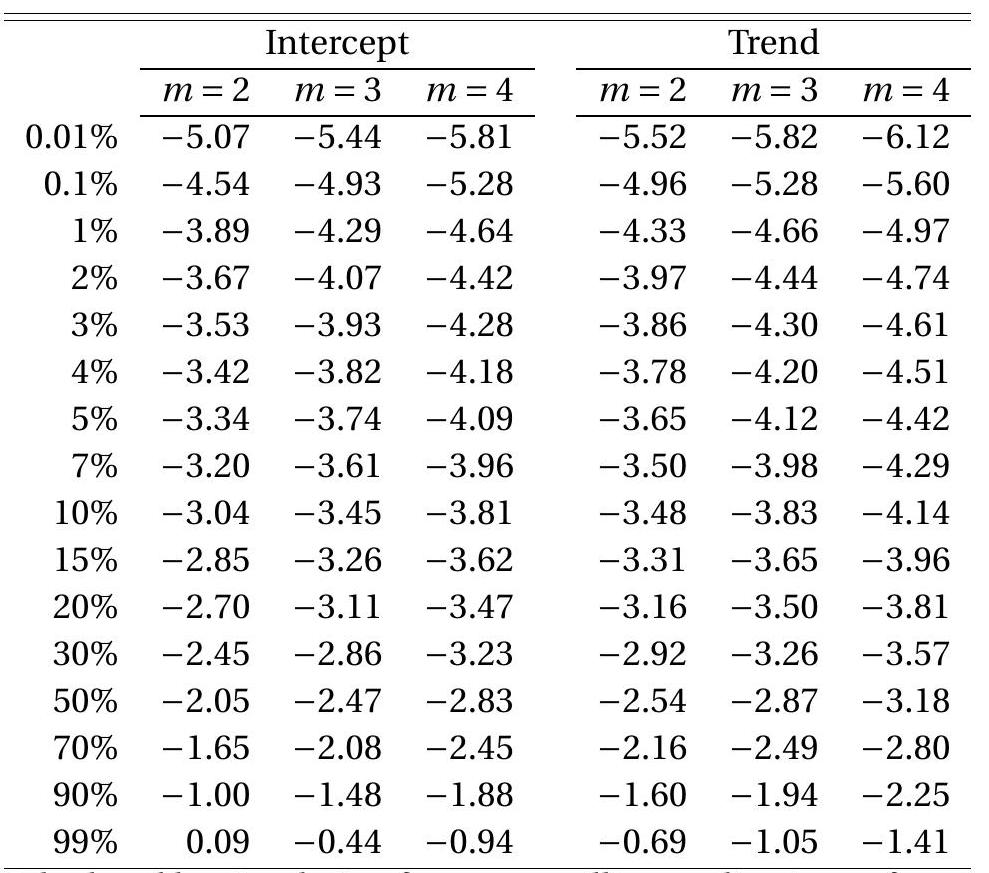

Asymptotic critical values are displayed in the first three columns of Table 16.1. The ADF is a onesided hypothesis test so rejections occur when the test statistic is less than (more negative than) the critical value. For example, the \(5 \%\) critical value for the case of a fitted intercept is \(-2.86\). This means that if the ADF t-ratio is more negative than \(-2.86\) (for example \(\mathrm{ADF}=-3.0\) ) then the test rejects the hypothesis of no unit root. But if the ADF t-ratio is greater than \(-2.86\) (for example ADF \(=-2.0\) ) then the the test does not reject the hypothesis of a unit root.

In most applications an ADF test is implemented with at least a fitted intercept (the second column in the table). Many are implemented with a fitted linear time trend (which is the third column). The choice depends on the nature of the alternative hypothesis. If \(\mathbb{H}_{1}\) is that the series is stationary about a constant mean then the case of a fitted intercept is appropriate. Example series for this context are unemployment and interest rates. If \(\mathbb{M}_{1}\) is that the series is stationary about a linear trend then the case of a fitted trend is appropriate. Examples for this context are levels or log-levels of macroeconomic aggregates.

The ADF test depends on the autoregressive order \(p\). The issue of selection of \(p\) is similar to that of autoregressive model selection. In general, if \(p\) is too small than the model is misspecified and the ADF statistic has an asymptotic bias. If \(p\) is too large than the test coefficient \(\widehat{\rho}\) is imprecisely estimated, reducing the power of the test. Since \(\widehat{\rho}\) is the sum of the \(p\) estimated AR coefficients in the levels model the imprecision can be sensitive to the choice of \(p\). A reasonable selection rule is to use the AIC-selected AR model. Improved rules have been studied by Ng and Perron (2001).

We have argued that it is better to report asymptotic p-values rather than “accept/reject”. For this calculation we need the asymptotic distribution function but this is not available in closed form. A simple approximation is interpolation of the critical values. For example, suppose ADF \(=-3.0\) with a fitted intercept. The two closest critical values are the \(10 \%(-3.13)\) and \(15 \%(-2.94)\). Linear interpolation between these values yields

\[ p=\frac{0.10 \times(3.0-2.94)+0.15 \times(3.13-3.0)}{3.13-2.94}=0.13 \text {. } \]

Thus the asymptotic p-value is approximately \(13 \%\). Reporting a p-value instead of the “decision” of a test improves interpretation and communication.

How should unit root tests be used in empirical practice? The answer is subtle. A common mistake is “We use a unit root test to discover whether or not the series has a unit root.” This is a mistake because a test does not reveal the truth. Rather, it presents evidence whether or not \(\mathbb{M}_{0}\) can be rejected. If the test fails to reject \(\mathbb{H}_{0}\) this does not mean that “We have found a unit root”. Rather, the correct conclusion is “We cannot reject the hypothesis that it has a unit root”. Thus we do not know. If the test rejects \(\mathbb{H}_{0}\) (if the p-value is very small) then we can conclude that the series is unlikely to be a unit root process; its behavior is more consistent with a stationary process. Another common mistake is to adopt the rule: “If the ADF test rejects then we work with \(Y_{t}\) in levels; if the ADF test does not reject then we work with the differenced series \(\Delta Y_{t}\).” This is a mistake because it assigns a modeling rule to the result of a statistical test while the test is only designed to answer the question if there is evidence against the hypothesis of a unit root. The choice of \(Y_{t}\) versus \(\Delta Y_{t}\) is a model selection choice not a hypothesis testing decision.

I believe a reasonable approach is to start with a hypothesis based on theory and context. Does economic theory lead you to treat a series as stationary or non-stationary? Is there a reason to believe that a series should be stationary - thus stable in the mean - or is there reason to believe the series will exhibit growth and change? If you have a clear answer to these questions, that should be your starting place, your default. Use the unit root test to help confirm your assumptions rather than to select a modeling approach. If your assumption is that \(Y_{t}\) has a unit root but the unit root test strongly rejects, then you should re-appraise your theory. On the other hand if your assumption is that \(Y_{t}\) is stationary but the unit root test fails to reject the null of a unit root, do not necessarily depart from your theoretical base. Consider the degree of evidence, the sample size, as well as the point estimates. Use all information together to base your decision.

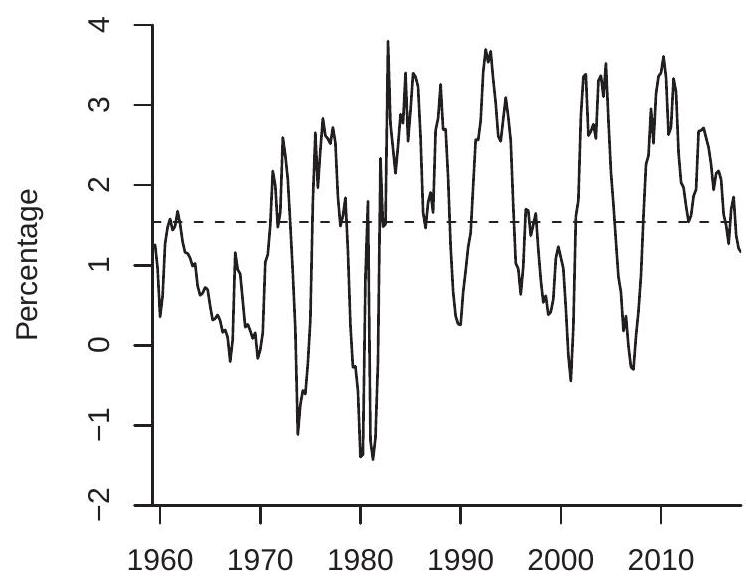

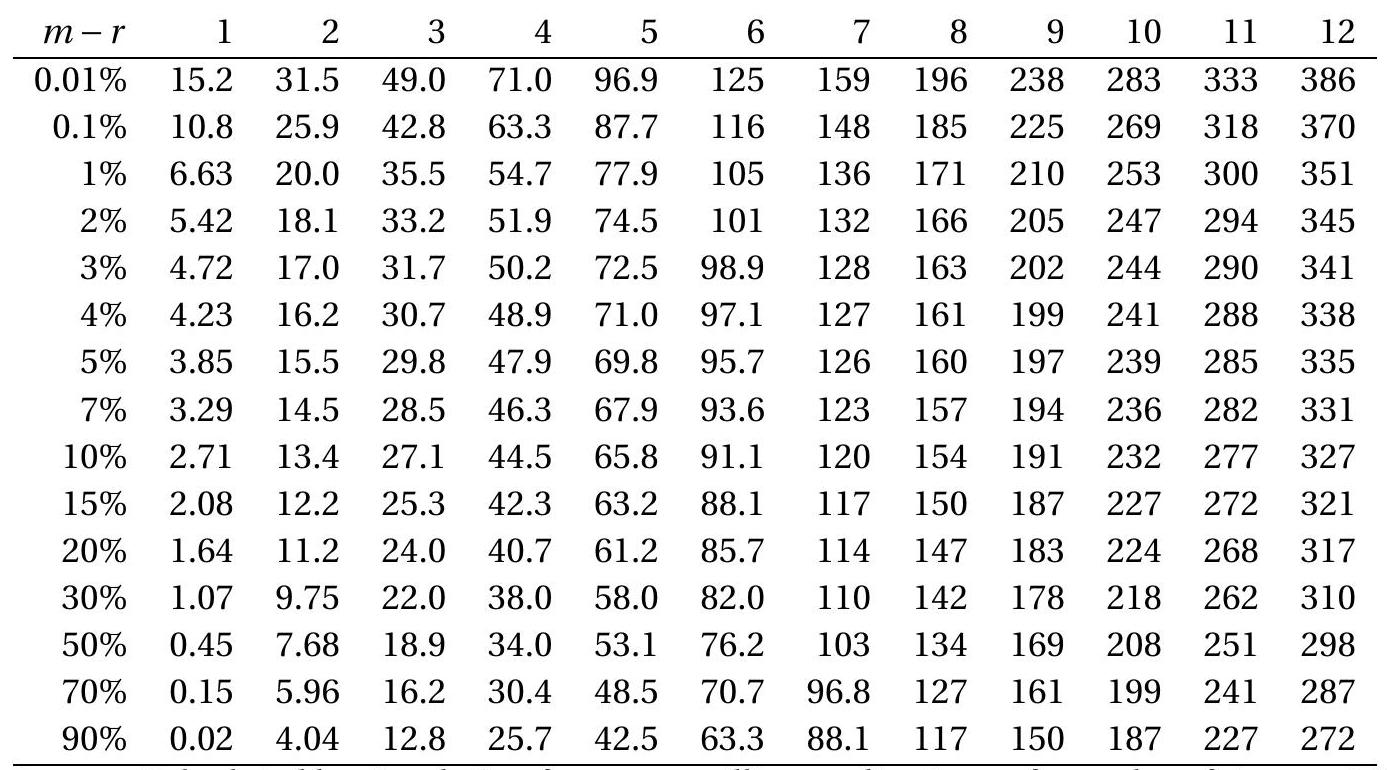

To illustrate application of the ADF test let’s take the eight series displayed in Figures 14.1-14.2 using the variables measured in levels or log-levels. The variables and transformations are listed in Table 16.2. For six of the eight series (all but the interest and unemployment rates) we took the log transformation. We included an intercept and linear time trend in each regression and selected the autoregressive order by minimizing the AIC across AR(p) models with a linear time trend. For the quarterly series we examined AR(p) models up to \(p=8\), for the monthly series up to \(p=12\). The selected values of \(p\) are shown in the table. The point estimate \(\widehat{\rho}-1\), its standard error, the ADF t statistic, and its asymptotic p-value are shown. What we see is for for seven of the eight series (all but the unemployment rate) the p-values are far from the critical region indicating failure to reject the null hypothesis of a unit root. The p-value for the unemployment rate is \(0.01\), however, indicating rejection of a unit root. Overall, the results are consistent with the hypotheses that the unemployment rate is stationary and that the other seven variables are possibly (but not decisively) unit root processes.

The ADF test came into popularity in economics with a seminar paper by Nelson and Plosser (1982). These authors applied the ADF to a set of standard macroeconomic variables (similar to those in Table 16.2) and found that the unit root hypothesis could not be rejected in most series. This empirical finding had a substantial effect on applied economic time series. Before this paper the conventional wisdom was that economic series were stationary (possibly about linear time trends). After their work it became more accepted to assume that economic time series are better described as autoregressive unit root processes. Nelson and Plosser (1982) used this empirical finding to make a further and stronger claim. They argued that Keynesian macroeconomic models (which were standard at the time) imply that economic Table 16.1: Unit Root Testing Critical Values

| ADF | KPSS | |||||

|---|---|---|---|---|---|---|

| No Intercept | Intercept | Trend | Intercept | Trend | ||

| \(0.01 \%\) | \(-3.92\) | \(-4.69\) | \(-5.21\) | \(1.598\) | \(0.430\) | |

| \(0.1 \%\) | \(-3.28\) | \(-4.08\) | \(-4.58\) | \(1.176\) | \(0.324\) | |

| \(1 \%\) | \(-2.56\) | \(-3.43\) | \(-3.95\) | \(0.744\) | \(0.218\) | |

| \(2 \%\) | \(-2.31\) | \(-3.20\) | \(-3.73\) | \(0.621\) | \(0.187\) | |

| \(3 \%\) | \(-2.15\) | \(-3.06\) | \(-3.60\) | \(0.550\) | \(0.169\) | |

| \(4 \%\) | \(-2.03\) | \(-2.95\) | \(-3.50\) | \(0.500\) | \(0.157\) | |

| \(5 \%\) | \(-1.94\) | \(-2.86\) | \(-3.41\) | \(0.462\) | \(0.148\) | |

| \(7 \%\) | \(-1.79\) | \(-2.72\) | \(-3.28\) | \(0.406\) | \(0.134\) | |

| \(10 \%\) | \(-1.62\) | \(-2.57\) | \(-3.13\) | \(0.348\) | \(0.119\) | |

| \(15 \%\) | \(-1.40\) | \(-2.37\) | \(-2.94\) | \(0.284\) | \(0.103\) | |

| \(20 \%\) | \(-1.23\) | \(-2.22\) | \(-2.79\) | \(0.241\) | \(0.091\) | |

| \(30 \%\) | \(-0.96\) | \(-1.97\) | \(-2.56\) | \(0.185\) | \(0.076\) | |

| \(50 \%\) | \(-0.50\) | \(-1.57\) | \(-2.18\) | \(0.119\) | \(0.056\) | |

| \(70 \%\) | \(0.05\) | \(-1.15\) | \(-1.81\) | \(0.079\) | \(0.041\) | |

| \(90 \%\) | \(0.89\) | \(-0.44\) | \(-1.24\) | \(0.046\) | \(0.028\) | |

| \(99 \%\) | \(2.02\) | \(0.60\) | \(-0.32\) | \(0.025\) | \(0.017\) |

Source: Calculated by simulation from one million replications of samples of size \(n=10,000\).

Table 16.2: Unit Root and KPSS Test Applications

| \(p\) | \(\widehat{\rho}-1\) | ADF | p-value | \(M\) | \(\mathrm{KPSS}_{2}\) | \(\mathrm{p}\)-value | |

|---|---|---|---|---|---|---|---|

| \(\log (\) real GDP) | 3 | \(-0.017\) \((.009)\) | \(-1.8\) | \(0.71\) | 18 | \(0.23\) | \(0.01\) |

| \(\log (\) real consumption) | 4 | \(-0.029\) \((.012)\) | \(-2.4\) | \(0.37\) | 18 | \(0.113\) | \(0.12\) |

| \(\log\) (exchange rate) | 11 | \(-0.009\) \((.004)\) | \(-2.2\) | \(0.49\) | 26 | \(0.31\) | \(<.01\) |

| interest rate | 12 | \(-0.005\) \((.004)\) | \(-1.5\) | \(0.52\) | 26 | \(0.56\) | \(<.01\) |

| log(oil price) | 2 | \(-0.013\) \((.005)\) | \(-2.4\) | \(0.35\) | 26 | \(0.23\) | \(<.01\) |

| unemployment rate | 7 | \(-0.014\) \((.004)\) | \(-3.4\) | \(0.01\) | 26 | \(0.14\) | \(0.06\) |

| log(CPI) | 11 | \(-0.001\) \((.001)\) | \(-1.0\) | \(0.95\) | 26 | \(0.55\) | \(<.01\) |

| \(\log\) (stock price) | 6 | \(-0.010\) \((.004)\) | \(-2.2\) | \(0.47\) | 26 | \(0.30\) | \(<.01\) |

time series are stationary while real business cycle (RBC) models (which were new at the time) imply that economic time series are unit root processes. Nelson-Plosser argued that the empirical finding that the unit root tests do not reject was strong support for the RBC research program. Their argument was influential and was a factor motivating the rise of the RBC literature. With hindsight we can see that Nelson and Plosser (1982) made a fundamental error in this latter argument. The unit root behavior in RBC models is not inherent to their structure; rather it is a by-product of the assumptions on the technology process. (If exogenous technology is a unit root process or a stationary process then macroeconomic variables will also be unit root processes or stationary processes, respectively.) Similarly the stationary behavior of 1970s Keynesian models was not inherent to their structure but rather a by-product of assumptions about unobservables. Fundamentally, the unit root/stationary distinction says little about the RBC/Keynesian debate.

The ADF test with a fitted intercept can be implemented in Stata by the command dfuller y, lags (q) regress. For a fitted intercept and trend add the option trend. The number of lags ” \(q\) ” in the command is the number of first differences in (16.7), hence \(q=p-1\) where \(p\) is the autoregressive order. The dfuller command reports the estimated regression, the ADF statistic, asymptotic critical values, and approximate asymptotic p-value.

16.14 KPSS Stationarity Test

Kwiatkowski, Phillips, Schmidt, and Shin (1992) developed a test of the null hypothesis of stationarity against the alternative of a unit root which has become known as the KPSS test. Many users find this idea attractive as a counterpoint to the ADF test.

The test is derived from what is known as a local level model. This is

\[ \begin{aligned} &Y_{t}=\mu+\theta S_{t}+e_{t} \\ &S_{t}=S_{t-1}+u_{t} \end{aligned} \]

where \(e_{t}\) is a mean zero stationary process and \(u_{t}\) is i.i.d. \(\left(0, \sigma_{u}^{2}\right)\). When \(\sigma_{u}^{2}=0\) then \(Y_{t}\) is stationary. When \(\sigma_{u}^{2}>0\) then \(Y_{t}\) is a unit root process. Thus a test of the null of stationarity against the alternative of a unit root is a test of \(\mathbb{H}_{0}: \sigma_{u}^{2}=0\) against \(\mathbb{M}_{1}: \sigma_{u}^{2}>0\). Add the auxillary assumption that \(\left(e_{t}, u_{t}\right)\) are i.i.d normal. The Lagrange multiplier test can be shown to reject \(\mathbb{H}_{0}\) in favor of \(\mathbb{H}_{1}\) for large values of

\[ \frac{1}{n^{2} \widehat{\sigma}^{2}} \sum_{i=1}^{n}\left(\sum_{t=1}^{i} \widehat{e}_{t}\right)^{2} \]

where \(\widehat{e}_{t}=Y_{t}-\bar{Y}\) are the residuals under the null and \(\widehat{\sigma}^{2}\) is its sample variance. To generalize to the context of serially correlated \(e_{t}\) KPSS proposed the statistic

\[ \mathrm{KPSS}_{1}=\frac{1}{n^{2} \widehat{\omega}^{2}} \sum_{i=1}^{n}\left(\sum_{t=1}^{i} \widehat{e}_{t}\right)^{2} \]

where

\[ \widehat{\omega}^{2}=\sum_{\ell=-M}^{M}\left(1-\frac{|\ell|}{M+1}\right) \frac{1}{n} \sum_{t=1}^{n} \widehat{e}_{t} \widehat{e}_{t-\ell} \]

is the Newey-West estimator of the long-run variance \(\omega^{2}\) of \(Y_{t}\).

For contexts allowing for a linear time trend the local level model takes the form

\[ Y_{t}=\mu+\beta t+\theta S_{t}+e_{t} \]

which has null least squares estimator

\[ Y_{t}=\widetilde{\mu}+\widetilde{\beta} t+\widetilde{e}_{t} . \]

Notice that \(\widetilde{e}_{t}\) is linearly detrended \(Y_{t}\). The KPSS test for \(\mathbb{H}_{0}\) against \(\mathbb{H}_{1}\) rejects for large values of

\[ \mathrm{KPSS}_{2}=\frac{1}{n^{2} \widetilde{\omega}^{2}} \sum_{i=1}^{n}\left(\sum_{t=1}^{i} \widetilde{e}_{t}\right)^{2} \]

where \(\widetilde{\omega}^{2}\) is defined as \(\widehat{\omega}^{2}\) but with the detrended residuals \(\widetilde{e}_{t}\).

Theorem 16.15 If \(Y_{t}\) follows Assumption \(16.1\) then

\[ \operatorname{KPSS}_{1} \underset{d}{\longrightarrow} \int_{0}^{1} V^{2} \]

and

\[ \operatorname{KPSS}_{2} \underset{d}{\longrightarrow} \int_{0}^{1} V_{2}^{2} \]

where \(V(r)=W(r)-r W(1)\) is a Brownian bridge, and \(V_{2}(r)=W(r)-\) \(\left(\int_{0}^{r} X(s) d s\right)^{\prime}\left(\int_{0}^{1} X X^{\prime}\right)^{\prime} \int_{0}^{1} X d W\) with \(X(s)=(1, s)^{\prime}\).

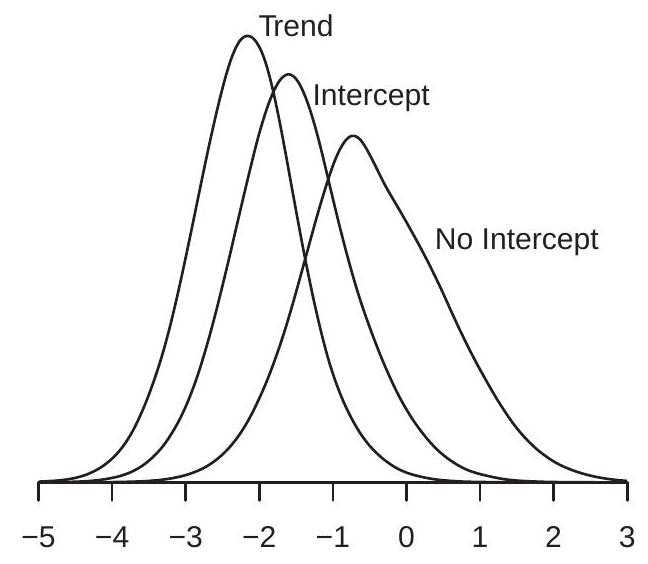

The asymptotic distributions in Theorem \(16.15\) are non-standard and are typically calculated by simulation. The process \(V_{2}(r)\) is known as a Second-level Brownian Bridge. The asymptotic distributions are displayed \({ }^{6}\) in Figure 16.4. The densities are skewed with a slowly-decaying right tail. The \(\mathrm{KPSS}_{2}\) distribution is substantially shifted towards the origin compared to the \(\mathrm{KPSS}_{1}\) distribution, indicating a substantial effect of detrending.

Asymptotic critical values are displayed in the final two columns of Table 16.1. Rejections occur when the test statistic exceeds the critical value. For example, for a regression with fitted intercept and time trend, suppose that the statistic equals \(\mathrm{KPSS}_{2}=0.163\). This exceeds the \(4 \%\) critical value \(0.157\) but not the \(3 \%\) critical value \(0.169\). Thus the test rejects at the \(4 \%\) but not the \(3 \%\) level. An interpolated p-value is \(3.5 \%\). This would be moderate evidence against the hypothesis of stationarity in favor of the alternative hypothesis of nonstationarity.

The KPSS statistic depends on the lag order \(M\) used to estimate the long-run variance \(\omega^{2}\). This is a challenge for test implementation. If \(Y_{t}\) is stationary but highly persistent (for example, an AR(1) with a large autoregressive coefficient) then the lag truncation \(M\) needs to be large in order to accurately estimate \(\omega^{2}\). However, under the alternative that \(Y_{t}\) is a unit root process, the estimator \(\widehat{\omega}^{2}\) will increase roughly linearly with \(M\) so that for any given sample the KPSS statistic can be made arbitrarily small by selecting \(M\) sufficiently large.

Recall that the Andrews (1991) reference rule (14.51) is

\[ M=\left(6 \frac{\rho^{2}}{\left(1-\rho^{2}\right)^{2}}\right)^{1 / 3} n^{1 / 3} \]

where \(\rho\) is the first autocorrelation of \(Y_{t}\). For the KPSS test we should not replace \(\rho\) with an estimator \(\widehat{\rho}\) as the latter converges to 1 under \(\mathbb{H}_{0}\), leading to \(M \rightarrow \infty\) rendering the test inconsistent. Instead we can

\({ }^{6}\) Calculated by simulation from one million simulation draws of samples of size \(n=10,000\).

Figure 16.4: Density of KPSS Distribution

use a default rule based on a reasonable alternative. Suppose we consider the alternative \(\rho=0.8\). The associated Andrews’ reference rule is \(M=3.1 n^{1 / 3}\). This leads to a simple rule \(M=3 n^{1 / 3}\). An interpretation of this choice is that it should approximately control the size of the test when the truth is an AR(1) with coefficient \(0.8\) but over-reject for more persistent AR processes.

To illustrate, Table \(16.2\) reports the \(\mathrm{KPSS}_{2}\) statistic for the same eight series as examined in the previous section, using \(M=3 n^{1 / 3}\). For the first two quarterly series \(n=228\) leading to \(M=18\). For the six monthly series \(n=684\) leading to \(M=26\). For six of the eight series (all but consumption and the unemployment rate) the KPSS statistic equals or exceeds the \(1 \%\) critical value leading to a rejection of the null hypothesis of stationarity in favor of the alternative of a unit root. This is consistent with the ADF test which failed to reject a unit root for these series.

For the consumption series the KPSS statistic has a p-value of \(12 \%\), which does not reject the hypothesis of stationarity. Recall that the ADF test failed to reject the hypothesis of a unit root. Thus neither test leads to a decisive result; as a pair the two tests are inconclusive. In this context I recommend staying with the prediction of economic theory (consumption is a martingale) as it is not rejected by a hypothesis test. The KPSS fails to reject stationarity but that does not mean that the series is stationary.

An interesting case is the unemployment rate series. It has \(\mathrm{KPSS}_{2}=0.14\) with a p-value of \(6 \%\). This is borderline significant for rejection of stationarity. On the other hand, recall that the ADF test had a pvalue of \(1 \%\) rejecting the unit root hypothesis. These results are borderline conflicting. To augment our information we calculate the KPSS \(_{1}\) test as the unemployment rate does not appear to be trended. We find \(\operatorname{KPSS}_{1}=0.19\) with a p-value of \(30 \%\). This is clearly in the non-rejection region, failing to provide evi- dence against stationarity. As a whole, the ADF test (reject unit root), the \(\mathrm{KPSS}_{1}\) test (accept stationarity), and the \(\mathrm{KPSS}_{2}\) test (borderline reject stationarity), taken together are consistent with the interpretation that the unemployment rate is a stationary process.

The \(\mathrm{KPSS}_{2}\) test can be implemented in Stata using the command \({ }^{7} \mathrm{kpss}\) y, \(\operatorname{maxlag}(\mathrm{q})\). For the KPSS \(_{1}\) test add the option notrend. The command reports the KPSS statistics for \(M=1, \ldots, q\), as well as asymptotic critical values. Approximate asymptotic p-values are not reported.

16.15 Spurious Regression

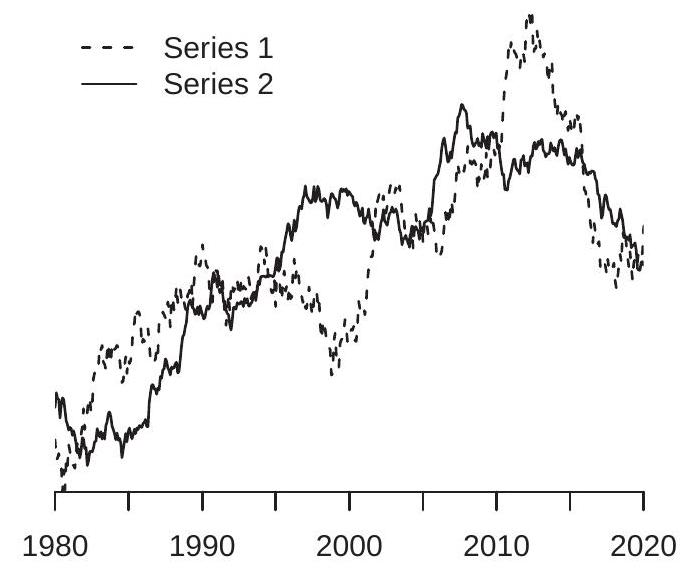

One of the most empirically relevant discoveries from the theory of non-stationary time series is the phenomenon of spurious regression. This is the finding that two statistically independent series, if both unit root processes, are likely to fool traditional statistical analysis by appearing to be statistically related by both eyeball scrutiny and traditional statistical tests. The phenomenon was observed \({ }^{8}\) and named by Granger and Newbold (1974) and explained using the theory of non-stationary time series by Phillips (1986). The primary lesson is that it is easy to be tricked by non-stationary time-series but the problem disappears if we pay suitable attention to dynamic specification.

- Two Unrelated Random Walks

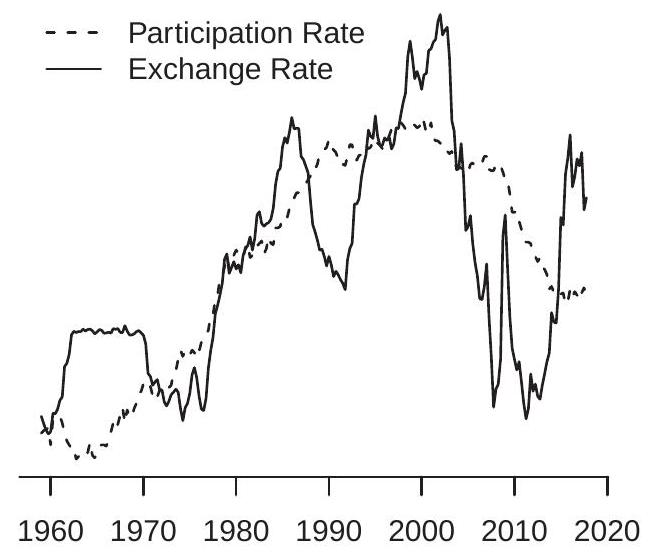

- Exchange and Labor Force Participation Rates

Figure 16.5: Plots of Empirical Series

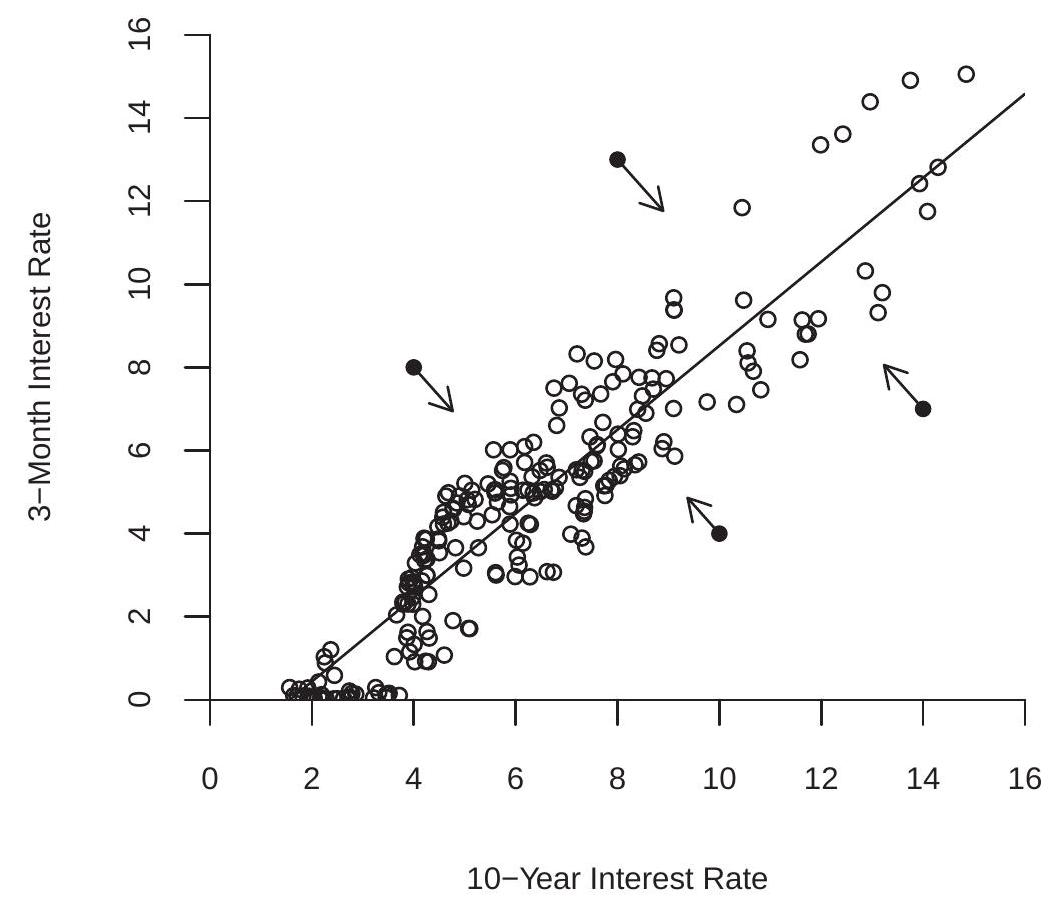

To illustrate the problem examine Figure 16.5(a). Displayed are two time series, monthly for 19802018. A casual review of the graphs shows that both series are generally increasing over 1980-2010 with a no-growth period around 2000, and the series display a downward trend for the final decade. A more refined perusal may appear to reveal that Series 2 leads Series 1 by about five years, in the sense that Series 2 reaches turning points about five years before Series 1. A casual observer is likely to deduce based on Figure 16.5(a) that the two time series are strongly related.

\({ }^{7}\) The command kpss is not part of the standard package, but can be installed by typing ssc install kpss.

\({ }^{8}\) In numerical simulations. However the truth is that Series 1 and Series 2 are statistically independent random walks generated by computer simulation, each standardized to have mean zero and unit variance for the purpose of visual comparison. The “fact” that both series are generally upward trended and have “similar” turning points are statistical accidents. Random walks have an uncanny ability to fool casual analysis. Newspaper (and other journalistic) articles containing plots of time series are routinely subject to the tricks of Figure 16.5(a). Economists are also routinely tricked and fooled.

Traditional statistical examination of the series in Figure 16.5(a) can also lead to a false inference of a strong relationship. A linear regression of Series 1 on Series 2 yields a slope coefficient of \(0.76\) with classical standard error of \(0.03\). The t-ratio for the test of a zero slope is \(T=26\). The equation \(R^{2}\) is \(0.59\). These traditional statistics support the incorrect inference that the two series are strongly related.

Spurious relationships of this form are commonplace in economic time series. An example is shown in Figure 16.5(b), which displays the U.S. labor force participation rate and U.S.-Canada exchange rate, quarterly for 1960-2018. As a visual aid both series have been normalized to have mean zero and unit variance. Both series appear to grow at a similar rate from 1960-2000, though the exchange rate is more volatile. From 2000-2018 they reverse course, with both series declining. The visual evidence is supported by traditional statistics. A linear regression of labor participation on the exchange rate yields a slope coefficient of \(0.70\) with a clasical standard error of \(0.05\). The t-ratio for the test of a zero slope is \(T=15\). The equation \(R^{2}\) is \(0.49\). The visual and statistical evidence support the inference that the two series are related.

This empirical “finding” that the labor participation and exchange rates are related does not make economic sense. Is this an example of a spurious regression between non-stationary variables? A visual inspection of each series supports the contention that each is non-stationary and may be well characterized as a unit root process. We saw in Sections \(16.13\) and \(16.14\) that the ADF and KPSS tests support the hypothesis that the exchange rate is a unit root process. Similar tests reach the same conclusion for labor force participation. Thus the two series are reasonably characterized as unit root processes and these two series could be an empirical example of a spurious regression.

For a formal framework assume that the series \(Y_{t}\) and \(X_{t}\) are random walk processes

\[ \begin{aligned} Y_{t} &=Y_{t-1}+e_{1 t} \\ X_{t} &=X_{t-1}+e_{2 t} \end{aligned} \]

where \(\left(e_{1 t}, e_{2 t}\right)\) are i.i.d., mean zero, mutually uncorrelated, and normalized to have unit variance. Let \(Y_{t}^{*}\) and \(X_{t}^{*}\) denote demeaned versions of \(Y_{t}\) and \(X_{t}\). From the FCLT they satisfy

\[ \left(\frac{1}{\sqrt{n}} Y_{\lfloor n r\rfloor}^{*}, \frac{1}{\sqrt{n}} X_{\lfloor n r\rfloor}^{*}\right) \underset{d}{\longrightarrow}\left(W_{1}^{*}(r), W_{2}^{*}(r)\right) \]

where \(W_{1}^{*}(r)\) and \(W_{2}^{*}(r)\) are demeaned Brownian motions.

Applying the CMT the sample correlation has the asymptotic distribution

\[ \widehat{\rho}=\frac{\frac{1}{n^{2}} \sum_{i=1}^{n} Y_{i}^{*} X_{i}^{*}}{\left(\frac{1}{n^{2}} \sum_{i=1}^{n} Y_{i}^{* 2}\right)^{1 / 2}\left(\frac{1}{n^{2}} \sum_{i=1}^{n} X_{i}^{* 2}\right)^{1 / 2}} \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W_{1}^{*} W_{2}^{*}}{\left(\int_{0}^{1} W_{1}^{* 2}\right)^{1 / 2}\left(\int_{0}^{1} W_{2}^{* 2}\right)^{1 / 2}} . \]

The right-hand-side is a random variable. Furthermore it is also non-degenerate (indeed, it is non-zero with probability one). Thus the sample correlation \(\widehat{\rho}\) remains random in large samples.

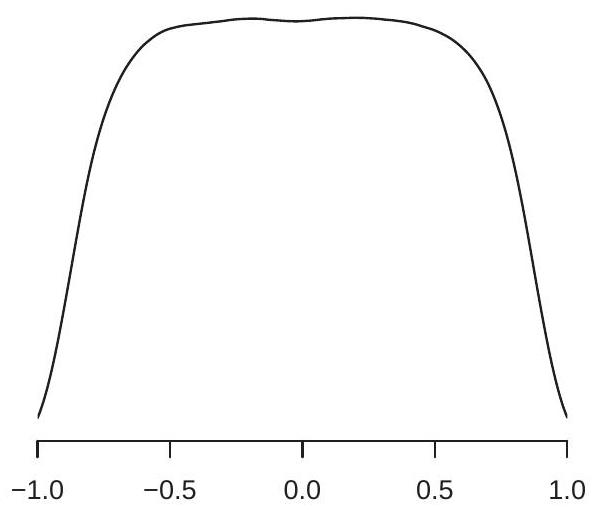

To understand magnitudes, Figure 16.6(a) displays the asymptotic distributon \({ }^{9}\) of \(\widehat{\rho}\). The density has most probability mass in the interval \([-0.5,0.5]\), over which the density is essentially flat. This means that

\({ }^{9}\) Calculated by simulation from one million simulation draws of samples of size \(n=10,000\). the sample correlation has a diffuse distribution. Above we saw that the two simulated random walks had a sample correlation \({ }^{10}\) of \(0.76\) and the two empirical series a sample correlation of \(0.70\). We can now see that these results are consistent with the distribution shown in Figure 16.6(a) and are therefore uninformative regarding the underlying relationships.

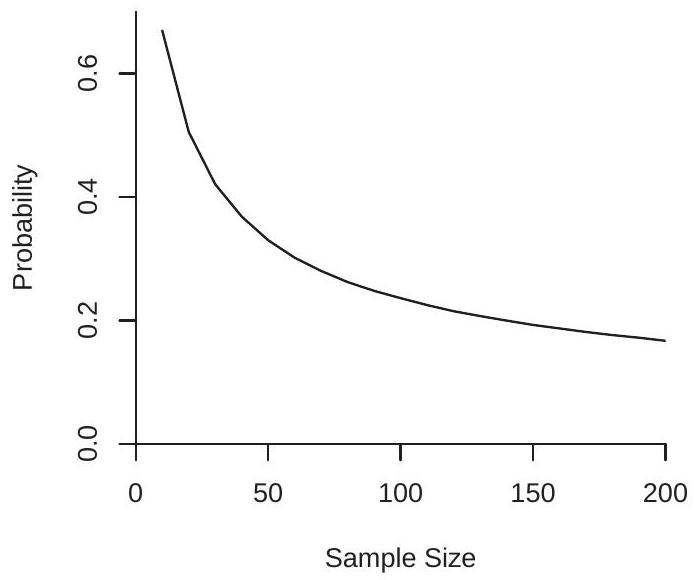

- Asymptotic Density of Sample Correlation

- Coverage Probability of Nominal 95% Interval

Figure 16.6: Properties of Spurious Regression

We can also examine the regression estimators. The slope coefficient from a regression of \(Y_{t}\) on \(X_{t}\) has the asymptotic distribution

\[ \widehat{\beta}=\frac{\frac{1}{n^{2}} \sum_{i=1}^{n} Y_{i}^{*} X_{i}^{*}}{\frac{1}{n^{2}} \sum_{i=1}^{n} X_{i}^{* 2}} \underset{d}{\longrightarrow} \frac{\int_{0}^{1} W_{1}^{*} W_{2}^{*}}{\int_{0}^{1} W_{2}^{* 2}} . \]

This is a non-degenerate random variable. Thus the slope estimator remains random in large samples and does not converge in probability.

Now consider the classical t-ratio \(T\). It has the asymptotic distribution

\[ \frac{1}{n^{1 / 2}} T=\frac{\frac{1}{n^{2}} \sum_{i=1}^{n} Y_{i}^{*} X_{i}^{*}}{\left(\frac{1}{n^{2}} \sum_{i=1}^{n} X_{i}^{* 2}\right)^{1 / 2}\left(\frac{1}{n^{2}} \sum_{i=1}^{n}\left(Y_{i}^{*}-X_{i}^{*} \widehat{\beta}\right)^{2}\right)^{1 / 2}} \underset{d}{\rightarrow} \frac{\int_{0}^{1} W_{1}^{*} W_{2}^{*}}{\left(\int_{0}^{1} W_{2}^{* 2}\right)^{1 / 2}\left(\int_{0}^{1}\left(W_{1}^{*}-W_{2}^{*} \frac{\int_{0}^{1} W_{1}^{*} W_{2}^{*}}{\int_{0}^{1} W_{2}^{* 2}}\right)^{2}\right)^{1 / 2}} . \]

This is non-degenerate. Thus the \(\mathrm{t}\)-ratio has an asymptotic distribution only after normalization by \(n^{1 / 2}\), meaning that the unnormalized t-ratio diverges in probability!

To understand the utter failure of classical inference theory observe that the regression equation is

\[ Y_{t}=\alpha+\beta X_{t}+\xi_{t} \]